Your chatbot understands "I want to book a flight" perfectly, but completely fumbles "Can you help me reserve a plane ticket?" Sound familiar? Intent confusion, lost conversation context, and limited testing capabilities plague many chatbot builders, turning what should be helpful virtual assistants into frustrating dead ends for users.

This guide breaks down the most common NLP challenges in chatbots and delivers practical solutions you can implement immediately. You'll learn how to sharpen intent recognition with proper training data, maintain context across multi-turn conversations, build seamless multi-step workflows, and leverage personalization to create chatbots that actually understand your users.

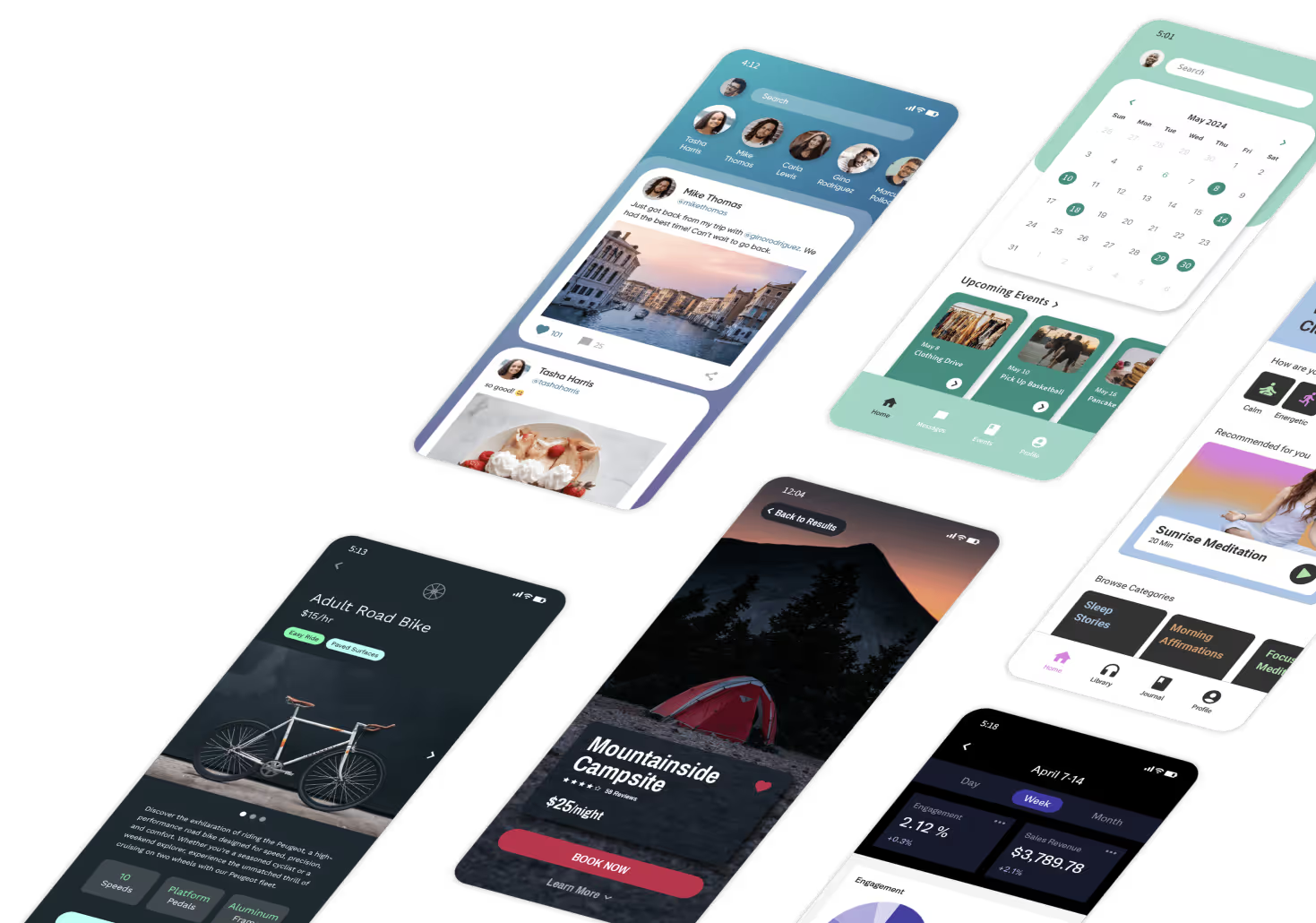

Adalo is a no-code app builder for database-driven web apps and native iOS and Android apps: you build one version and publish it to the web, the Apple App Store, and Google Play. It makes implementing these best practices significantly easier. With built-in integrations for AI tools like OpenAI's GPT, features like "Ask ChatGPT" actions, and robust database capabilities for context storage, you can create sophisticated NLP chatbots without writing a single line of code.

Common NLP Problems in Chatbot Builders

Chatbot platforms for NLP applications often encounter recurring challenges that can disrupt the user experience. Tackling these issues head-on is crucial to improving chatbot performance and user satisfaction.

Poor Intent Recognition

One major issue is the bot's inability to correctly identify user intentions. This often stems from imbalanced training data or overly similar intent phrases. For instance, if one intent has significantly more training examples than another, the model may become biased, leading to misclassifications.

A classic problem arises when intents like "book_bus" and "book_train" are treated as separate categories. If the training phrases for these intents are too similar, the bot may confuse them. A better strategy is to consolidate these into a single "booking" intent and use entities to differentiate between options like buses and trains.
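As a rough illustration of the consolidated approach, the sketch below models every request as a single "booking" intent and pulls the transport mode out as an entity. The synonym table and function names are hypothetical, and the sketch assumes the message has already been classified as a booking request.

```python
import re
from typing import Optional

# Hypothetical entity vocabulary: surface forms mapped to a canonical transport mode.
TRANSPORT_SYNONYMS = {"bus": "bus", "coach": "bus", "train": "train", "rail": "train"}


def extract_transport(utterance: str) -> Optional[str]:
    """Return the first transport entity mentioned in the utterance, if any."""
    for word in re.findall(r"[a-z]+", utterance.lower()):
        if word in TRANSPORT_SYNONYMS:
            return TRANSPORT_SYNONYMS[word]
    return None


def parse_booking(utterance: str) -> dict:
    """Model the request as one "booking" intent plus a transport entity."""
    return {"intent": "booking", "transport": extract_transport(utterance)}


print(parse_booking("I need a train ticket to Boston"))
print(parse_booking("Book me a coach for Friday"))
```

Because the intent classifier only has to recognize "booking", near-identical phrases no longer compete with each other; the entity lookup carries the bus-versus-train distinction.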

Another pitfall is relying on machine-generated training data, which can introduce sentences that users would never naturally say. This can cause the model to overfit, reducing its ability to handle real-world conversations effectively.

"The accuracy of your bot stands or falls with the quality of your expressions, so make sure to spend enough time on this, as well as reviewing them regularly." - Chatlayer

For best results, each intent should ideally include 40 to 50 training examples. However, for more complex scenarios, this number may need to increase to 200 or even 400 expressions per intent. Simpler intents like "yes" or "no" can work with as few as five examples, but anything more nuanced requires significantly more data. Additionally, without a dedicated out-of-scope intent, chatbots may attempt to force irrelevant queries into existing categories, leading to frustrating user experiences.
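A quick audit of your training data can catch these problems before they reach users. The following is a minimal sketch, assuming the data is available as (utterance, intent) pairs and that the out-of-scope intent is literally named `out_of_scope`; both are illustrative choices rather than a requirement of any particular platform.

```python
from collections import Counter

MIN_EXAMPLES = 40  # lower end of the 40 to 50 guideline above


def audit_training_data(examples, min_examples=MIN_EXAMPLES):
    """Summarize a list of (utterance, intent) pairs."""
    counts = Counter(intent for _, intent in examples)
    if not counts:
        raise ValueError("no training examples provided")
    return {
        "counts": dict(counts),
        "too_few": sorted(i for i, n in counts.items() if n < min_examples),
        "imbalance_ratio": max(counts.values()) / min(counts.values()),
        "has_out_of_scope": "out_of_scope" in counts,
    }


# Toy data set: 50 booking phrases but only 10 cancellation phrases.
examples = [(f"booking phrase {i}", "booking") for i in range(50)]
examples += [(f"cancel phrase {i}", "cancel") for i in range(10)]
report = audit_training_data(examples)
print(report["too_few"], report["imbalance_ratio"], report["has_out_of_scope"])
```

In this toy data set the report flags "cancel" as under-trained, shows a 5:1 imbalance, and notes that no out-of-scope intent exists yet.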

Lost Context in Multi-Turn Conversations

Many NLP platforms struggle with maintaining context in multi-turn conversations. Often, these tools treat each user message as a standalone input, ignoring prior exchanges. This "stateless" approach means chatbots frequently lose track of the conversation, forcing users to repeat themselves—a frustrating experience for anyone.

Context limitations are further complicated by the finite memory of Large Language Models. If too much conversation history is included, the bot may "forget" earlier parts of the chat due to context window constraints.

"A great conversational bot doesn't require users to type too much, talk too much, repeat themselves several times, or explain things that the bot should automatically know and remember." - Microsoft

Another downside of maintaining long conversation histories is the increased cost. For example, using GPT-3.5-turbo through integrations costs roughly $0.002 per 1,000 tokens, and including the full chat history in every exchange can quickly drive up expenses. This is where unlimited database records become essential—platforms with storage caps force you to choose between conversation quality and cost management.
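To see how quickly the cost grows, here is a back-of-the-envelope sketch using the price quoted above. It assumes every turn adds roughly the same number of tokens and counts only the tokens sent to the model, so treat the numbers as illustrative. The window size is a hypothetical setting.

```python
PRICE_PER_1K_TOKENS = 0.002  # USD, the figure quoted above


def full_history_cost(turns: int, tokens_per_turn: int) -> float:
    """Cost when each request resends every earlier turn plus the new one."""
    total_tokens = sum(turn * tokens_per_turn for turn in range(1, turns + 1))
    return total_tokens / 1000 * PRICE_PER_1K_TOKENS


def windowed_cost(turns: int, tokens_per_turn: int, window: int) -> float:
    """Cost when each request sends at most the last `window` turns."""
    total_tokens = sum(min(turn, window) * tokens_per_turn for turn in range(1, turns + 1))
    return total_tokens / 1000 * PRICE_PER_1K_TOKENS


print(f"full history: ${full_history_cost(20, 100):.3f}")
print(f"last 5 turns: ${windowed_cost(20, 100, 5):.3f}")
```

With full history, the tokens sent grow roughly with the square of the number of turns, while a fixed window keeps growth linear.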

Multi-Step Query Processing Issues

When conversations involve multiple steps or complex workflows, things can get messy. For example, if a user introduces a new topic while the bot is gathering information for an ongoing task, the bot may become confused, lose track of its current task, or provide irrelevant responses. This can lead to processes restarting unnecessarily or breaking down entirely.

Visual workflow builders often exacerbate these problems. Managing multi-step workflows with conditional logic can become cumbersome, especially as complexity grows. Latency is another concern—since Large Language Models need time to process requests, adding multiple layers of actions or lengthy prompts can slow response times noticeably.

Bots that need to query large databases during multi-step interactions are particularly prone to lag. Without proper database optimization to store conversation states, the bot may fail to remember critical information across multiple turns. Platforms with no data caps on paid plans eliminate this constraint, allowing you to store comprehensive conversation histories without worrying about hitting limits.

Limited Testing and Optimization Tools

Another shortcoming of many platforms is the lack of robust tools for testing and optimizing chatbot performance. Developers often struggle to identify weak spots, such as underperforming intents or points where users abandon conversations. Additionally, visual interfaces can make it difficult to systematically audit conversation flows. Debugging logical errors across dozens of interconnected actions and conditional branches can be tedious and time-consuming.

Solutions for NLP Challenges in Chatbots

Addressing NLP challenges in chatbots often involves leveraging pre-trained AI models, maintaining efficient data management, and using analytics to refine performance. The following solutions can be implemented without coding expertise.

Use Pre-Trained AI Models for Intent Recognition

Building an NLP model from scratch can be daunting, but integrating pre-trained models like OpenAI's GPT-3.5 Turbo simplifies the process. Platforms such as Adalo allow you to connect directly by adding your OpenAI "Secret Key" in the app's settings. From there, you can use the "Ask ChatGPT" action for tasks like text processing, sentiment analysis, and language translation.

For more complex workflows, tools like n8n act as middleware, offering specialized nodes (e.g., "Message a Model" or "Classify Text") to handle multi-step processes while keeping costs predictable by charging only for complete workflows.

To enhance intent recognition, consider incorporating pre-trained language models, such as the word vectors that ship with spaCy's pipelines or transformer models like BERT. These models excel at understanding linguistic relationships (for example, recognizing that "apples" and "pears" are conceptually related) even with limited training data. Additionally, tools like Duckling (for structured data like dates or distances) and spaCy (for extracting names and places) can reduce the need for extensive manual annotation.

Keep prompts concise to minimize latency. For example, include clear instructions like, "Only return the updated sentence, don't add any extra text." This ensures the AI stays focused on the task. Enable settings for automatic text adjustments, such as casing and diacritics, to prevent the model from being overly sensitive to minor variations.

Store Context Data for Seamless Conversations

Maintaining context across multiple exchanges is crucial for a smooth chatbot experience. One method is to update a single database record with each user interaction. For instance, in Adalo, you can pass this record into the "History" field of your AI prompt, enabling the chatbot to reference past conversations. This transforms a stateless chatbot into one that remembers user interactions.

Beyond storing raw conversation history, use slots—categorical variables that hold specific data, such as user preferences or account details. Slots act as the chatbot's memory, allowing it to apply conditional logic based on stored values rather than unstructured text.

"Slots save values to your assistant's memory, and entities are automatically saved to slots that have the same name." - Rasa

Be mindful of token limits in large language models, as longer histories consume more resources. Use conversation history sparingly, periodically clearing it to avoid exceeding these limits. To reduce confusion in multi-turn dialogues, consolidate similar intents (e.g., "inform_name" and "inform_address") into one general "inform" intent, using slots or entities to differentiate between details. This approach also ensures consistent backend logic.
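The slot idea can be sketched in a few lines. This example assumes a single general "inform" intent whose extracted entities are copied into slots of the same name; the slot names and prompts are hypothetical.

```python
REQUIRED_SLOTS = ("name", "address")  # hypothetical slots for a delivery flow


def fill_slots(slots: dict, entities: dict) -> dict:
    """Copy each extracted entity into the slot that shares its name."""
    updated = dict(slots)
    for name, value in entities.items():
        if name in REQUIRED_SLOTS:
            updated[name] = value
    return updated


def next_prompt(slots: dict) -> str:
    """Conditional logic driven by stored values rather than raw text."""
    for name in REQUIRED_SLOTS:
        if not slots.get(name):
            return f"What is your {name}?"
    return "Thanks, I have everything I need."


slots = fill_slots({}, {"name": "Ada"})
print(next_prompt(slots))
slots = fill_slots(slots, {"address": "12 Main St"})
print(next_prompt(slots))
```

The same backend logic handles both details, because the bot only asks "which slot is still empty?" instead of maintaining a separate intent for each piece of information.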

With unrestricted database storage on paid plans, you can maintain comprehensive conversation histories without worrying about hitting record limits—a common constraint on platforms like Bubble that charge based on Workload Units and cap database records.

Build Multi-Step Workflows with Conditional Logic

Visual workflow builders enable the creation of multi-step conversations with branching logic and conditional triggers. By referencing stored values (slots) at each step, your chatbot can decide the best course of action. For instance, if a user pauses a booking process to ask about pricing, the workflow can branch to address the pricing query and then return to the booking flow without losing progress.

To handle topic changes, use conditional checks to decide whether to pause the current task, save its state, and address the new query—or guide the user back to the original task. Including an "out-of-scope" intent ensures the chatbot can gracefully manage queries outside its domain.
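One way to picture the pause-and-resume behavior is as a stack of tasks. The sketch below is a simplified model under that assumption, not how any specific workflow builder stores state; the class and task names are hypothetical.

```python
class DialogueState:
    """Tracks the active task and any tasks paused by a topic change."""

    def __init__(self):
        self.active = None  # task currently collecting information
        self.paused = []    # stack of interrupted tasks with their saved slots

    def start(self, task, slots=None):
        """Begin a task, pausing whatever was in progress."""
        if self.active is not None:
            self.paused.append(self.active)
        self.active = {"task": task, "slots": dict(slots or {})}

    def finish(self):
        """Complete the active task and resume the most recently paused one."""
        finished = self.active
        self.active = self.paused.pop() if self.paused else None
        return finished


state = DialogueState()
state.start("booking", {"destination": "Boston"})
state.start("pricing")      # user interrupts to ask about prices
state.finish()              # pricing question answered
print(state.active)         # booking resumes with its slots intact
```

Saving the slots alongside the task is what lets the booking flow pick up where it left off instead of restarting.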

Adalo's visual builder displays up to 400 screens at once on a single canvas, making it easier to visualize and manage complex conversation flows compared to platforms with limited viewport capabilities. This bird's-eye view helps you spot logical gaps and optimize user journeys across your entire chatbot experience.

Add Personalization and Sentiment Analysis

Personalization enhances user experience significantly. Store preferences in Adalo Collections and use Magic Text to dynamically tailor responses. For sentiment analysis, configure prompts to require simple sentiment tags like "Positive," "Negative," or "Neutral." This allows the chatbot to adjust its tone based on the user's emotional state.

Additionally, "developer" or "system" messages can define the chatbot's persona, tone, and business rules, ensuring a consistent and engaging experience. To guide the model's responses, include 3–5 examples of desired input/output pairs in your prompt setup—a technique known as few-shot learning. Use structured formats like Markdown headers or XML tags (e.g., <user_query>) to help the model distinguish between instructions, examples, and user data.

Key personalization strategies:

- Store user preferences and interaction history in your database

- Use sentiment analysis to adjust response tone dynamically

- Implement few-shot learning with 3-5 example input/output pairs

- Define consistent persona and business rules through system messages
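To make the prompt structure concrete, here is a minimal sketch that assembles a system message, three few-shot pairs, and an XML-tagged user query, then normalizes the model's reply to one of the three sentiment tags. The role/content message layout follows the common chat format; the example sentences, function names, and the fallback to "Neutral" are assumptions for illustration, and no API call is made.

```python
ALLOWED_TAGS = {"Positive", "Negative", "Neutral"}

# Hypothetical few-shot examples: (user text, desired tag).
FEW_SHOT_EXAMPLES = [
    ("I love how fast this was!", "Positive"),
    ("My order never arrived.", "Negative"),
    ("What time do you open?", "Neutral"),
]


def build_messages(user_text: str) -> list:
    """Assemble system instructions, few-shot pairs, and the tagged user query."""
    messages = [{
        "role": "system",
        "content": "You are a sentiment tagger. Reply with exactly one word: "
                   "Positive, Negative, or Neutral.",
    }]
    for text, tag in FEW_SHOT_EXAMPLES:
        messages.append({"role": "user", "content": f"<user_query>{text}</user_query>"})
        messages.append({"role": "assistant", "content": tag})
    messages.append({"role": "user", "content": f"<user_query>{user_text}</user_query>"})
    return messages


def parse_sentiment(reply: str) -> str:
    """Normalize the reply; fall back to Neutral if it is not a known tag."""
    tag = reply.strip().strip(".").capitalize()
    return tag if tag in ALLOWED_TAGS else "Neutral"


print(len(build_messages("Where is my refund?")))
print(parse_sentiment(" negative.\n"))
```

Validating the reply before acting on it keeps the tone-adjustment logic from breaking when the model returns extra text.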

Monitor Analytics for Continuous Improvement

Analytics play a key role in identifying areas for improvement. Track metrics like drop-off rates and intent success rates to pinpoint weak spots in your chatbot's performance.
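Drop-off rate is simple to compute once you know how many users reach each step of a flow. The sketch below assumes you can export those counts as an ordered list; the step names and numbers are made up for illustration.

```python
def drop_off_rates(step_counts):
    """step_counts: ordered list of (step_name, users_who_reached_it).

    Returns the share of users lost between each step and the next one.
    """
    rates = {}
    for (name, reached), (_, next_reached) in zip(step_counts, step_counts[1:]):
        rates[name] = 0.0 if reached == 0 else (reached - next_reached) / reached
    return rates


funnel = [("greeting", 100), ("destination", 80), ("payment", 40)]
for step, rate in drop_off_rates(funnel).items():
    print(f"{step}: {rate:.0%} of users left before the next step")
```

Here the jump from "destination" to "payment" loses half the users, which marks that step as the first place to investigate.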

Set confidence score thresholds to filter out ambiguous inputs. If the model's confidence falls below a set level, categorize the input as "None" rather than forcing it into an incorrect intent. Regularly review your training data, and use synthetic data generation to balance underrepresented intents, checking that generated phrases sound like something a real user would say.
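The thresholding rule can be expressed in a few lines. This sketch assumes the classifier returns a confidence score per intent; the 0.7 cutoff is a hypothetical starting point that you would tune against your own conversations.

```python
CONFIDENCE_THRESHOLD = 0.7  # hypothetical cutoff; tune it on real conversations


def resolve_intent(scores: dict, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return the top-scoring intent, or "None" when confidence is too low."""
    if not scores:
        return "None"
    intent, confidence = max(scores.items(), key=lambda item: item[1])
    return intent if confidence >= threshold else "None"


print(resolve_intent({"booking": 0.91, "cancel": 0.06}))
print(resolve_intent({"booking": 0.48, "cancel": 0.45}))
```

The second call returns "None" because neither intent is a clear winner, which lets the bot ask a clarifying question instead of guessing.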

For example, pre-trained models can generate similar phrases to help you meet the recommended 40–50 training examples per intent (or up to 200–400 for complex scenarios). Advanced AI tools can even augment datasets, increasing them to as many as 25,000 utterances.

Adalo's X-Ray feature identifies performance issues before they affect users, helping you proactively optimize your chatbot's response times and database queries. This diagnostic capability is particularly valuable for chatbots handling high volumes of concurrent conversations.

Building NLP Chatbots with Adalo

Adalo's AI-powered platform makes it straightforward to create and deploy chatbots powered by natural language processing. With its visual interface—described as "easy as PowerPoint"—you can connect to AI models, store conversation histories, and deploy apps across multiple platforms from a single build. These features help sidestep common challenges in NLP development, enabling you to create efficient and responsive chatbots.

Why Adalo Works for NLP Chatbots

Adalo's architecture addresses the core challenges of chatbot development. The platform's modular infrastructure scales to serve apps with millions of monthly active users, with no upper ceiling—critical for chatbots that may experience sudden traffic spikes. Unlike app wrappers that hit speed constraints under load, Adalo's purpose-built architecture maintains performance at scale.

The late 2025 launch of Adalo 3.0 completely overhauled the backend infrastructure, making apps 3-4x faster than before. This speed improvement is particularly important for chatbots, where response latency directly impacts user experience. Most third-party platform ratings and comparisons predate this infrastructure overhaul, so older reviews may not reflect current performance capabilities.

Connecting NLP Models in Adalo

Adalo offers tools like the "Ask ChatGPT" Custom Action and External Collections to seamlessly integrate AI models, whether you're using OpenAI or custom large language models (LLMs). By providing API key integration and configurable endpoints, headers, and authentication, Adalo ensures flexibility and adaptability for various NLP tasks.

To get started, input your OpenAI "Secret Key" in Adalo's API Keys section. This key applies across all apps within your organization. Using Magic Text, you can pull data from the database or screen inputs directly into AI prompts, enabling tasks like intent recognition and more.

One of the standout features is flexibility—you're not tied to a single AI provider. Custom Actions, which are essential for NLP integration, are available with Adalo's Professional plan ($36/month, billed annually) or higher. This pricing includes unlimited usage with no App Actions charges, eliminating the bill shock that can occur with usage-based platforms.

"Before ChatGPT, each of these [NLP tasks] would've required its own tool or API, but now you can just use one simple tool and rely on the power of AI to make your apps better than ever." - Adalo

Looking ahead, AI Builder for prompt-based app creation and editing is due for release in early 2026, promising even faster chatbot development through natural language requests. Magic Start already generates complete app foundations from descriptions, and Magic Add lets you add features by simply describing what you want.

Managing Context with Adalo's Database

Adalo's relational database simplifies the process of managing conversation history. By storing data in Collections, you can ensure smooth, multi-turn dialogues by passing conversation history back to AI models using the "History" field.

For the best results, consider a dual-storage strategy: save each message and response as individual records in a "Messages" collection (for UI display) while maintaining a single "History" text property in a "Conversations" record. This allows you to provide context to AI models without overwhelming the system.
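The dual-storage idea can be modeled outside of Adalo to show what each write does. In this sketch the list stands in for the "Messages" collection and the string stands in for the "History" text property; the class name and the `role: text` line format are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationStore:
    messages: list = field(default_factory=list)  # stands in for the "Messages" collection
    history: str = ""                             # stands in for the "History" text property

    def record(self, role: str, text: str) -> None:
        """Save the message as its own record and append it to the running history."""
        self.messages.append({"role": role, "text": text})
        self.history += f"{role}: {text}\n"


store = ConversationStore()
store.record("user", "I want to book a flight")
store.record("bot", "Where are you flying to?")
print(len(store.messages))
print(store.history)
```

The individual records drive the chat UI, while the single history string is what gets passed to the model as context.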

With no record limit cap on paid plans, you can store comprehensive conversation histories without worrying about database constraints. This is a significant advantage over platforms like Bubble, which impose record limits and charge based on Workload Units with calculations that can be unclear and unpredictable.

Be cautious with how much history you include in each AI prompt. Longer prompts consume more tokens, which can quickly fill the AI model's context window. To manage costs and avoid hitting limits, periodically trim older exchanges from the "History" text you send to the model, while keeping every message in your "Messages" collection for analytics and training purposes.
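Trimming can be as simple as keeping the most recent lines that fit a token budget. The sketch below assumes one message per line and uses a rough estimate of four characters per token, which is a common rule of thumb rather than an exact tokenizer count.

```python
def trim_history(history: str, max_tokens: int, chars_per_token: int = 4) -> str:
    """Keep the newest lines of history that fit within an estimated token budget."""
    budget = max_tokens * chars_per_token  # approximate budget in characters
    kept = []
    used = 0
    for line in reversed(history.splitlines()):
        cost = len(line) + 1  # +1 for the newline separator
        if used + cost > budget:
            break
        kept.append(line)
        used += cost
    return "\n".join(reversed(kept))


history = "user: hi\nbot: hello\nuser: book a flight"
print(trim_history(history, max_tokens=8))
```

Walking the lines from newest to oldest ensures the model always sees the latest exchange, and only the oldest context is dropped first.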

Deploying Chatbots Across Platforms with Adalo

Once your chatbot is ready, Adalo makes it easy to deploy across platforms. Its single-codebase architecture allows you to release your chatbot on iOS, Android, and the web simultaneously. The Staging Preview feature ensures consistent testing across platforms. Any updates you make in the editor are automatically pushed to all platforms, eliminating the hassle of managing multiple codebases.

This is a key differentiator from platforms like Bubble, whose mobile app solution is a wrapper for the web app. Wrappers can introduce performance challenges at scale, and a change to one app version doesn't automatically update the web, Android, and iOS apps deployed to their respective app stores. Adalo compiles to true native code, resulting in faster load times and smoother performance on mobile devices.

Over 3 million apps have been created on Adalo, processing 20 million+ daily data requests with 99%+ uptime. This track record demonstrates the platform's capability to handle large-scale deployments reliably.

Comparing Chatbot Building Platforms

When choosing a platform for your NLP chatbot, understanding the trade-offs between options helps you make an informed decision.

| Feature | Adalo | Bubble | FlutterFlow |
|---|---|---|---|
| Starting Price | $36/month | $59/month | $70/month per user |
| Database Records | Unlimited on paid plans | Limited by Workload Units | External database required |
| Usage Charges | None | Workload Units | Varies by database choice |
| Mobile Apps | True native iOS/Android | Web wrapper | True native |
| App Store Publishing | Included, unlimited updates | Limited re-publishing | Included |
| Technical Skill Required | No-code | No-code | Low-code (technical users) |

Bubble offers more customization options, but that flexibility often results in slower applications that suffer under increased load. Many Bubble users end up hiring experts to optimize performance, and reaching millions of monthly active users is typically only achievable with professional help. The Workload Units pricing model can also create unpredictable costs as your chatbot scales.

FlutterFlow is a low-code platform designed for technical users. Users need to set up and manage their own external database, which adds a significant learning curve. The ecosystem is rich with experts because so many people need help, and teams often spend significant sums chasing scalability. The builder also has a limited viewport, making it hard to view more than 2 screens at once, compared to Adalo's 400-screen canvas view.

Glide and Softr focus on spreadsheet-based apps but don't support Apple App Store or Google Play Store publishing. Glide starts at $60/month with data record limits, while Softr starts at $167/month for Progressive Web Apps with record restrictions. For chatbots that need native mobile deployment, these platforms aren't viable options.

Conclusion

Creating NLP-powered chatbots without coding is not only possible but increasingly efficient. The common hurdles—poor intent recognition, lost conversational context, multi-step query challenges, lack of personalization, and limited testing options—can all be tackled with the right platform and approach.

AI-assisted platforms can cut development time by 60-80% compared to traditional methods. Gartner's forecast highlights that by 2026, 70% of new applications will rely on low-code or no-code technologies. The tools and techniques covered in this guide position you to be part of that shift.

The rise of generative AI is reshaping customer interactions. According to Zendesk's Customer Experience Trends Report, "70 percent of CX leaders believe bots are becoming skilled architects of highly personalized customer journeys." With platforms handling the technical complexity, you can launch your chatbot in days or weeks rather than months.

Adalo's combination of AI-assisted building, unlimited database storage, and true native mobile deployment makes it well-suited for chatbot development at any scale.

FAQ

| Question | Answer |
|---|---|
| Why choose Adalo over other app building solutions? | Adalo is an AI-powered app builder that creates true native iOS and Android apps from a single codebase. Unlike web wrappers, it compiles to native code and publishes directly to both the Apple App Store and Google Play Store. With unlimited database records on paid plans and no usage-based charges, you avoid the bill shock and scaling constraints common on other platforms. |
| What's the fastest way to build and publish an app to the App Store? | Adalo's drag-and-drop interface and AI-assisted building let you go from idea to published app in days rather than months. The platform handles the complex App Store submission process, so you can focus on your chatbot's features and user experience instead of wrestling with certificates, provisioning profiles, and store guidelines. |
| Can I easily build an NLP chatbot without coding experience? | Yes. Adalo provides built-in integrations for AI tools like OpenAI's GPT, features like "Ask ChatGPT" actions, and robust database capabilities for context storage. The visual interface is described as "easy as PowerPoint," allowing you to create sophisticated NLP chatbots without writing code. |
| How do I improve intent recognition in my chatbot? | Use pre-trained AI models like OpenAI's GPT-3.5 Turbo and ensure each intent has 40-50 quality training examples (or up to 200-400 for complex scenarios). Consolidate similar intents and use entities to differentiate between options, and always include an out-of-scope intent to handle irrelevant queries gracefully. |
| How can I maintain conversation context in my chatbot? | Store conversation history in your database and pass it to AI prompts with each interaction. In Adalo, use Collections to save messages and update a single database record with each user interaction, then reference this history using the "History" field. Use slots to store specific user data like preferences, and periodically clear older history to manage token limits. |
| Which is more affordable, Adalo or Bubble? | Adalo starts at $36/month with unlimited usage and no record limits on paid plans. Bubble starts at $59/month with usage-based Workload Unit charges and database record limits. Adalo's predictable pricing eliminates the bill shock that can occur with Bubble's usage-based model as your chatbot scales. |
| Is Adalo better than Bubble for mobile chatbot apps? | For mobile apps, Adalo compiles to true native iOS and Android code, while Bubble's mobile solution is a web wrapper. Native apps load faster and perform better, especially under load. Adalo also publishes to both app stores from a single codebase with unlimited updates included. |
| Which is easier for beginners, Adalo or FlutterFlow? | Adalo is designed for non-technical users with a visual builder described as "easy as PowerPoint." FlutterFlow is a low-code platform for technical users that requires setting up and managing an external database. Adalo includes an integrated database with no additional setup required. |
| What plan do I need to integrate AI into my Adalo chatbot? | AI integration through Custom Actions requires Adalo's Professional plan ($36/month billed annually) or higher. This plan gives you access to the "Ask ChatGPT" Custom Action and External Collections, allowing you to connect to OpenAI or custom large language models for NLP tasks. |
| How do I handle multi-step conversations and topic changes? | Use visual workflow builders with conditional logic that references stored values (slots) at each step. When a user changes topics mid-conversation, your workflow can branch to address the new query and then return to the original task without losing progress. Include conditional checks to decide whether to pause the current task, save its state, or guide the user back. |