Improving API response times when working with legacy databases is all about addressing common bottlenecks like slow queries, outdated infrastructure, and inefficient data retrieval. These systems often struggle with issues like high latency, N+1 query problems, and missing indexes, which can lead to user frustration and operational slowdowns.

Key Takeaways:

- Query Optimization: Use tools like EXPLAIN ANALYZE (PostgreSQL) or slow query logs (MySQL) to identify inefficiencies. Fix N+1 query issues with eager loading and optimize joins to reduce overhead.

- Indexing: Add indexes to speed up WHERE, JOIN, and ORDER BY operations. Composite indexes can handle multi-column filtering effectively.

- Caching: Tools like Redis or Memcached reduce repetitive database calls, improving response times for read-heavy APIs.

- Connection Pooling: Reuse database connections to lower latency, especially in high-concurrency setups.

- Batching: Consolidate multiple reads or writes into single transactions to save resources.

Real-World Impact:

Switching from a local SQLite database to a cloud-hosted legacy database increased the total time for 200 queries from 500ms to 4 seconds. However, techniques like caching and connection pooling reduced response times by up to 96%.

Solutions in Action:

Platforms like DreamFactory can transform legacy databases into REST APIs, simplifying integration and improving performance. Pairing this with tools like Adalo lets teams build modern apps that connect seamlessly to legacy systems without overhauling the backend.

By focusing on these optimizations, you can significantly improve API performance while extending the usability of legacy databases.

Finding Performance Bottlenecks in API Integrations

Pinpointing bottlenecks is a critical step in addressing slow response times caused by legacy databases. To improve API performance, you first need to identify exactly where delays occur. Database queries generally follow a clear sequence: parsing, execution, and data packaging. Bottlenecks can crop up at any of these stages, but diagnosing them in legacy systems can be tricky due to outdated tools that lack modern observability features. Understanding these challenges paves the way for targeted optimizations.

Using Profiling Tools to Analyze Performance

Most major databases come equipped with profiling tools that help uncover internal inefficiencies. For instance:

- PostgreSQL: Use EXPLAIN ANALYZE to compare the planner's estimated costs and row counts with actual execution times, enabling precise query tuning.

- MySQL: Activate the slow query log to capture queries taking longer than a chosen threshold, such as 500ms (set through long_query_time), which helps isolate bottlenecks.

- SQL Server: The Execution Plan Viewer in SQL Server Management Studio highlights resource-heavy operations, while Azure SQL users can leverage sys.query_store_wait_stats to monitor wait times caused by resource constraints, locking, or memory issues.

When profiling, focus on three key metrics: latency (round-trip time), throughput (number of requests processed in a given timeframe), and response time (total duration from request to response). Pay close attention to the ratio of rows scanned versus rows returned. A high ratio often indicates missing indexes, leading to inefficient data retrieval. Implementing proper indexing can significantly reduce query times.
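
When the database itself offers little observability, response time can also be measured from the application side. The sketch below is a minimal illustration using Python's built-in sqlite3 module as a stand-in for a legacy database; the helper name timed_query, the orders table, and the threshold value are assumptions made for the example, not part of any particular tool.

```python
import sqlite3
import time

def timed_query(conn, sql, params=(), threshold_ms=500.0, slow_log=None):
    """Run a query, return (rows, elapsed_ms), and record it if it is slow."""
    start = time.perf_counter()
    rows = conn.execute(sql, params).fetchall()
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    # Keep the SQL text, duration, and rows returned for later review.
    if slow_log is not None and elapsed_ms >= threshold_ms:
        slow_log.append((sql, elapsed_ms, len(rows)))
    return rows, elapsed_ms

# Hypothetical demo data: 10,000 orders spread across 100 customers.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(10_000)],
)

slow_log = []
# A threshold of 0 ms logs every query, which is handy while exploring.
rows, elapsed_ms = timed_query(
    conn,
    "SELECT id, total FROM orders WHERE customer_id = ?",
    (42,),
    threshold_ms=0.0,
    slow_log=slow_log,
)
print(f"{len(rows)} rows in {elapsed_ms:.2f} ms")
```

Logging the number of rows returned alongside the duration makes it easier to spot queries that take long but return little, which is one hint that the database is scanning far more rows than it needs.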

Once you've collected these metrics, the next step is to eliminate redundant queries and optimize joins for better performance.

Spotting N+1 Query Problems and Inefficient Joins

The N+1 query problem is a notorious performance drain in API integrations. It happens when an API retrieves a list of N records and then makes N additional queries to fetch related data for each record. This issue is especially common in GraphQL implementations, where each field resolver runs a separate query, making the problem harder to detect. To spot N+1 issues, look for patterns where a single initial query is followed by dozens - or even hundreds - of additional queries. What should be a single database round-trip can quickly spiral into a major performance issue.
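
One way to surface this pattern is to count the statements issued while a single API request is handled. The following sketch uses Python's built-in sqlite3 module and its set_trace_callback hook purely as a stand-in for a legacy database; the customers and orders tables are hypothetical, and a production system would more likely rely on the database's own query log.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
""")
conn.executemany(
    "INSERT INTO customers (id, name) VALUES (?, ?)",
    [(i, f"customer-{i}") for i in range(1, 21)],
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i, 10.0 * i) for i in range(1, 21)],
)
conn.commit()

# Record every statement executed from this point on.
statements = []
conn.set_trace_callback(statements.append)

# The N+1 pattern: one query for the list, then one query per record.
orders = conn.execute("SELECT id, customer_id FROM orders").fetchall()
for _order_id, customer_id in orders:
    conn.execute(
        "SELECT name FROM customers WHERE id = ?", (customer_id,)
    ).fetchone()

conn.set_trace_callback(None)
select_count = sum(
    1 for sql in statements if sql.lstrip().upper().startswith("SELECT")
)
print(f"{select_count} SELECT statements for {len(orders)} orders")
```

A query count that grows in step with the number of records returned is the signature to look for; a well-behaved endpoint should issue a small, constant number of queries regardless of list size.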

Join operations are another frequent source of inefficiency. To avoid problems, try to limit joins to three or four tables in a single query; each additional table enlarges the planner's search space and the intermediate result sets, so cost can grow sharply. When referential integrity guarantees a matching row, prefer INNER JOIN over LEFT JOIN, since it gives the optimizer more freedom to reorder the join and is generally at least as fast. For read-heavy workloads with complex aggregations, consider using materialized views. These pre-compute results during off-peak times, reducing the load during high-traffic periods. Together, these strategies can help you tackle common challenges and improve the performance of legacy databases.
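
The pre-computation idea behind materialized views can be sketched even on engines that lack them. The example below uses Python's built-in sqlite3 module, which has no materialized views, so it emulates one with an ordinary summary table that is rebuilt on demand; on PostgreSQL the equivalent would be CREATE MATERIALIZED VIEW followed by periodic REFRESH MATERIALIZED VIEW. The table names and the refresh_daily_totals helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, order_date TEXT, total REAL);
    CREATE TABLE daily_order_totals (
        order_date TEXT PRIMARY KEY,
        order_count INTEGER,
        revenue REAL
    );
""")
conn.executemany(
    "INSERT INTO orders (order_date, total) VALUES (?, ?)",
    [("2024-01-01", 10.0), ("2024-01-01", 15.0), ("2024-01-02", 7.5)],
)
conn.commit()

def refresh_daily_totals(conn):
    """Rebuild the summary table in one transaction (e.g. from an off-peak job)."""
    with conn:  # commits on success, rolls back if either statement fails
        conn.execute("DELETE FROM daily_order_totals")
        conn.execute("""
            INSERT INTO daily_order_totals (order_date, order_count, revenue)
            SELECT order_date, COUNT(*), SUM(total)
            FROM orders
            GROUP BY order_date
        """)

refresh_daily_totals(conn)

# API reads now hit the small pre-aggregated table instead of scanning orders.
totals = conn.execute(
    "SELECT order_date, order_count, revenue "
    "FROM daily_order_totals ORDER BY order_date"
).fetchall()
print(totals)
```

The trade-off is staleness: results are only as fresh as the last refresh, so this approach suits reports and dashboards better than data that must reflect writes immediately.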

Improving API Performance with Query Tuning and Caching

API Performance Optimization Techniques and Their Impact on Response Times

Once bottlenecks are identified, fine-tuning queries and implementing caching strategies can significantly improve API response times. Here’s how to address these challenges effectively.

Fixing N+1 Queries with Eager Loading

The N+1 query problem occurs when an application makes one query to fetch a list of items and then additional queries for related data, one for each item. Eager loading solves this by retrieving all necessary data in a single database trip. Techniques like JOINs or batching (e.g., WHERE id IN (...)) can consolidate multiple queries into one.

For instance, a Laravel API improved its response time from 27.43 seconds to just 95.72ms by slashing over 4,400 queries down to 10 using eager loading and indexing.

Most modern ORMs provide built-in support for eager loading. In Laravel, you can use the with() method to preload relationships, while Django offers select_related() for foreign-key and one-to-one relationships and prefetch_related() for many-to-many and reverse relationships. The goal is to retrieve all required data upfront, avoiding repetitive queries during iteration.
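
Without an ORM, the same effect can be achieved by hand with the WHERE id IN (...) batching mentioned above. The sketch below is one possible shape for it, using Python's built-in sqlite3 module as a stand-in for the legacy database; the schema and the fetch_orders_with_customers helper are assumptions for illustration.

```python
import sqlite3

def fetch_orders_with_customers(conn):
    """Load orders and their customers in two queries instead of N+1."""
    orders = conn.execute(
        "SELECT id, customer_id, total FROM orders ORDER BY id"
    ).fetchall()
    customer_ids = sorted({customer_id for _, customer_id, _ in orders})
    if not customer_ids:
        return []
    # One placeholder per ID keeps the query parameterized.
    placeholders = ", ".join("?" for _ in customer_ids)
    customers = dict(
        conn.execute(
            f"SELECT id, name FROM customers WHERE id IN ({placeholders})",
            customer_ids,
        ).fetchall()
    )
    # Stitch the related rows together in memory.
    return [
        {"order_id": order_id, "total": total, "customer": customers.get(customer_id)}
        for order_id, customer_id, total in orders
    ]

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
""")
conn.executemany(
    "INSERT INTO customers (id, name) VALUES (?, ?)",
    [(1, "Ada"), (2, "Grace"), (3, "Linus")],
)
conn.executemany(
    "INSERT INTO orders (id, customer_id, total) VALUES (?, ?, ?)",
    [(1, 1, 20.0), (2, 2, 35.5), (3, 1, 12.0), (4, 3, 99.9), (5, 2, 5.0)],
)
conn.commit()

result = fetch_orders_with_customers(conn)
print(result[0])
```

Databases and drivers usually cap the number of bound parameters per statement, so very long ID lists would need to be split into chunks; a JOIN is often the simpler choice when both tables live in the same database.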

Using Indexes and Filters to Reduce Query Overhead

Strategic use of indexes can reduce query execution time by as much as 70–85%. Index columns involved in WHERE, JOIN, and ORDER BY clauses to speed up lookups. Composite indexes are particularly useful for filtering on multiple columns. Tools like EXPLAIN ANALYZE can help identify which queries would benefit most from indexing.
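
The effect of a composite index can be checked directly in the query plan. The sketch below uses Python's built-in sqlite3 module and SQLite's EXPLAIN QUERY PLAN as a lightweight stand-in for EXPLAIN ANALYZE; the orders table, the index name, and the query_plan helper are hypothetical, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return the human-readable detail column of EXPLAIN QUERY PLAN."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT, created_at TEXT)"
)

sql = (
    "SELECT id FROM orders "
    "WHERE customer_id = ? AND status = ? ORDER BY created_at"
)
params = (42, "open")

# Without an index the engine has to scan the whole table and sort the result.
before = query_plan(conn, sql, params)

# Equality columns first, then the ORDER BY column, so one index serves both.
conn.execute(
    "CREATE INDEX idx_orders_customer_status_created "
    "ON orders (customer_id, status, created_at)"
)
after = query_plan(conn, sql, params)

print("before:", before)
print("after: ", after)
```

Column order matters in a composite index: putting the equality filters first and the sort column last lets the engine jump to the matching range and read it already ordered.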

In addition to indexing, query projection - selecting only the columns you need instead of using SELECT * - can significantly cut down on the data processed and transferred, especially when dealing with legacy tables containing numerous columns.

Prepared statements also play a role by precompiling queries, which reduces parsing time and boosts security. Another effective tactic is batching multiple reads or writes into a single transaction, which can improve response times by as much as 45%.
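
As a rough illustration of both ideas, the sketch below sends many rows through a single parameterized statement inside one transaction. It uses Python's built-in sqlite3 module as a stand-in for a legacy database; the events table and the insert_events_batched helper are assumptions for the example, and actual gains depend on the database and network involved.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, action TEXT)"
)

def insert_events_batched(conn, events):
    """Insert all events in one transaction using one parameterized statement."""
    # The connection context manager commits on success and rolls back on error.
    with conn:
        # The statement text is the same for every row, so it is parsed once
        # and re-executed with new parameter values.
        conn.executemany(
            "INSERT INTO events (user_id, action) VALUES (?, ?)", events
        )

events = [(i % 50, "login") for i in range(1_000)]
insert_events_batched(conn, events)

event_count = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
print(event_count)
```

Because values are passed as parameters rather than concatenated into the SQL text, the same pattern also guards against SQL injection.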

These optimizations lay the groundwork for even greater performance improvements through caching and connection pooling.

Adding Caching and Connection Pooling

Caching can dramatically reduce database load, particularly for read-heavy APIs. Tools like Redis or Memcached store frequently accessed query results in memory, eliminating redundant database calls for stable data like user profiles or reference tables. To keep the cache accurate, implement invalidation logic to refresh entries whenever underlying data changes (e.g., after POST or PUT requests).
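
The read-through-then-invalidate flow described above can be sketched as follows. To keep the example self-contained, a small in-process dictionary with a time-to-live stands in for Redis or Memcached, and Python's built-in sqlite3 module stands in for the legacy database; the QueryCache class, the helper functions, and the users table are all hypothetical.

```python
import sqlite3
import time

class QueryCache:
    """Tiny in-memory TTL cache standing in for Redis or Memcached."""

    def __init__(self, ttl_seconds=60.0):
        self.ttl_seconds = ttl_seconds
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            del self._entries[key]  # expired entries count as misses
            return None
        return value

    def set(self, key, value):
        self._entries[key] = (time.monotonic() + self.ttl_seconds, value)

    def invalidate(self, key):
        self._entries.pop(key, None)

def get_profile(conn, cache, user_id, stats):
    """Cache-aside read: try the cache first, fall back to the database."""
    key = f"user:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    stats["db_reads"] += 1
    row = conn.execute(
        "SELECT id, name FROM users WHERE id = ?", (user_id,)
    ).fetchone()
    if row is not None:
        cache.set(key, row)
    return row

def update_name(conn, cache, user_id, name):
    """Write to the database, then drop the stale cache entry."""
    with conn:
        conn.execute("UPDATE users SET name = ? WHERE id = ?", (name, user_id))
    cache.invalidate(f"user:{user_id}")

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (id, name) VALUES (1, 'Ada')")
conn.commit()

cache = QueryCache(ttl_seconds=60.0)
stats = {"db_reads": 0}

get_profile(conn, cache, 1, stats)          # miss: reads the database
get_profile(conn, cache, 1, stats)          # hit: served from the cache
reads_before_update = stats["db_reads"]
update_name(conn, cache, 1, "Ada L.")       # write invalidates the entry
profile = get_profile(conn, cache, 1, stats)
print(reads_before_update, stats["db_reads"], profile)
```

The time-to-live acts as a safety net for changes that bypass the API, such as batch jobs writing directly to the legacy database, where explicit invalidation never fires.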

Connection pooling, on the other hand, minimizes the overhead of creating new TCP/TLS connections for every API request. By reusing persistent connections, transaction times can drop by as much as 72% - for example, from 427ms to 118ms in high-concurrency scenarios. In one case, reusing connections in Django sped up API response times by 8–9×.

Specialized tools like HikariCP for Java, PgBouncer for PostgreSQL, and ProxySQL for MySQL can help manage connection pooling efficiently. In serverless setups, reusing database clients across invocations can prevent overloading the connection pool.
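
To make the mechanism concrete, here is a minimal sketch of what a pool does internally: connections are opened once and handed out from a queue rather than created per request. It uses Python's standard library only, with sqlite3 standing in for a networked database; the ConnectionPool class is hypothetical and omits the health checks, timeouts, and sizing logic that the dedicated tools above provide.

```python
import queue
import sqlite3
from contextlib import contextmanager

class ConnectionPool:
    """Fixed-size pool that hands out pre-opened connections."""

    def __init__(self, database, size=4):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            # check_same_thread=False lets worker threads share pooled connections.
            self._idle.put(sqlite3.connect(database, check_same_thread=False))

    @contextmanager
    def connection(self, timeout=5.0):
        # Blocks until a connection is free; raises queue.Empty on timeout.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # return it to the pool instead of closing it

    def close(self):
        while not self._idle.empty():
            self._idle.get_nowait().close()

pool = ConnectionPool(":memory:", size=2)
seen = set()
for _ in range(10):
    with pool.connection() as conn:
        conn.execute("SELECT 1").fetchone()
        seen.add(id(conn))

print(f"10 requests served by {len(seen)} connections")
pool.close()
```

Capping the pool size also protects the legacy database itself, since it bounds how many concurrent sessions the API layer can open during a traffic spike.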

Here’s a summary of key optimization techniques and their advantages:

| Optimization Technique | Primary Benefit | Best For |
|---|---|---|
| Eager Loading | Prevents N+1 query overhead | Handling relationships (e.g., Orders with Customers) |
| Composite Indexing | Speeds up multi-column filtering | Queries with multiple WHERE conditions |
| Connection Pooling | Reduces latency in establishing connections | High-concurrency environments |
| Query Projection | Cuts bandwidth and memory usage | APIs returning specific data fields |
| Horizontal Partitioning | Improves query performance on large datasets | Time-series data or massive tables |

Combining these techniques often yields the best results. For example, pairing eager loading with caching can optimize both initial data retrieval and subsequent requests, while connection pooling ensures your system can handle traffic spikes without breaking a sweat.

Using Adalo and DreamFactory for Legacy Database Integration

Using DreamFactory to Create REST APIs for Legacy Systems

DreamFactory simplifies the process of modernizing legacy systems by transforming outdated database schemas into fully documented REST APIs. It automatically generates standard endpoints - GET, POST, PUT, and DELETE - making data access more streamlined and improving query performance.

Its smart handling of relationships, including joins and subqueries, eliminates the inefficiencies caused by N+1 query issues. Features like connection pooling, filtering, and field projection help reduce the load on resource-heavy systems, ensuring smoother performance.

"Legacy databases are often so difficult to work with because they are impenetrable at first glance, with no easy way of extracting the information inside. APIs change all that by putting a kinder, gentler, more familiar face on your legacy systems." - Terence Bennett, CEO, DreamFactory

Testing on a $15 Digital Ocean Droplet with 10 MySQL requests per second demonstrated DreamFactory's efficiency: caching cut response times from 2,524 ms to just 101 ms - a 96% improvement. Additionally, companies can save an average of $45,719 per API by streamlining deployment and management.
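
The percentage follows directly from the two timings. As a quick check, the snippet below recomputes it, along with the 427ms to 118ms connection pooling figure quoted earlier in this article; the percent_reduction helper is just an illustrative name.

```python
def percent_reduction(before_ms, after_ms):
    """Return how much faster the 'after' timing is, as a percentage."""
    if before_ms <= 0:
        raise ValueError("before_ms must be positive")
    return (1.0 - after_ms / before_ms) * 100.0

caching_gain = percent_reduction(2524, 101)   # DreamFactory caching test
pooling_gain = percent_reduction(427, 118)    # connection pooling example

print(f"caching: {caching_gain:.1f}%  pooling: {pooling_gain:.1f}%")
```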

DreamFactory offers a 14-day free trial and supports native connectors for databases like MS SQL Server, Oracle, IBM DB2, and PostgreSQL. It also includes essential features like role-based access control, SSO, JWT, and encryption, making it a robust solution for API management.

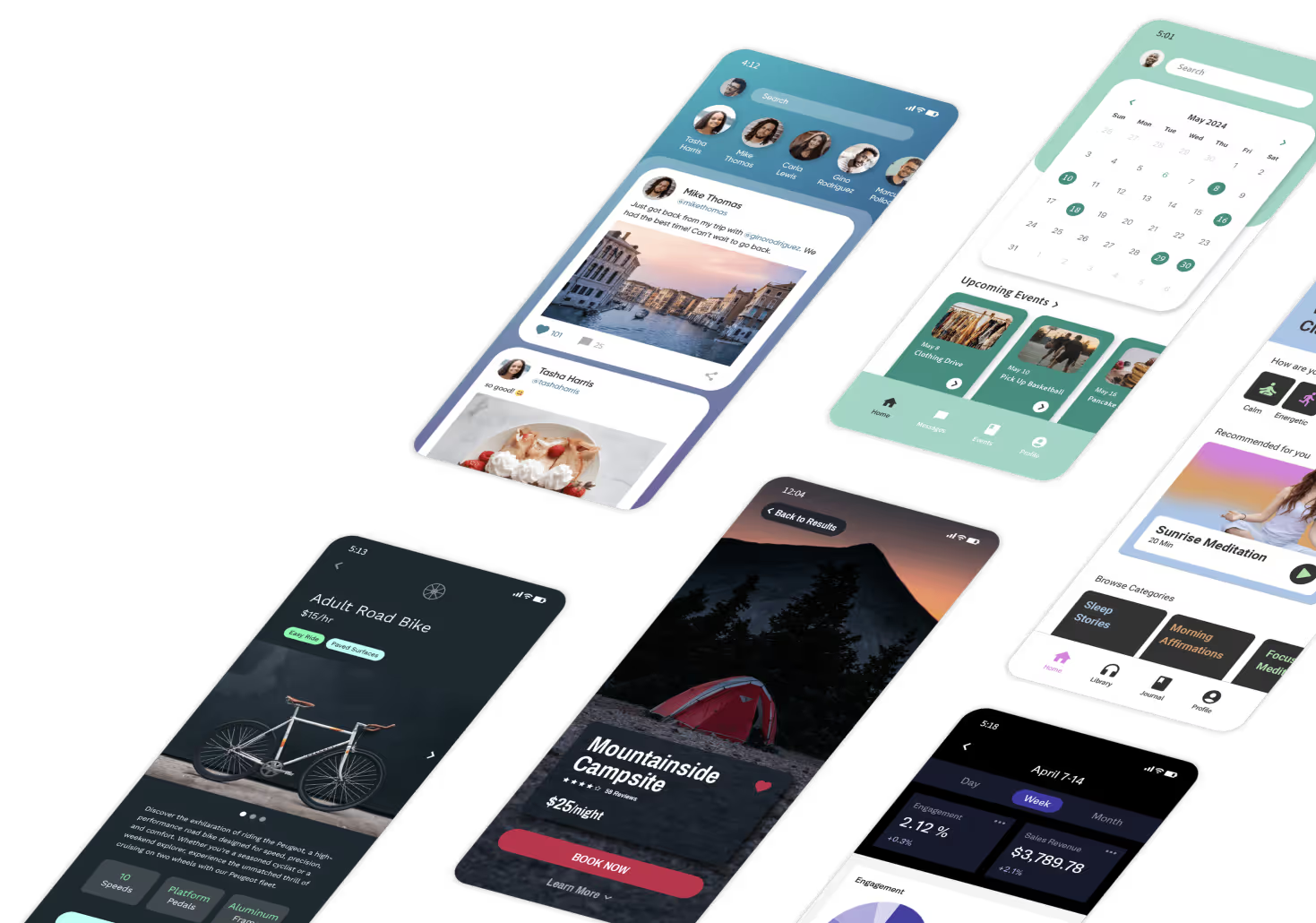

Connecting Frontend Apps with Adalo

Adalo complements DreamFactory's API layer by providing the tools to build modern user interfaces for legacy data systems. By connecting directly to DreamFactory-generated APIs, Adalo enables developers to create mobile and web applications without needing to overhaul the backend.

With its single-codebase approach, Adalo allows simultaneous deployment to web, iOS, and Android platforms, ensuring that updates are instantly reflected across all versions. For enterprise users, Adalo Blue (blue.adalo.com) adds advanced features like SSO, enterprise-grade permissions, and seamless integration with systems that lack APIs - thanks to DreamFactory.

For instance, the National Institutes of Health modernized its grant application analytics by connecting SQL databases through DreamFactory APIs. Similarly, a major US energy company overcame integration delays between Snowflake and legacy systems using this approach.

To optimize performance, offload logic to the API layer. DreamFactory's query parameters - such as ?fields, ?related, ?limit, and ?offset - let you fetch only the data you need, retrieve nested information with a single join, and paginate results efficiently. This reduces serialization time and eliminates the need for multiple sequential calls.
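
A request that combines these parameters might be assembled as in the sketch below. Only the parameter names (fields, related, limit, offset) come from the text above; the host, the service name legacy_db, the /api/v2/.../_table/... path layout, and the relationship name are assumptions for illustration and should be checked against the API documentation generated by your own DreamFactory instance.

```python
from urllib.parse import urlencode

def build_table_url(base_url, service, table,
                    fields=None, related=None, limit=None, offset=None):
    """Build a table query URL with projection, relations, and pagination."""
    params = {}
    if fields:
        params["fields"] = ",".join(fields)      # only the columns needed
    if related:
        params["related"] = ",".join(related)    # nested data in one call
    if limit is not None:
        params["limit"] = limit                  # page size
    if offset is not None:
        params["offset"] = offset                # page start
    # Assumed path layout; verify it against your instance's API docs.
    url = f"{base_url.rstrip('/')}/api/v2/{service}/_table/{table}"
    query = urlencode(params, safe=",")          # keep commas readable
    return f"{url}?{query}" if query else url

url = build_table_url(
    "https://df.example.com",                    # hypothetical host
    "legacy_db",                                 # hypothetical service name
    "orders",
    fields=["id", "total"],
    related=["customers_by_customer_id"],        # hypothetical relationship
    limit=50,
    offset=100,
)
print(url)
```

Requesting the third page of 50 orders this way returns only two columns per row plus the related customer record, rather than every column followed by a separate call per order.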

This integration strategy tackles a widespread issue: 90% of IT decision-makers say legacy systems hinder their adoption of digital tools, and 88% of digital transformation leaders have seen projects fail due to legacy database challenges. By wrapping legacy databases in REST APIs and connecting them to modern app builders like Adalo, teams can create updated user-facing applications in just days or weeks - without altering the underlying infrastructure.

Conclusion: Key Strategies for Faster API Response Times

To boost API performance, focus on connection pooling, fixing N+1 query issues, strategic indexing, and caching. These techniques tackle connection handling, query efficiency, and data retrieval speed. Start with connection pooling, which can cut transaction times by as much as 72%. Then, address N+1 query problems using eager loading or batching; batching alone can improve response times by as much as 45%. Implementing strategic indexing can reduce query times by 70–85% without altering your codebase.

Caching is another game-changer. Tools like Redis or Memcached can ease database load by 70–90% for read-heavy APIs. However, effective caching requires robust invalidation strategies to balance speed with data consistency. Cody Lord from DreamFactory puts it best:

"The fastest query is the one you don't run."

Beyond these steps, ongoing tuning is essential. As data grows, database optimizers may behave differently, so regularly reviewing execution plans with tools like EXPLAIN ANALYZE is crucial. Aim for a buffer pool hit ratio above 90% to avoid delays caused by disk reads.
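
The hit ratio is simple to derive from two counters: logical read requests and the subset that had to go to disk. The sketch below assumes MySQL's InnoDB status variables Innodb_buffer_pool_read_requests and Innodb_buffer_pool_reads as the inputs; other engines expose comparable counters under different names, and the sample numbers are made up.

```python
def buffer_pool_hit_ratio(read_requests, disk_reads):
    """Fraction of logical reads served from memory rather than disk."""
    if read_requests <= 0:
        raise ValueError("read_requests must be positive")
    return 1.0 - disk_reads / read_requests

# Hypothetical counter values, e.g. from SHOW GLOBAL STATUS on MySQL.
ratio = buffer_pool_hit_ratio(read_requests=1_000_000, disk_reads=50_000)
print(f"hit ratio: {ratio:.1%}")

if ratio < 0.90:
    print("Below target: consider a larger buffer pool or better indexes.")
```

Because these counters accumulate from server start, comparing two samples taken some minutes apart gives a more current picture than the lifetime totals.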

For teams working with older systems, combining DreamFactory's REST API generation with Adalo's app-building tools offers a practical solution. By wrapping legacy databases in REST APIs and connecting them to modern interfaces, you can roll out updated applications in a fraction of the time - think days or weeks instead of months.

FAQs

How can I detect and resolve N+1 query issues in legacy databases?

To tackle N+1 query problems in legacy databases, begin by examining your database traffic with query logs or an equivalent profiling utility, looking for the same parameterized query repeated once per record. Tools like EXPLAIN ANALYZE can then confirm whether each individual query is efficient, but they inspect one statement at a time and do not reveal the repetition on their own.

After pinpointing the issue, you can improve query efficiency by implementing strategies like eager loading, joins, or batching. These approaches help consolidate queries, cutting down on redundancy and boosting performance. Fixing N+1 problems not only speeds up API response times but also ensures more efficient data retrieval from your legacy systems.

How do caching and connection pooling improve API performance?

Caching and connection pooling are two effective ways to boost API performance.

Caching works by storing frequently accessed data, so the system doesn’t have to query the database repeatedly. This approach cuts down on response times, reduces latency, and makes handling traffic surges much easier.

Connection pooling, on the other hand, optimizes database interactions by reusing established connections instead of creating a new one for every request. This lowers overhead, allows more requests to be processed at the same time, and helps servers run more efficiently, even during peak usage.

How can DreamFactory simplify integrating legacy databases with modern applications?

DreamFactory makes it easier to connect legacy databases with modern applications by automatically creating secure REST APIs for various database types. It boosts performance with tools like database indexing, caching, and connection pooling, ensuring data retrieval is both fast and efficient.

On top of that, DreamFactory includes transaction management to keep data operations consistent and reliable. This means you can modernize and integrate older systems with new applications without sacrificing speed or security.
