Stress testing ensures your no-code app can handle extreme conditions like traffic surges or heavy usage. It identifies weak points, verifies auto-scaling, and tests recovery mechanisms. Unlike load testing, which checks performance under normal peak loads, stress testing pushes your app beyond capacity to reveal breaking points.

Key Takeaways:

- Why Stress Test? To prevent crashes during high-demand events (e.g., product launches, viral campaigns).

- What to Test: Backend (server response, database queries) and frontend (load times, user experience).

- How to Prepare: Simulate real-world conditions with realistic datasets, user flows, and network environments.

- Tools to Use: Combine protocol-based tools (e.g., JMeter, k6) for the backend with browser-based tools (e.g., Artillery's Playwright integration) for the frontend.

- Metrics to Monitor: Response time, error rates, and resource usage (CPU, memory).

Testing early and regularly is critical, particularly before major updates or high-traffic periods. Automate tests, document results, and refine your app's design to improve scalability and performance under pressure.


What is Stress Testing for No-Code Apps

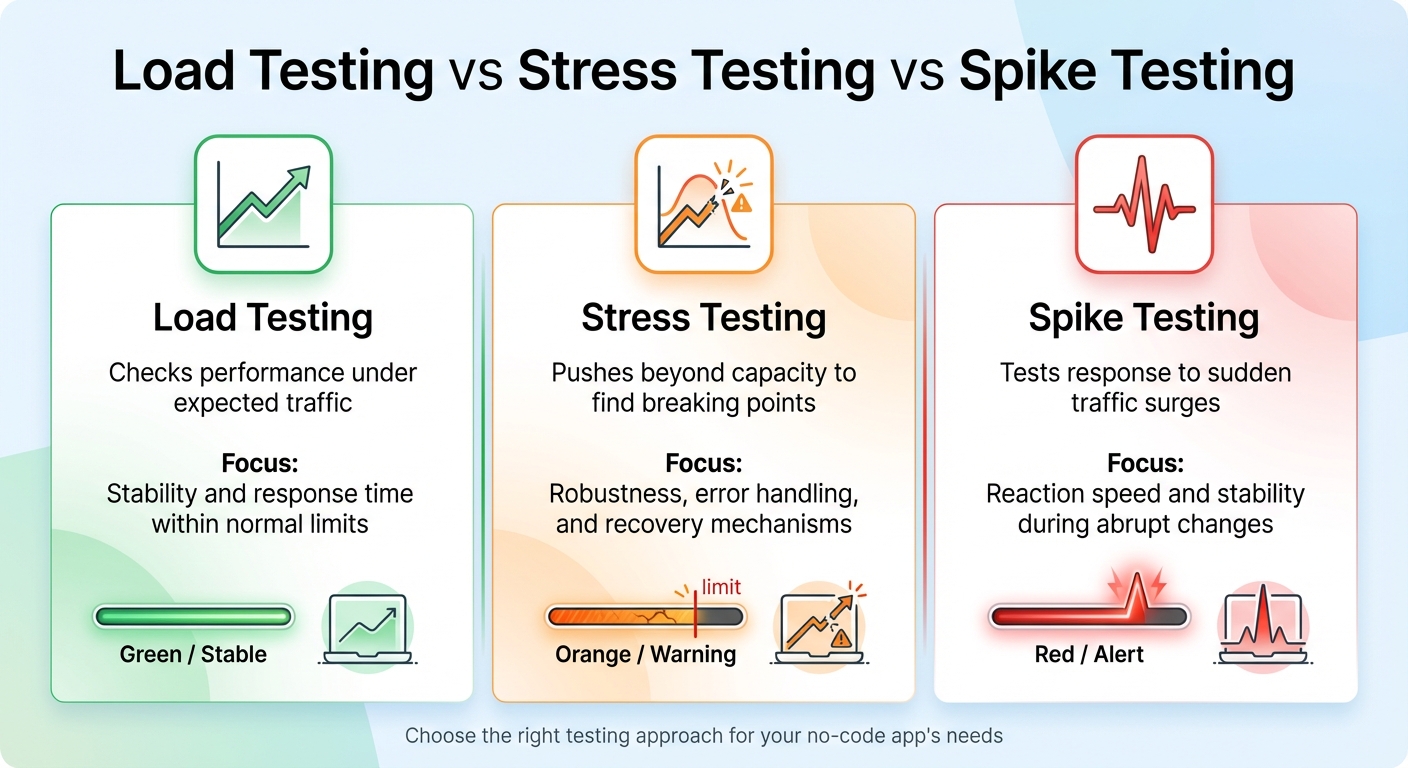

Comparison of Load Testing vs Stress Testing vs Spike Testing for No-Code Apps

Stress testing pushes your app beyond its normal limits to uncover breaking points and test how well it recovers. It’s a way to measure performance when demands exceed capacity, making it a crucial part of improving no-code app reliability. Unlike load testing, which checks performance under expected peak conditions, stress testing intentionally overloads the system to provoke failures. A related method, spike testing, focuses on sudden traffic surges - think flash sales or viral social media moments - to evaluate how quickly the app responds.

"Stress testing is an essential aspect of the software development lifecycle to ensure that applications can withstand high levels of real-world demand and extreme workloads." – AppMaster Glossary

For no-code apps, stress testing aims to pinpoint bottlenecks - issues like database contention or memory leaks - while verifying that auto-scaling features work as intended. It also ensures the app degrades gracefully under pressure, rather than crashing entirely. This process examines the entire app ecosystem, from database queries and screen logic to third-party integrations, to see how they hold up under stress.

| Testing Type | Purpose | Focus |
|---|---|---|
| Load Testing | Checks performance under expected traffic | Stability and response time within normal limits |
| Stress Testing | Pushes beyond capacity to find breaking points | Robustness, error handling, and recovery mechanisms |
| Spike Testing | Tests response to sudden traffic surges | Reaction speed and stability during abrupt changes |

Now let’s dive into how the architecture of no-code platforms influences your stress testing approach.

How No-Code Architecture Affects Stress Testing

No-code platforms work differently than traditional development environments, offering pre-built components and hosted backends. Your app isn’t just custom code - it’s a combination of database queries, visual elements, logic layers, and external API calls, all running on a managed infrastructure.

This setup introduces unique challenges. For example, platforms render data differently: iOS, Android, and PWAs rely on distinct rendering engines. External APIs, like Google Maps, come with their own rate limits and usage quotas. And if your platform’s servers are based in the US, international users might face higher latency during heavy traffic.

While no-code platforms speed up development, they also limit how much you can tweak behind the scenes. You can’t fine-tune database queries or server settings like you would in a custom-coded app. Stress testing becomes your way to understand how the platform’s infrastructure performs under pressure. It can also highlight areas where you might need to adjust your app’s design - like simplifying overly complex logic or reducing unnecessary API calls.

Knowing these platform-specific factors helps you identify the best times and methods for stress testing.

When You Need to Stress Test Your App

Stress testing is critical before moments of high demand. Whether it’s a product launch, a viral marketing campaign, or a seasonal rush like Black Friday, these events call for rigorous testing.

It’s also important after making significant changes to your app. New integrations, redesigned workflows, or added features can introduce new bottlenecks. For example, integrating external services like payment processors or inventory systems might expose your app to issues if those services experience outages or delays.

If your app has unpredictable usage patterns, regular stress testing is a smart move. For instance, a fitness app that suddenly gains popularity or a B2B tool experiencing a surge in new users should be prepared for unexpected spikes. Testing helps ensure your app can handle these scenarios, keeping the user experience smooth and reliable.

How to Prepare for Stress Testing

Getting ready for stress testing means setting clear objectives, creating a realistic testing environment, and identifying all your system's dependencies.

Set Your Testing Goals and Metrics

Start by defining what "failure" looks like for your app. Stress testing is all about understanding how your app behaves when pushed beyond normal limits. For instance, you might set a requirement that completing a "place order" action should take no more than 2 seconds.

Break your metrics into two categories: backend and frontend. Backend metrics focus on things like server response times and asset processing. Frontend metrics, on the other hand, measure the complete user experience - how long it takes for the interface to load and become usable. You should also establish acceptable error rates, aiming for less than 0.5% under normal conditions and below 1% during peak loads. Additionally, set resource utilization limits, such as keeping CPU usage under 70% to leave room for unexpected traffic spikes.
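One way to make these goals concrete is to write them down as data your test harness can check. The sketch below is a minimal Python example, assuming your tooling produces a per-run summary dictionary with the hypothetical keys `place_order_s`, `frontend_ready_s`, `error_rate`, and `cpu_pct`. The 3-second frontend readiness target is an assumed value; the other limits come from the text above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StressGoals:
    max_place_order_s: float = 2.0         # backend: "place order" finishes within 2 s
    max_frontend_ready_s: float = 3.0      # frontend: UI usable within 3 s (assumed value)
    max_error_rate_normal: float = 0.005   # under 0.5% errors at normal load
    max_error_rate_peak: float = 0.01      # under 1% errors at peak load
    max_cpu_pct: float = 70.0              # leave CPU headroom for unexpected spikes


def find_failures(goals: StressGoals, run: dict, peak: bool) -> list[str]:
    """Return the goals this run missed; an empty list means the run passed."""
    failures = []
    if run["place_order_s"] > goals.max_place_order_s:
        failures.append(f"backend: place order took {run['place_order_s']:.2f}s")
    if run["frontend_ready_s"] > goals.max_frontend_ready_s:
        failures.append(f"frontend: UI ready after {run['frontend_ready_s']:.2f}s")
    # The allowed error rate depends on whether this run simulated normal or peak load.
    limit = goals.max_error_rate_peak if peak else goals.max_error_rate_normal
    if run["error_rate"] >= limit:
        failures.append(f"errors: {run['error_rate']:.2%} (limit {limit:.2%})")
    if run["cpu_pct"] >= goals.max_cpu_pct:
        failures.append(f"cpu: {run['cpu_pct']:.0f}% (limit {goals.max_cpu_pct:.0f}%)")
    return failures
```

Keeping backend and frontend checks separate in the output makes it obvious which side of the app broke first.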

Once your goals are clear, you’re ready to build a testing environment that mimics real-world conditions.

Create Your Testing Environment

To uncover performance issues, your test environment must closely match your production setup. Use the same hardware specifications - CPU, memory, and disk space - and ensure software versions and configurations are identical. If your production database contains millions of records, your test environment should also include large, anonymized datasets to reveal problems like query delays or database contention.

Design your tests around actual user behavior instead of repetitive actions. Map out how users interact with your app - browsing products, adding items to a cart, and completing checkout - and create test scenarios based on these flows. Add randomized delays, known as "think time", to simulate natural pauses in user activity. A hybrid testing approach can be helpful here: use protocol-based tools to generate heavy backend loads while running a smaller number of browser-based tests to capture the user experience. Don’t forget to simulate real network conditions, like latency or bandwidth limitations, especially if your servers are in the U.S. but serve global users.
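A minimal sketch of flow-based scenarios with think time, in Python. The journey names, step names, and weights are hypothetical placeholders for the flows you map from real usage; a load tool would execute each step and then pause for the paired think time.

```python
import random

# Hypothetical journeys: (ordered steps, share of sessions that follow this journey).
JOURNEYS = {
    "browse_only": (["home", "product_list", "product_detail"], 0.6),
    "add_to_cart": (["home", "product_list", "product_detail", "cart"], 0.3),
    "checkout": (["home", "product_detail", "cart", "checkout", "place_order"], 0.1),
}


def plan_session(rng: random.Random, min_think_s: float = 1.0,
                 max_think_s: float = 5.0) -> tuple[str, list[tuple[str, float]]]:
    """Pick a journey by weight and pair each step with a randomized think time."""
    names = list(JOURNEYS)
    weights = [JOURNEYS[name][1] for name in names]
    chosen = rng.choices(names, weights=weights, k=1)[0]
    steps = JOURNEYS[chosen][0]
    # A uniform pause approximates a user reading the screen before the next action.
    return chosen, [(step, rng.uniform(min_think_s, max_think_s)) for step in steps]


rng = random.Random(42)  # seeded so a run can be reproduced
journey, plan = plan_session(rng)
```

Seeding `random.Random` makes a run reproducible, which helps when you compare results before and after a change.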

Map Your Infrastructure Dependencies

Understanding your system’s dependencies is key to spotting bottlenecks. Every database query and complex operation can impact performance. Create a visual map of your system, highlighting all components - such as APIs, webhooks, databases, and third-party services - to see how data flows through your app.

For example, a no-code SaaS backend tested in July 2025 saw its average response times jump from 9.62 seconds to 24.45 seconds under heavy stress.

Pay attention to middleware rate limits and reliability. Also, remember that different devices and browsers - whether iOS, Android, or progressive web apps - process data in unique ways, which can affect how users perceive performance.

How to Run Stress Tests

Once your preparation is complete, it's time to dive into running your stress tests. This involves choosing the right tools, setting up realistic test parameters, and keeping a close eye on your app's performance as the test unfolds.

Pick Your Stress Testing Tools

With your testing environment ready, the next step is selecting tools that fit your app's needs and your team's skill set. For apps that rely heavily on backend interactions, protocol-based tools like JMeter, k6, and Locust are excellent options. These tools simulate server traffic using HTTP/S requests and can handle scenarios involving hundreds of thousands of users. If your team is experienced in JavaScript, k6 is a great choice: it is open source, and Grafana Cloud offers a free tier for running k6 tests in the cloud. For Python teams, Locust is a natural fit, while JMeter provides a GUI for those who prefer a visual interface.

However, protocol-based tools don't cover everything. They skip browser-level work like rendering, JavaScript execution, and how the app visually responds to user actions. That's where browser-based tools come into play. Tools such as Loadster Browser Bots and Artillery (through its Playwright integration) simulate real user actions by running headless browsers. Keep in mind, though, that these tools are resource-intensive, so large tests usually require distributing load generation across cloud infrastructure. Artillery supports that model at scale: in 2024, the team at Code Wizards used Artillery with AWS serverless infrastructure to simulate two million concurrent players for Heroic Labs' Nakama platform.

For no-code apps, a combined approach works best. Use protocol-level scripts to generate most of the load on your backend while running a smaller set of browser-based tests to check the frontend experience. Adalo users can also run the platform's built-in X-Ray tool to catch performance issues before they impact users.

Start small with a smoke test. This involves running a minimal load - fewer than five virtual users (VUs) - for just a few minutes to ensure your setup and scripts are functioning properly before scaling up. Also, avoid running tests on third-party services like Google Analytics unless you have explicit permission, as this could violate their terms of service.
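As an illustration, here is a minimal smoke-test harness using only the Python standard library. It is a sketch rather than a replacement for JMeter, k6, or Locust: `request_fn` is any callable that performs one request and returns `True` on success, and the staging URL in the usage note is hypothetical.

```python
import threading
import time
import urllib.request
from typing import Callable


def http_get_ok(url: str, timeout_s: float = 10.0) -> bool:
    """One GET request; urlopen raises on 4xx/5xx, so reaching the return means success."""
    with urllib.request.urlopen(url, timeout=timeout_s) as resp:
        resp.read()
        return resp.status < 400


def smoke_test(request_fn: Callable[[], bool], vus: int = 3,
               duration_s: float = 120.0, pause_s: float = 0.5) -> dict:
    """Run a minimal load (fewer than five VUs) to check the setup before scaling up."""
    if not 0 < vus < 5:
        raise ValueError("a smoke test should use fewer than five virtual users")
    results = {"ok": 0, "failed": 0}
    lock = threading.Lock()
    deadline = time.monotonic() + duration_s

    def virtual_user() -> None:
        while time.monotonic() < deadline:
            try:
                ok = request_fn()
            except Exception:  # timeouts, connection errors, HTTP 4xx/5xx
                ok = False
            with lock:
                results["ok" if ok else "failed"] += 1
            time.sleep(pause_s)  # keep the smoke test gentle

    threads = [threading.Thread(target=virtual_user) for _ in range(vus)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

`smoke_test(lambda: http_get_ok("https://staging.example.com/health"), vus=3, duration_s=180)` runs three virtual users for three minutes. Any failures at this size point to a broken script or environment rather than a capacity limit, and the harness should only target systems you own.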

Configure Your Test Parameters

The key to meaningful results lies in setting realistic test parameters. Determine the number of virtual users (VUs) to simulate, the duration of the test, and how traffic will scale up and down. Most stress tests last between 5 and 60 minutes to uncover peak load issues.

A three-stage traffic pattern works well: gradually ramp up the load, hold a steady peak, and then ramp down. For stress tests, aim to exceed your app's typical load by 50% to 100% or more, depending on your risk tolerance. For example, if your app typically handles 1,000 concurrent users, test it with 1,500 to 2,000 or more concurrent users to see how it holds up.
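The three-stage pattern can be expressed as a list of stages, similar in spirit to the `stages` option in k6. The Python sketch below assumes each stage is a `(duration_seconds, target_vus)` pair of our own choosing and interpolates linearly between targets, with the peak set to the typical load plus an overload margin.

```python
def stress_peak(typical_users: int, overload: float) -> int:
    """Typical load plus an overload margin (0.5 = +50%, 1.0 = +100%)."""
    return round(typical_users * (1 + overload))


def target_vus(stages: list[tuple[float, float]], t: float) -> float:
    """Virtual-user target at time t (seconds), interpolated linearly within each stage.

    Each stage is (duration_s, target_vus); durations must be positive.
    """
    t = max(t, 0.0)
    start_vus, elapsed = 0.0, 0.0
    for duration_s, end_vus in stages:
        if t <= elapsed + duration_s:
            progress = (t - elapsed) / duration_s
            return start_vus + progress * (end_vus - start_vus)
        start_vus, elapsed = end_vus, elapsed + duration_s
    return 0.0  # past the final stage: the test has finished


peak = stress_peak(1_000, overload=1.0)  # 2,000 VUs
stages = [
    (600, peak),   # ramp up over 10 minutes
    (1200, peak),  # hold the peak for 20 minutes
    (300, 0),      # ramp down over 5 minutes
]
```

This run lasts 35 minutes, inside the 5 to 60 minute window mentioned above, and `target_vus(stages, 300)` returns 1000.0, halfway up the ramp.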

Make sure your scripts can handle dynamic values instead of relying on hard-coded data. Many no-code platforms generate dynamic values for each session, so your scripts need to adapt to these changes.
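The usual technique here is called correlation: capture a dynamic value from one response and send it with the next request. This Python sketch assumes a hypothetical hidden form field named `csrf_token`; the field name and markup on your platform will differ, so treat the regular expression as a placeholder.

```python
import re

# Placeholder pattern for a per-session token embedded in the page (hypothetical markup).
TOKEN_RE = re.compile(r'name="csrf_token"\s+value="([^"]+)"')


def extract_token(html: str) -> str:
    """Pull the dynamic token out of a response instead of hard-coding it in the script."""
    match = TOKEN_RE.search(html)
    if match is None:
        raise ValueError("token not found; the page layout may have changed")
    return match.group(1)


def build_next_request(html: str, payload: dict) -> dict:
    """Attach the freshly extracted token to the next request body."""
    return {**payload, "csrf_token": extract_token(html)}
```

Failing loudly when the token is missing matters: a script that silently reuses a stale value produces a wall of errors that look like a capacity problem but are really a scripting bug.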

Monitor Performance During Tests

Once your test parameters are set, shift your focus to real-time monitoring. Use live dashboards to track performance and identify potential issues as they arise. Pay attention to three core metrics: latency (response time), availability (error rate), and throughput (requests per second).

To get a complete picture, integrate your load testing tool with backend monitoring systems like Datadog or CloudWatch. These tools can reveal how server-side components respond to traffic spikes. Keep an eye on resource usage - CPU, memory, disk I/O, and network activity. For instance, if CPU usage consistently exceeds 90%, it might be time to consider scaling or optimizing your code. Separately monitor frontend and backend metrics to pinpoint where issues originate.
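"Consistently" needs a concrete definition before a script can act on it. The Python sketch below treats a breach as a run of consecutive samples above the limit. The five-sample window is an assumption (for example, five one-minute samples exported from your monitoring tool), not a value from the text.

```python
from typing import Optional


def first_sustained_breach(cpu_samples: list[float], limit_pct: float = 90.0,
                           min_consecutive: int = 5) -> Optional[int]:
    """Index where CPU starts a run of at least `min_consecutive` samples above `limit_pct`.

    Returns None if usage never stays above the limit long enough; short spikes are ignored.
    """
    run = 0
    for i, value in enumerate(cpu_samples):
        run = run + 1 if value > limit_pct else 0
        if run >= min_consecutive:
            return i - min_consecutive + 1
    return None
```

Ignoring short spikes avoids reacting to normal burstiness, while the returned index tells you when in the test the sustained pressure began.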

Set up automated thresholds to immediately flag or fail tests if performance falls below your Service Level Objectives (SLOs). For example, you might require that 95% of requests complete in under 200 milliseconds. Configure your testing tool to save traces, screenshots, and request/response data when errors occur - this can save significant time during troubleshooting. For apps with heavy database usage, track query times and cache hit rates under load to identify bottlenecks early. Effective monitoring not only highlights issues but also guides the next steps in optimizing your app's performance.
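In k6, this kind of rule is typically written as a threshold such as `http_req_duration: ['p(95)<200']`. To show the underlying logic, here is a tool-agnostic Python sketch that checks the "95% under 200 ms" SLO and keeps the request/response pair for every failed call. The `SloMonitor` class and its method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class SloMonitor:
    """Checks 'X% of requests under Y ms' and keeps evidence for failed requests."""
    limit_ms: float = 200.0
    required_share: float = 0.95
    latencies_ms: list[float] = field(default_factory=list)
    failures: list[dict[str, Any]] = field(default_factory=list)

    def record(self, latency_ms: float, status: int,
               request: Optional[dict] = None, response: Optional[str] = None) -> None:
        self.latencies_ms.append(latency_ms)
        if status >= 400:
            # Save the request/response pair so the failure can be reproduced later.
            self.failures.append({"status": status, "latency_ms": latency_ms,
                                  "request": request, "response": response})

    def slo_met(self) -> bool:
        if not self.latencies_ms:
            return False  # no data counts as a failed run, not a pass
        fast = sum(1 for ms in self.latencies_ms if ms < self.limit_ms)
        return fast / len(self.latencies_ms) >= self.required_share
```

A harness could call `slo_met()` at the end of the run (or periodically) and fail the test when it returns `False`, attaching `failures` to the report.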


How to Analyze Results and Fix Performance Issues

When reviewing your testing data, focus on three key metrics: response time (including the 95th percentile), throughput (requests per second), and failure rates (percentage of errors or timeouts). The 95th percentile is especially helpful because it gives a clearer picture of what most users experience by excluding extreme outliers.

Compare these metrics to your baseline performance to identify patterns of degradation. For example, if your app typically responds in under 2 seconds but stress testing reveals response times exceeding 5 seconds, you’ve pinpointed a serious issue. Keep in mind that geographic factors can also play a role. If your app's servers are U.S.-based and you're testing users in other regions, higher latency is expected and should be factored into your analysis. These metrics help you zero in on the areas where performance problems are occurring.
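A minimal Python sketch of this analysis, assuming you have per-request latencies, an error count, and the test duration from your tool's raw output. It computes the 95th percentile with the nearest-rank method. It then flags metrics that degrade beyond an assumed 2x baseline ratio or exceed the 1% peak error budget mentioned earlier.

```python
def p95(values: list[float]) -> float:
    """95th percentile using the nearest-rank method (no interpolation)."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), 1-based
    return ordered[rank - 1]


def summarize(latencies_s: list[float], errors: int, duration_s: float) -> dict:
    """Core stress metrics for one run; `errors` counts failed requests among the samples."""
    return {
        "p95_s": p95(latencies_s),
        "throughput_rps": len(latencies_s) / duration_s,
        "failure_rate": errors / len(latencies_s),
    }


def degraded_metrics(run: dict, baseline: dict, max_ratio: float = 2.0,
                     max_failure_rate: float = 0.01) -> list[str]:
    """Metrics that degraded past the assumed ratio or the 1% peak error budget."""
    worse = []
    if run["p95_s"] > baseline["p95_s"] * max_ratio:
        worse.append("p95_s")
    if run["throughput_rps"] < baseline["throughput_rps"] / max_ratio:
        worse.append("throughput_rps")
    if run["failure_rate"] > max_failure_rate:
        worse.append("failure_rate")
    return worse
```

With the numbers from the example above (a 2-second baseline and a 5-second result under stress), the p95 check fires because 5 is more than twice 2.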

Find and Fix Bottlenecks

Performance bottlenecks often arise from calculation overload or inefficient data retrieval. A common issue is real-time calculations, such as counting filtered records every time a screen loads. These tasks can put significant strain on your server, especially as the volume of records grows. Stress tests are invaluable for identifying these kinds of problems.

Pay attention to screens with load times exceeding 3 seconds, slow query runtimes (over 3 seconds), or large payloads (more than 1.6 MB). For example, if you’re using lists without specifying a "Maximum number of items", your app might be fetching thousands of unnecessary records. Always limit list retrieval - for instance, show only the latest 10 products instead of loading your entire catalog. Another potential issue is the auto-refresh feature, which reloads and filters data every 5–10 seconds. During high-traffic periods, this can create avoidable server strain.
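To see why list limits matter, you can measure the serialized size of what a screen would fetch. This Python sketch uses a synthetic, hypothetical product catalog and treats 1 MB as 1,000,000 bytes of JSON. On a real platform, the list's "Maximum number of items" setting applies the limit, not your own code.

```python
import json


def payload_mb(records: list[dict]) -> float:
    """Approximate response size as UTF-8 JSON, in megabytes (1 MB = 1,000,000 bytes)."""
    return len(json.dumps(records).encode("utf-8")) / 1_000_000


def latest(records: list[dict], n: int = 10, key: str = "created_at") -> list[dict]:
    """Keep only the n newest records instead of the whole collection."""
    return sorted(records, key=lambda r: r[key], reverse=True)[:n]


# Synthetic catalog: 20,000 products with a 100-character description each.
catalog = [
    {"id": i, "created_at": i, "name": f"Product {i}", "description": "x" * 100}
    for i in range(20_000)
]

full_mb = payload_mb(catalog)                  # well above the 1.6 MB warning level
limited_mb = payload_mb(latest(catalog, 10))   # a few kilobytes
```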

Complex app architecture can also lead to slowdowns. Screens overloaded with hidden components, deeply nested data (more than 4 levels), or many-to-many relationships often suffer from rendering delays. Simplifying your architecture can help: break down complex screens into multiple simpler ones, limit nesting depth to 1–3 levels, and avoid overly intricate data structures. The table below highlights when performance metrics become problematic:

| Metric | Healthy Range | Warning | Critical |
|---|---|---|---|
| Initial Load Time | < 2 seconds | > 3 seconds | > 5 seconds |
| Query Runtime | < 1 second | > 3 seconds | > 5 seconds |
| Payload Size | < 1 MB | > 1.6 MB | > 3 MB |
| Nesting Depth | 1–3 levels | 4 levels | > 4 levels |
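If your monitoring exports these numbers, the table translates directly into a small lookup. In this Python sketch the limits are copied from the table. The "borderline" label for values between the Healthy and Warning columns (for example, a 2.5-second load time) is an assumption, because the table does not classify that gap.

```python
# (healthy_below, warning_above, critical_above), copied from the table.
LIMITS = {
    "initial_load_s": (2.0, 3.0, 5.0),
    "query_runtime_s": (1.0, 3.0, 5.0),
    "payload_mb": (1.0, 1.6, 3.0),
    "nesting_depth": (4, 3, 4),  # 1-3 levels healthy, 4 warning, more than 4 critical
}


def classify(metric: str, value: float) -> str:
    healthy_below, warning_above, critical_above = LIMITS[metric]
    if value > critical_above:
        return "critical"
    if value > warning_above:
        return "warning"
    if value < healthy_below:
        return "healthy"
    return "borderline"  # between the Healthy and Warning columns (not covered by the table)
```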

Improve Your App's Scalability

Once you’ve identified bottlenecks, the next step is making your app more scalable. Start by refactoring your data architecture. For example, store calculated values in dedicated number properties that only update when the underlying data changes. Instead of filtering a list to count active users every time someone opens a dashboard, maintain an "active_user_count" field that increments or decrements as users log in or out. This approach significantly reduces server load on high-traffic screens.
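A minimal Python sketch of this pattern, assuming a hypothetical in-memory store where each user has an active flag. The stored `active_user_count` changes only when a flag actually flips, so a dashboard can read one number instead of scanning every user the way `count_by_filter` does.

```python
from dataclasses import dataclass, field


@dataclass
class UserStore:
    users: dict[int, bool] = field(default_factory=dict)  # user_id -> is_active
    active_user_count: int = 0  # stored value the dashboard reads directly

    def set_active(self, user_id: int, active: bool) -> None:
        """Log a user in (True) or out (False), updating the counter only on real changes."""
        was_active = self.users.get(user_id, False)
        self.users[user_id] = active
        if active and not was_active:
            self.active_user_count += 1
        elif was_active and not active:
            self.active_user_count -= 1

    def count_by_filter(self) -> int:
        """The expensive alternative: scan every user on every screen load."""
        return sum(1 for is_active in self.users.values() if is_active)
```

Guarding against repeated logins and logouts keeps the stored number in sync with what a full filter would return.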

Simplify your data relationships by avoiding many-to-many structures. Instead, store related IDs as text to eliminate the need for complex joins. Additionally, limit the use of auto-refresh to screens where real-time updates are absolutely necessary. Most apps don’t require data to refresh every 5–10 seconds on every screen.
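Auto-refresh load is easy to estimate: each concurrent user on an auto-refreshing screen sends one refresh per interval. The Python sketch below does that arithmetic; `queries_per_refresh` is an assumed parameter for screens that reload several lists at once.

```python
def auto_refresh_rps(concurrent_users: int, interval_s: float,
                     queries_per_refresh: int = 1) -> float:
    """Requests per second generated by auto-refresh alone, before any user interaction."""
    return concurrent_users * queries_per_refresh / interval_s


for interval in (5, 10):
    # prints "5s refresh: 400 requests/s" then "10s refresh: 200 requests/s"
    print(f"{interval}s refresh: {auto_refresh_rps(2_000, interval):.0f} requests/s")
```

So 2,000 users on a screen that refreshes every 5 seconds generate 400 requests per second before anyone taps a button. Stretching the interval to 10 seconds halves that, and turning auto-refresh off removes it.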

Finally, test your optimizations across all platforms you’re targeting. An optimization that works well on iOS might underperform on Android or the web, and vice versa. As experts often say, "No-code doesn't mean no work". Scalability requires thoughtful design choices rather than relying on the platform to handle growth automatically. After each optimization, run incremental stress tests to measure improvements and ensure your changes address the identified issues effectively.

Stress Testing Best Practices

To ensure your app can handle unexpected challenges, adopting structured and consistent stress testing practices is key. Regular testing not only helps catch performance issues early but also minimizes the risk of costly fixes down the line.

Test Early and Often

Stress testing isn't just for the final stages of development. It should be part of your process during critical moments - before major releases, after infrastructure changes, ahead of peak usage periods, and following bug fixes. Each of these scenarios can impact your app's performance, and testing at these points helps identify issues while they're still manageable.

Catching bottlenecks early is far easier than dealing with them after they've snowballed into larger architectural problems. Addressing these issues during the initial stages saves time and effort compared to fixing fundamental flaws later.

Automate Your Stress Tests

Integrating automated stress tests into your CI/CD pipeline is essential for keeping up with modern development speeds. Manual testing simply can't match the pace, and automation ensures every update is vetted for stability before it reaches users.

"Automate stress tests to run regularly as part of your deployment pipeline. Early detection of performance regressions prevents costly rollbacks." - GoReplay

Set clear performance benchmarks, such as response times under 2 seconds for critical tasks, error rates below 1% during peak usage, and CPU utilization under 70%. Use automated tools to enforce these targets, eliminating the need for manual review of each test report. For realistic testing, avoid running stress tests from local machines or CI/CD nodes - opt for cloud-based distributed testing to simulate real-world conditions effectively.
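As a sketch of enforcing these targets in a pipeline step, the Python script below reads a results summary and returns a non-zero exit code when any benchmark is breached. The JSON file and its keys (`critical_p95_s`, `peak_error_rate`, `cpu_pct`) are a hypothetical format; map them to whatever your load-testing tool exports.

```python
import json
import sys

# Benchmarks from the text: critical tasks under 2 s, errors under 1% at peak, CPU under 70%.
BENCHMARKS = {
    "critical_p95_s": 2.0,
    "peak_error_rate": 0.01,
    "cpu_pct": 70.0,
}


def check(summary: dict) -> list[str]:
    """Names of benchmarks the summary breaches (each limit is an exclusive upper bound)."""
    return [name for name, limit in BENCHMARKS.items() if summary[name] >= limit]


def main(path: str) -> int:
    """Read a results file and return a process exit code: 0 = pass, 1 = fail."""
    with open(path, encoding="utf-8") as fh:
        summary = json.load(fh)
    breaches = check(summary)
    for name in breaches:
        print(f"FAIL {name}: {summary[name]} (limit {BENCHMARKS[name]})", file=sys.stderr)
    return 1 if breaches else 0
```

A CI step would call `sys.exit(main("results.json"))` after the cloud test run finishes. Most CI systems treat the non-zero exit code as a failed build, so no one has to read the report to block a regression.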

This automation supports your broader strategy by ensuring every update undergoes rigorous performance checks.

Document Your Test Results

A centralized repository for all test specifications, configurations, and results makes test scripts easy to reuse and lets teams track progress over time. Include logs, screenshots, and metrics in your documentation to identify failures and trends more effectively.

Automating the documentation process can also be a huge time-saver. Configure your testing platform to log tickets in tools like Jira or Azure DevOps whenever failures occur. Include all relevant environment data and reproducible steps. This creates a clear audit trail, ensuring accountability and helping teams analyze how changes impact performance. Keep tabs on key metrics like response times, throughput, error rates, CPU/memory usage, and transaction success rates. These records are invaluable for troubleshooting and demonstrating the success of optimizations down the road.
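For Jira, automated ticket creation usually goes through the REST API's create-issue endpoint. This Python sketch builds a Jira REST API v2 request body with the failure details, environment data, and reproduction steps, and prepares (but does not send) the request. The project key, base URL, `Bug` issue type, and bearer-token authentication are assumptions. Jira Cloud, for example, commonly uses basic authentication with an API token instead.

```python
import json
import urllib.request


def jira_bug_payload(project_key: str, test_name: str, failures: list[str],
                     env: dict[str, str], repro_steps: list[str]) -> dict:
    """Build a create-issue body for a failed stress test (v2 accepts a plain-text description)."""
    description = "\n".join(
        ["Failures:"] + [f"- {f}" for f in failures]
        + ["", "Environment:"] + [f"- {k}: {v}" for k, v in sorted(env.items())]
        + ["", "Steps to reproduce:"] + [f"{i}. {s}" for i, s in enumerate(repro_steps, 1)]
    )
    return {
        "fields": {
            "project": {"key": project_key},
            "summary": f"Stress test failed: {test_name}",
            "description": description,
            "issuetype": {"name": "Bug"},
        }
    }


def build_request(base_url: str, payload: dict, token: str) -> urllib.request.Request:
    """Prepare (but do not send) the POST to /rest/api/2/issue."""
    return urllib.request.Request(
        f"{base_url}/rest/api/2/issue",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
        method="POST",
    )
```

Sending is then a single `urllib.request.urlopen(request)` call from your test runner's failure hook.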

| Test Type | Duration | Primary Goal | Issues Uncovered |
|---|---|---|---|
| Spike (Flash) Test | < 30 minutes | Test response to bursts | Autoscaling lag, startup time issues, CPU bottlenecks |
| Soak (Endurance) Test | 6–24 hours | Test long-term stability | Memory leaks, resource saturation, unclosed connections |
| Baseline Test | Ongoing | Establish reference points | Performance regressions, capacity planning needs |

Conclusion

Stress testing plays a crucial role in ensuring no-code apps can handle growth and unpredictable surges in user activity. Every app has a limit where performance begins to falter under heavy load. The apps that thrive during high-demand moments are the ones whose breaking points have been identified and addressed long before real users encounter them.

No-code platforms rely on a mix of components - databases, APIs, and third-party services - all of which need to function seamlessly under pressure. This guide has outlined how stress testing helps pinpoint weak spots, confirm that auto-scaling systems kick in as expected, and provide data for smarter capacity planning.

To build reliability, start testing early and often. Use automated tools, simulate realistic user behavior, and keep detailed records of your findings. Begin with baseline tests to measure normal performance, implement spike tests ahead of major campaigns, and run soak tests to catch gradual issues like memory leaks. These approaches ensure your app remains dependable as your user base expands.

"Stress testing helps teams uncover how software behaves when pushed beyond normal capacity, revealing weaknesses before they affect users." - BrowserStack

FAQs

What’s the difference between stress testing and load testing for no-code apps?

Stress testing takes your app to its limits, pushing it beyond normal operations to find its breaking point and assess how well it bounces back from failure. This test mimics extreme scenarios, like sudden surges in users or unexpected spikes in resource usage.

In contrast, load testing measures how your app performs under typical traffic conditions. It checks for stability, responsiveness, and adherence to performance benchmarks during everyday use.

Both are crucial for ensuring your no-code app can handle what’s thrown at it. Stress testing zeroes in on how it holds up under pressure, while load testing ensures it stays dependable during regular operation.

How do I prepare my no-code app for stress testing?

To get your no-code app ready for stress testing, start by fine-tuning its performance to handle heavy traffic. This means optimizing database queries, implementing caching wherever possible, and cutting down on unnecessary data loads. These steps will help your app run smoothly, even when demand spikes.

Start testing early in the development process, using scenarios that mimic real-world usage. Set clear performance benchmarks - like response times, throughput, and error rates - so you can spot potential weak points. Simulating high-stress conditions will give you the chance to fix issues before they become bigger problems.

By pairing performance tweaks with realistic stress tests, you can create a no-code app that stays dependable, even under pressure, and delivers a seamless experience for users.

What are the best tools for stress testing the backend and frontend of no-code apps?

To test the limits of both the backend and frontend of no-code apps, a few standout tools can help you get the job done effectively.

For backend testing, protocol-based tools such as JMeter, k6, and Locust generate heavy HTTP traffic against your APIs and server logic; Locust, for example, uses Python scripts to replicate real-world user behavior. Insomnia and AllStack can also help here, with AllStack supporting load tests built from API collections.

On the frontend side, browser-based tools such as Artillery (with its Playwright integration) and Loadster Browser Bots run headless browsers to gauge how responsive your app feels under load. Using both kinds of tools together provides a thorough way to stress test your app and prepare it for intense usage.
