Event-driven integration makes legacy systems faster and more efficient by enabling them to communicate asynchronously with modern applications. Instead of waiting for slow responses, systems publish events that others subscribe to, ensuring smoother workflows and better user experiences. Here's why it works:

- Asynchronous Communication: Legacy systems can process tasks in the background without delaying modern apps.

- Decoupled Architecture: Systems operate independently, reducing downtime and failures.

- Scalable and Resilient: Event brokers handle spikes in traffic, retries, and outages seamlessly.

For example, a manufacturer reduced order processing time from 12 seconds to under 1 second by switching to an event-driven model. Tools like Kafka or RabbitMQ act as intermediaries, routing events and delivering them reliably to subscribers. Gradual modernization strategies like the Leave-and-Layer and Strangler Fig patterns allow businesses to upgrade systems without major risks. With approaches like Change Data Capture (CDC), even systems without modern APIs can join the event-driven ecosystem. This approach cuts delays, boosts scalability, and improves efficiency - all while keeping legacy systems intact.


Benefits of Event-Driven Integration


How Asynchronous Communication Reduces Downtime

With asynchronous communication, a legacy system can publish an event and immediately move forward without waiting for a reply. During periods of high traffic, event brokers step in to queue events, ensuring they are delivered at a rate the legacy system can handle. If a downstream service goes offline, the broker holds onto the events and delivers them once the service is back online.
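The publish-and-move-on behavior described above can be sketched with Python's standard library. This is a minimal illustration, not a production setup: an in-memory `queue.Queue` stands in for a real broker, and the names `publish`, `legacy_consumer`, and the 10 ms delay are assumptions made for the example.

```python
import queue
import threading
import time

# Hypothetical in-memory stand-in for a broker queue (not Kafka or RabbitMQ).
events = queue.Queue()
processed = []

def legacy_consumer():
    """Drain the queue at the pace the slow legacy system can sustain."""
    while True:
        event = events.get()
        if event is None:  # sentinel: no more events will arrive
            break
        time.sleep(0.01)  # simulated slow legacy call
        processed.append(event["order_id"])

def publish(order_id):
    """Enqueue the event and return immediately, without waiting for the consumer."""
    events.put({"type": "OrderPlaced", "order_id": order_id})

worker = threading.Thread(target=legacy_consumer)
worker.start()

# The producer publishes a burst of 20 events without blocking on the consumer.
for i in range(20):
    publish(f"order-{i}")

events.put(None)  # signal the end of the stream
worker.join()     # wait only so the example can inspect the result
```

The producer loop returns as soon as the events are queued, while the consumer works through the backlog in order at its own speed. A real broker adds durability and network delivery on top of the same idea.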

"Asynchronous architecture ensures resilience. Event-driven systems handle third-party unavailability gracefully, automatically retrying failed operations and maintaining system stability even when dependencies experience issues." - AWS Architecture Blog

This approach not only minimizes downtime but also improves scalability and fault tolerance, which are explored further below.

Better Scalability and Fault Tolerance

One of the key advantages of event-driven architectures is the ability to scale individual components independently. For instance, during peak times when order volumes surge, you can scale up the order processing service without needing to modify the legacy ERP system. This targeted scaling approach reduces risk while improving efficiency.

Additionally, fault isolation ensures that failures in new services don’t disrupt the legacy systems. A practical example comes from HEINEKEN, which adopted an event-driven architecture in 2021 to connect over 4,500 critical applications related to payments, logistics, and inventory. This shift improved their ability to handle sudden spikes in demand while reducing production interruptions.

Event-Driven vs. Synchronous Integration

When comparing event-driven and synchronous integration, the former stands out in several critical areas, as shown in the table below:

| Feature | Synchronous Integration | Event-Driven Integration |
|---|---|---|
| Downtime Risk | High; a failure in one component can cascade through systems | Low; decoupled components continue functioning independently |
| Scalability | Limited by the slowest component in the chain | High; components can scale independently |
| Fault Tolerance | Poor; all systems must be immediately available | High; retries and deferred execution handle outages |
| Data Consistency | Immediate/Strong consistency | Eventual consistency; state updates over time |
| User Experience | Users experience delays | Users receive immediate confirmation |

A notable example of event-driven success is the Federal Aviation Administration's System Wide Information Management (SWIM) system. By utilizing event brokers, SWIM delivers real-time flight data to airlines and partners across the U.S., eliminating the need for constant database polling and streamlining operations.

Core Components of Event-Driven Integration

Event Producers and Consumers

Event producers play a crucial role in detecting and publishing state changes from legacy systems. Their job is to translate these internal state changes into standardized events, essentially acting as the bridge between older systems and modern architectures. By doing this at the edge of the legacy systems, producers ensure that these systems remain unaware of the specific microservices consuming their data. This separation of concerns avoids unnecessary complexity and keeps the legacy systems focused on their core functions.

On the flip side, event consumers subscribe to these published events and perform specific actions based on them. For instance, a consumer might update a database replica, send notifications, or sync data with a mobile application. One of the standout features of consumers is their independence - if one consumer fails, it doesn’t disrupt the entire system. Consumers work on an eventual consistency model, so a failed consumer can catch up later without blocking the others.

"Event-driven integration turns conventional integration architecture inside-out - from a centralized system with connectivity and transformation in the middle to a distributed event-driven approach, whereby integration occurs at the edge of an event-driven core." - Solace

The beauty of this setup lies in decoupling. For example, a legacy SAP system doesn’t need to know about every microservice that relies on its data. It simply publishes events, leaving an intermediary broker to handle the distribution. In the manufacturer example mentioned earlier, this approach cut order processing times from 12 seconds to under 1 second and let teams release updates weekly instead of quarterly.
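The producer/consumer split can be illustrated with a small in-memory sketch. It is an assumption-laden toy rather than a real broker: `InMemoryBroker` and the three consumer functions are hypothetical names, and delivery here is a plain function call, whereas a real broker delivers asynchronously over the network.

```python
from collections import defaultdict

class InMemoryBroker:
    """Hypothetical stand-in for a broker; real brokers deliver asynchronously."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self.failures = []  # (consumer name, event) pairs that raised

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        # The producer only names a topic; it never references a consumer.
        for handler in self._subscribers[topic]:
            try:
                handler(event)
            except Exception:
                # One failing consumer must not stop delivery to the others.
                self.failures.append((handler.__name__, event))

replica = {}
notifications = []

def update_replica(event):
    replica[event["order_id"]] = event["status"]

def send_notification(event):
    raise RuntimeError("mail server unavailable")  # simulated outage

def sync_mobile(event):
    notifications.append(f"Order {event['order_id']} is {event['status']}")

broker = InMemoryBroker()
for consumer in (update_replica, send_notification, sync_mobile):
    broker.subscribe("orders", consumer)

# The legacy-side producer publishes once and knows nothing about the consumers.
broker.publish("orders", {"order_id": "A-1", "status": "CREATED"})
```

Even though `send_notification` fails, the replica and the mobile sync still receive the event, and the failure is recorded so it could be retried later.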

The next critical component in this architecture is the event broker, which ensures smooth and efficient event routing.

Event Brokers and Messaging Systems

An event broker acts as the middleman between producers and consumers, functioning like a smart traffic controller. Tools like Apache Kafka, RabbitMQ, and Solace PubSub+ are popular choices for this role. The broker’s primary responsibilities include routing events, buffering traffic, and ensuring reliable delivery - even during system outages.

Legacy systems often struggle to handle the demands of modern web-scale traffic. This is where brokers shine. By buffering spikes in traffic and delivering events at a manageable pace, they prevent legacy systems from being overwhelmed. Additionally, if a legacy database goes offline for maintenance, the broker can store events and replay them once the system is back online, ensuring no data is lost.
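Buffering and replay can be pictured as an append-only log with a read position per consumer. The sketch below is a simplified model under that assumption; `EventLog`, `poll`, and the consumer name `legacy-db-writer` are hypothetical and do not correspond to any specific broker's API.

```python
class EventLog:
    """Hypothetical append-only log with one read offset per consumer."""

    def __init__(self):
        self._events = []
        self._offsets = {}

    def append(self, event):
        self._events.append(event)

    def poll(self, consumer, max_events):
        """Return up to max_events unread events and advance the consumer's offset."""
        start = self._offsets.get(consumer, 0)
        batch = self._events[start:start + max_events]
        self._offsets[consumer] = start + len(batch)
        return batch

log = EventLog()

# The legacy database is down for maintenance: events pile up in the log.
for n in range(5):
    log.append({"seq": n})

# Back online: the consumer drains the backlog two events at a time.
batches = []
while True:
    batch = log.poll("legacy-db-writer", max_events=2)
    if not batch:
        break
    batches.append([event["seq"] for event in batch])
```

Because the consumer controls the batch size, the backlog is replayed in order and at a pace the legacy system can absorb.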

Brokers are also a natural place for protocol translation. Connector tooling around the broker, such as Kafka Connect or Amazon EventBridge API Destinations, can help bridge older formats like SOAP or flat files and modern standards like JSON over HTTP. This capability narrows the gap between outdated and modern systems and simplifies integration.
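As a rough illustration of format translation, the following sketch turns a SOAP 1.1 response into a JSON event using only the standard library. The `OrderCreated` payload and the `soap_to_json` helper are invented for the example, and the code assumes a flat, un-namespaced payload; it does not show how Kafka Connect or EventBridge perform the conversion.

```python
import json
import xml.etree.ElementTree as ET

# SOAP 1.1 envelope namespace.
SOAP_NS = {"soap": "http://schemas.xmlsoap.org/soap/envelope/"}

def soap_to_json(xml_text):
    """Convert the first element inside the SOAP Body into a JSON event string."""
    root = ET.fromstring(xml_text.strip())
    body = root.find("soap:Body", SOAP_NS)
    payload = body[0]  # the first child of Body is the operation element
    fields = {child.tag: child.text for child in payload}
    return json.dumps({"type": payload.tag, "data": fields}, sort_keys=True)

# Hypothetical legacy response; element names are assumptions for this example.
legacy_response = """
<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
  <soap:Body>
    <OrderCreated>
      <orderId>42</orderId>
      <status>NEW</status>
    </OrderCreated>
  </soap:Body>
</soap:Envelope>
"""

event_json = soap_to_json(legacy_response)
```

All values come out as strings here; a fuller translation layer would also map types and validate the payload against a schema.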

| Component | Role in Legacy Integration | Common Implementation |
|---|---|---|
| Event Producer | Captures changes from legacy systems and publishes them | CDC (Debezium), API Gateways, Polling Jobs |
| Event Broker | Routes, buffers, and persists events for decoupled delivery | Apache Kafka, RabbitMQ, Solace PubSub+ |
| Event Consumer | Receives events to trigger logic or update target systems | Microservices, Webhooks, Sink Connectors |
| Micro-integration | Small, modular component at the edge for transformation | Docker containers, Lambda functions |

Patterns for Modernizing Legacy Systems

Leave-and-Layer Pattern

The Leave-and-Layer pattern focuses on building new capabilities alongside the existing legacy system without altering its core. Instead of diving into legacy code, you integrate new functionality through loose coupling. For example, minimal code can be added to publish events - like a "New Customer Signup" - to an event broker such as Amazon EventBridge. The broker then routes these events to new services according to configured rules, without the legacy system knowing which services receive them.
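Rule-based routing of a "New Customer Signup" event can be sketched as follows. The envelope fields are loosely modelled on the shape of EventBridge events, but this is plain Python and not the EventBridge API; the `RULES` table, the target service names, and the `legacy.crm` source are all hypothetical.

```python
import uuid
from datetime import datetime, timezone

def build_event(detail_type, detail):
    """Wrap a legacy state change in a small, self-describing envelope."""
    return {
        "id": str(uuid.uuid4()),
        "time": datetime.now(timezone.utc).isoformat(),
        "source": "legacy.crm",  # assumed name for the legacy system
        "detail-type": detail_type,
        "detail": detail,
    }

# Each rule pairs a pattern (field -> allowed values) with a target service.
RULES = [
    ({"detail-type": ["New Customer Signup"]}, "welcome-email-service"),
    ({"source": ["legacy.crm"], "detail-type": ["New Customer Signup"]}, "crm-sync-service"),
    ({"detail-type": ["Customer Deleted"]}, "cleanup-service"),
]

def matches(pattern, event):
    """A pattern matches when every listed field has one of the allowed values."""
    return all(event.get(field) in allowed for field, allowed in pattern.items())

def route(event):
    """Return the targets whose rules match; the producer never sees this list."""
    return [target for pattern, target in RULES if matches(pattern, event)]

signup = build_event("New Customer Signup", {"customer_id": 7})
targets = route(signup)
```

Adding a new capability then means adding a rule and a target, while the hook in the legacy application stays the same.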

"The 'leave-and-layer' architectural pattern... enables you to add new capabilities to existing applications without the complexity and risk of traditional modernization approaches." - AWS Blog

This approach is particularly handy when speed is critical or when dealing with outdated or opaque systems. Since the original system remains untouched, there’s minimal risk, and teams can roll out cloud-native enhancements - like mobile APIs or real-time analytics - within weeks instead of months.

A great example comes from a banking institution in 2025. They modernized their COBOL-based lending platform using this pattern. By layering new features on top with AWS Lambda and Amazon DynamoDB, they introduced real-time credit checks. This method cut feature delivery timelines from months to weeks and eliminated the need for COBOL expertise for new functionalities.

The Leave-and-Layer pattern pairs well with event-driven architectures, enabling rapid deployment of modern services while keeping legacy systems intact.

Strangler Fig Pattern

While Leave-and-Layer focuses on extending legacy systems, the Strangler Fig pattern takes a gradual replacement approach. It uses a façade or proxy to intercept and route requests, directing them either to the legacy system or to new microservices. This ensures the migration process remains invisible to end users.
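A façade of this kind can be reduced to a routing decision per request. The sketch below assumes path-prefix routing and uses hypothetical handler names and prefixes; a production façade would more likely live in an API gateway or reverse proxy.

```python
# Path prefixes whose functionality has already been moved to new services.
# Both prefixes are assumptions made for this example.
MIGRATED_PREFIXES = ("/orders/confirmations", "/catalog")

def legacy_system(path):
    return f"legacy handled {path}"

def new_service(path):
    return f"new service handled {path}"

def facade(path):
    """Route a request to the new service if its path was migrated, else to legacy."""
    if path.startswith(MIGRATED_PREFIXES):  # str.startswith accepts a tuple
        return new_service(path)
    return legacy_system(path)

confirmation = facade("/orders/confirmations/42")
payment = facade("/payments/9")
```

Migration then proceeds by growing `MIGRATED_PREFIXES` one workflow at a time, and callers keep using the same entry point throughout.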

Modern implementations often involve event interception through messaging systems or Change Data Capture. These methods allow updates to flow to new components asynchronously, ensuring the system stays responsive.

One Fortune 500 company demonstrated this pattern’s potential by overhauling its order processing. By shifting from synchronous legacy calls to an asynchronous, strangler-based setup, they reduced processing time from 12 seconds to under 1 second. Additionally, they accelerated feature releases from quarterly to weekly, increasing delivery speed tenfold.

However, this pattern requires a deep understanding of the legacy codebase. Safely extracting functionality often involves managing challenges like database splitting and API versioning. Starting with low-risk workflows, such as order confirmations, can help build confidence before tackling more sensitive areas like financial operations. Introducing an Anti-Corruption Layer can also prevent old design flaws from affecting the new system.

Here’s a quick comparison of these two strategies:

| Feature | Leave-and-Layer Pattern | Strangler Fig Pattern |
|---|---|---|
| Primary Goal | Add new capabilities/extensions quickly | Gradually replace and decommission legacy systems |
| Legacy Impact | Leaves core system unchanged | Extracts and replaces functionality piece by piece |
| Risk Level | Very low; no risk to existing functionality | Moderate; requires deep knowledge to extract code |
| Speed to Value | Very fast (single sprints) | Incremental (months to years) |


Implementation Strategies

Using Change Data Capture (CDC)

Change Data Capture (CDC) offers a way to integrate legacy systems into real-time event streaming without altering the existing application code. By monitoring transaction logs - like redo logs or binlogs - CDC identifies database changes such as INSERT, UPDATE, and DELETE operations as they happen. This method works directly at the database layer, making it a practical option for connecting older systems to an event-driven architecture. Tools like Debezium (compatible with PostgreSQL, MySQL, SQL Server, and Oracle) and Maxwell (specific to MySQL) read transaction logs with minimal impact on database performance.

"Change data capture (CDC) converts all the changes that occur inside your database into events and publishes them to an event stream."

- Andrew Sellers, Head of Technology Strategy Group at Confluent

Since raw CDC events are typically low-level, they often require a multi-stage pipeline to convert them into actionable business events. For instance, you might aggregate multiple row changes into a single "Order Shipped" event. Another useful strategy is creating a real-time replica of legacy tables to handle complex queries. Stream processing tools can then transform and aggregate CDC messages into clean, consumable business data.
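The step from row-level changes to a business event can be sketched as a small transformation. The change records below use a simplified envelope with `op`, `before`, `after`, and `source.table` fields, similar in spirit to what log-based CDC tools emit; the table names, columns, and the `to_business_events` function are assumptions for illustration.

```python
def to_business_events(changes):
    """Fold low-level row changes into 'OrderShipped' business events."""
    tracking_by_order = {}  # order id -> tracking number seen so far
    business_events = []
    for change in changes:
        table = change["source"]["table"]
        before, after = change["before"], change["after"]
        if table == "shipments" and change["op"] == "c":
            # Remember the tracking number from the inserted shipment row.
            tracking_by_order[after["order_id"]] = after["tracking_no"]
        elif table == "orders" and change["op"] == "u":
            # Emit only on the transition into SHIPPED, not on every update.
            if before["status"] != "SHIPPED" and after["status"] == "SHIPPED":
                business_events.append({
                    "type": "OrderShipped",
                    "order_id": after["id"],
                    "tracking_no": tracking_by_order.get(after["id"]),
                })
    return business_events

changes = [
    {"op": "c", "source": {"table": "shipments"}, "before": None,
     "after": {"order_id": 42, "tracking_no": "TRK-9"}},
    {"op": "u", "source": {"table": "orders"},
     "before": {"id": 42, "status": "PACKED"},
     "after": {"id": 42, "status": "SHIPPED"}},
    {"op": "u", "source": {"table": "orders"},
     "before": {"id": 42, "status": "SHIPPED"},
     "after": {"id": 42, "status": "SHIPPED"}},
]

shipped = to_business_events(changes)
```

Three row changes collapse into a single business event. In a real pipeline this logic would typically run in a stream processor with durable state rather than an in-process dictionary.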

Connecting to Legacy Systems Without APIs

Many older systems were built before REST APIs became standard, so alternative methods are needed when log-based CDC isn’t an option. One such approach is the Transactional Outbox Pattern, which writes events to a dedicated outbox table within the same database transaction as the business logic. A separate process then polls this table and sends events to an event broker, ensuring consistency, albeit with the need for minor changes to the legacy application.
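A minimal sketch of the outbox idea, using SQLite from the standard library as a stand-in for the legacy database. The schema, `place_order`, and `relay` are hypothetical; the point is only that the business row and the outbox row are written in one transaction, and a separate relay publishes unpublished rows.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT NOT NULL);
CREATE TABLE outbox (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    payload TEXT NOT NULL,
    published INTEGER NOT NULL DEFAULT 0
);
""")

def place_order(order_id, customer):
    """Write the business row and its event in the same transaction."""
    with conn:  # commits both inserts, or rolls both back on error
        conn.execute("INSERT INTO orders (id, customer) VALUES (?, ?)",
                     (order_id, customer))
        event = {"type": "OrderPlaced", "order_id": order_id}
        conn.execute("INSERT INTO outbox (payload) VALUES (?)",
                     (json.dumps(event),))

def relay(publish):
    """Publish unpublished outbox rows in order and mark each one as sent."""
    rows = conn.execute(
        "SELECT id, payload FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    for row_id, payload in rows:
        publish(json.loads(payload))  # in practice: send to the event broker
        with conn:
            conn.execute("UPDATE outbox SET published = 1 WHERE id = ?", (row_id,))
    return len(rows)

place_order(1, "ada")
place_order(2, "bob")

sent = []
relay(sent.append)
```

If the relay crashes after publishing but before marking a row, that event is sent again on the next run, so delivery is at-least-once and consumers should be prepared for duplicates.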

Other techniques include:

- Database triggers: Automatically capture and process changes directly in the database.

- Reverse proxies: Intercept HTTP(S) traffic to extract and redirect data.

- JavaScript injections: Embed scripts into web templates to reroute user actions to modern services.

An Anti-Corruption Layer (ACL) can also be used to translate the legacy system’s internal data into stable, modern formats for downstream services.
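In its simplest form, an Anti-Corruption Layer is a translation function at the boundary. The sketch below assumes a legacy record with cryptic column names, zero-padded identifiers, and `YYYYMMDD` dates; the column names `CUSTNO`, `CUSTNM`, and `CRTDT` are invented for the example.

```python
from datetime import datetime

def translate_customer(legacy_row):
    """Map a legacy record onto the stable shape that downstream services use."""
    return {
        # Strip the zero padding the legacy system stores.
        "customer_id": legacy_row["CUSTNO"].lstrip("0"),
        # Trim fixed-width padding and normalize capitalization.
        "name": legacy_row["CUSTNM"].strip().title(),
        # Convert YYYYMMDD into an ISO 8601 date string.
        "created_on": datetime.strptime(legacy_row["CRTDT"], "%Y%m%d").date().isoformat(),
    }

legacy_row = {"CUSTNO": "0000012345", "CUSTNM": "ACME TOOLS   ", "CRTDT": "20240131"}
customer = translate_customer(legacy_row)
```

Downstream services depend only on the translated shape, so quirks of the legacy schema stay contained in one place.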

Roman Rylko, CTO at Pynest, highlights the importance of respecting legacy systems:

"Integrating with legacy isn't 'old vs. new.' It's gravity. You have a system that has carried the business for years - imperfect, undocumented, but revenue-critical".

For example, a global manufacturer revamped their web store integration with a 20-year-old SAP system. Initially, synchronous SAP calls caused 12-second transaction delays. By using a Strangler Fig pattern with Go and Kafka, the store began processing orders instantly, publishing events asynchronously to a broker. An integration service then updated SAP in the background. This reduced transaction times to under a second and allowed the company to accelerate feature releases from quarterly to weekly, boosting development speed tenfold.

Achieving Eventual Consistency

In an event-driven system, eventual consistency accepts that data synchronization across systems may take time but will ultimately align. The Transactional Outbox Pattern, mentioned earlier, ensures consistency by recording both business data and events in the same transaction. To handle duplicate events, idempotency keys are crucial - they prevent repeated processing from corrupting data. Maintaining an event stream as the single source of truth also provides a way to replay events and restore the correct state when desynchronization occurs.
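Idempotent handling can be sketched as a consumer that remembers which keys it has already applied. The `IdempotentConsumer` class and the `idempotency_key` field name are assumptions; in practice the set of seen keys would be stored durably, ideally in the same transaction as the state change.

```python
class IdempotentConsumer:
    """Hypothetical consumer that applies each event at most once."""

    def __init__(self):
        self.seen_keys = set()  # would live in durable storage in practice
        self.balance = 0

    def handle(self, event):
        """Apply the event unless its key was already processed; return True if applied."""
        key = event["idempotency_key"]
        if key in self.seen_keys:
            return False  # duplicate delivery: skip without side effects
        self.balance += event["amount"]
        self.seen_keys.add(key)
        return True

consumer = IdempotentConsumer()
payment = {"idempotency_key": "pay-001", "amount": 50}

# The broker redelivers the same event; only the first delivery changes state.
results = [consumer.handle(payment), consumer.handle(payment)]
consumer.handle({"idempotency_key": "pay-002", "amount": 25})
```

With this guard in place, at-least-once delivery from the broker no longer risks double-counting.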

To manage eventual consistency effectively:

- Apply business logic to filter and validate events before they are published.

- Use synchronous processes with auditing for critical operations while relying on retries and eventual consistency for less critical ones.

- Build materialized views to create read-optimized projections, avoiding the need for real-time joins on normalized legacy tables.
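The materialized-view idea from the last point can be sketched as a fold over the event stream. The event types and the `project` function are hypothetical; the same function doubles as a replay mechanism, since running it again over the stream rebuilds the view from scratch.

```python
def project(events):
    """Build a read-optimized view of orders by folding over the event stream."""
    view = {}
    for event in events:
        order = view.setdefault(event["order_id"], {"customer": None, "status": None})
        if event["type"] == "OrderPlaced":
            order["customer"] = event["customer"]
            order["status"] = "PLACED"
        elif event["type"] == "OrderShipped":
            order["status"] = "SHIPPED"
    return view

stream = [
    {"type": "OrderPlaced", "order_id": "A-1", "customer": "ada"},
    {"type": "OrderPlaced", "order_id": "A-2", "customer": "bob"},
    {"type": "OrderShipped", "order_id": "A-1"},
]

orders_view = project(stream)
```

Queries read from `orders_view` directly instead of joining normalized legacy tables, and a corrupted view can be discarded and rebuilt by replaying the stream.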

As Alessandro Confetti and Enrico Piccinin from Thoughtworks explain:

"To move towards an event driven architecture, while continuing to run your legacy - and without having to change a line of code - might sound like a pipe dream, but it's surprisingly simple".

Adalo Blue Integration for Legacy Systems

Legacy Integration Using DreamFactory

Adalo Blue uses DreamFactory to expose legacy databases as secure REST APIs. The platform works with databases like IBM DB2, AS/400, MS SQL Server, and Oracle, generating fully documented APIs much faster than hand-built integrations. It also exposes older SOAP services as RESTful APIs, making them accessible to modern mobile and web applications.

With over 70% of enterprise data locked in legacy systems, automation becomes a challenge without integration tools. DreamFactory solves this by adding robust security features - such as RBAC, OAuth 2.0, and API key management - to legacy data that often lacks these protections. Each API comes with interactive Swagger documentation, simplifying developer onboarding, and supports server-side scripting in Python, PHP, or Node.js for custom logic.

"DreamFactory is far easier to use than our previous API management provider, and significantly less expensive."

- Adam Dunn, Sr. Director, Global Identity Development & Engineering, McKesson

For example, Deloitte utilized DreamFactory to provide executives with real-time access to legacy ERP data through modern dashboards, ensuring secure and efficient data flow for decision-making. Similarly, E.C. Barton & Company connected a legacy ERP system to a modern e-commerce platform, enabling seamless data sharing while safeguarding sensitive customer information.

By enabling legacy data through APIs, Adalo Blue streamlines deployment and delivers modern user experiences without overhauling existing systems.

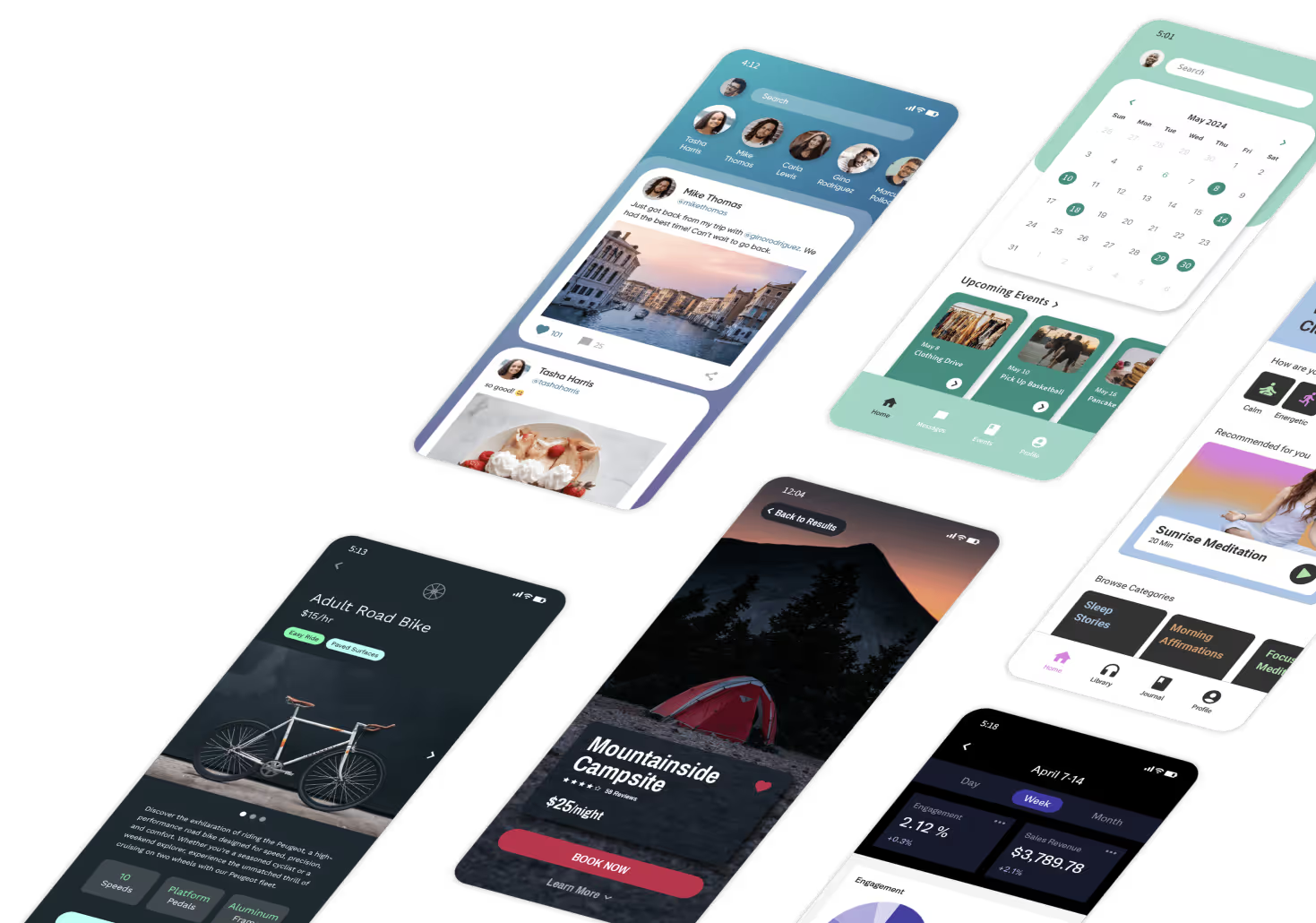

Faster App Deployment with Adalo Blue

Once DreamFactory converts legacy data into APIs, Adalo uses External Collections to access this data in real time. This integration ensures seamless, event-driven data flows between outdated systems and modern applications. Adalo’s AI-assisted builder and single-codebase architecture allow organizations to launch production-ready apps on the Apple App Store and Google Play in just days or weeks - far quicker than the months required for traditional custom development. This approach modernizes systems while maintaining critical operations, eliminating development delays.

Creating APIs through automation typically takes 1 to 3 months, compared to the 12 to 36 months required for re-engineering legacy systems. Automated API generation can save organizations around $45,719 per API, while building a single API integration manually with full security can take up to 34 days. Teams using this approach report development speeds 50% faster than traditional methods, with modernization improving processing speeds by up to 80%, for example reducing response times from 5 seconds to about 1 second.

"Modernization does not require replacement. With Adalo Blue, you keep your systems - and gain the flexibility to build on top of them."

- Adalo Blue

Conclusion

Event-driven integration offers a practical way for organizations to modernize legacy systems without the risks of full-scale overhauls - which fail approximately 83% of the time. By using incremental approaches like the Strangler Fig or Leave-and-Layer patterns, businesses can gradually transition to more efficient systems. Moving from synchronous to asynchronous communication further helps eliminate bottlenecks caused by outdated architectures.

On the technical side, event brokers simplify operations by automatically managing retries and failures, reducing the chance of cascading outages often seen in tightly coupled systems. These mechanisms strengthen the case for adopting event-driven integration.

The results speak for themselves. Organizations using these methods have reported cost savings of 20% to 35% and deployment frequencies up to 973 times higher compared to traditional approaches. Shopify, for instance, cut its continuous integration pipeline time by 60% - from 45 minutes to just 18 - while maintaining zero downtime for over 1 million merchants. Similarly, the Jochen Schweizer mydays Group achieved uninterrupted service during a post-merger system consolidation and improved page load times by 37%.

Adalo Blue takes this a step further by offering automated API generation and a single-codebase builder, enabling production-ready apps in a fraction of the usual time. Developers have reported speeds up to 50% faster than conventional methods, making event-driven integration not just efficient but also highly achievable.

FAQs

How does event-driven integration enhance legacy systems?

Event-driven integration transforms how legacy systems operate by enabling real-time communication between different components. This minimizes delays and boosts responsiveness, creating a more dynamic and efficient system.

One of the key advantages is that it allows systems to work independently while still sharing data seamlessly. This means you can modernize your legacy systems step by step, avoiding the need for a complete overhaul. Plus, adding new features or services becomes simpler and less disruptive, ensuring your existing functionality remains intact while paving the way for easier updates in the future.

What are the advantages of using the Leave-and-Layer pattern to modernize legacy systems?

The Leave-and-Layer pattern provides a practical way to modernize legacy systems with minimal disruption. Instead of overhauling everything at once, this method focuses on incremental updates, which means you can avoid downtime and keep your operations running smoothly.

With the addition of event-driven architecture, you can introduce new features and services that complement your existing systems. This approach lets you integrate modern capabilities without sacrificing the functionality of your legacy applications, creating a smoother path toward more advanced and scalable solutions.

How can legacy systems without modern APIs be integrated using Change Data Capture (CDC)?

Change Data Capture (CDC) bridges the gap between legacy systems and modern applications by tracking database changes and turning them into event streams. It captures real-time insert, update, and delete operations directly from transaction logs - like binlogs or write-ahead logs - and publishes these changes as events. This allows older systems to connect with modern microservices and fit into event-driven architectures.

CDC offers a way to modernize systems step by step without needing a complete overhaul. For instance, combining CDC with the Outbox pattern ensures reliable event delivery, creating a smooth data flow between legacy databases and newer applications. This method enhances scalability, responsiveness, and synchronization while keeping risks and costs under control.
