Testing real-time apps is challenging but essential to avoid crashes, lag, or broken interfaces that drive users away. With 71% of app uninstalls caused by crashes and 70% of users abandoning slow-loading apps, identifying and fixing issues early is critical. Here's what you need to know:

- Sync Delays: Updates often lag across platforms due to differences in data processing and network latency.

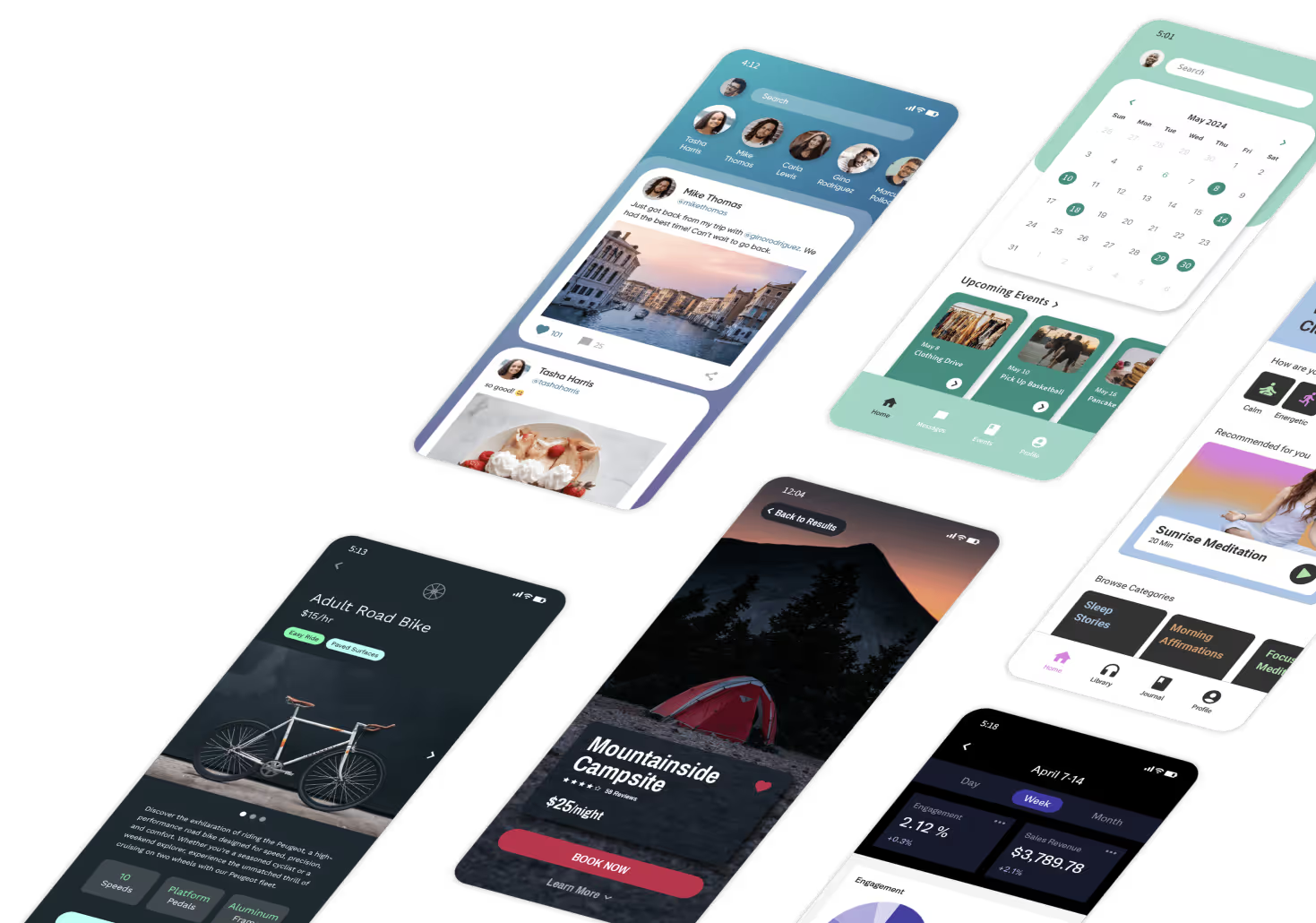

- Device Fragmentation: Apps may break or slow down on devices with varying screen sizes and hardware capabilities.

- Network Variability: Slow or unstable connections can expose hidden performance problems.

- Performance Bottlenecks: Heavy database queries, third-party API calls, and complex components can drag down speed.

- Inconsistent User Experiences: Gestures, notifications, and workflows may behave differently on iOS versus Android.

To address these, simulate user scenarios with network throttling, test on real devices, and optimize database queries. Tools like AI-driven automation and cloud-based testing platforms can significantly improve efficiency, helping you catch issues before they reach users. Platforms like Adalo streamline this process with features like single-build syncing and performance optimization tools, ensuring smoother app performance across platforms.


Common Problems in Real-Time App Testing


Testing real-time apps comes with its own set of hurdles. Differences in how platforms process data, varying network conditions, and the range of device specifications can all impact performance. Recognizing these challenges early on helps you address issues before they reach your users. Below, we’ll dive into specific problems like sync delays, device fragmentation, and performance bottlenecks.

Sync Delays Across Platforms

One common issue is the delay in updates appearing across web, iOS, and Android platforms. This can happen because each platform parses and renders incoming JSON data in its own way. Geographic distance can make things worse - testing from Europe or Asia against servers based in the U.S., for example, often adds noticeable round-trip latency.

Performance bottlenecks can compound these delays. Heavy data retrieval, complex calculations, or filtering within lists during screen loads can significantly slow things down. Third-party API calls, like those to Google Maps, might cause additional delays or even fail altogether depending on the platform. Even components that aren’t visible still consume resources, preventing your app from reaching an idle state and leading to perceived sync delays.

To mitigate these issues:

- Optimize database queries by fetching only the essential data, such as the latest 10 records instead of the full dataset.

- Store pre-calculated values in number properties instead of relying on dynamic calculations.

- During automated testing, exclude background tasks like long-polling or WebSocket connections that could block execution.

- Always test on physical devices to catch platform-specific rendering issues that web previews might miss.
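As a rough illustration of the first tip, the sketch below fetches only the newest 10 rows rather than the whole table. It uses Python's built-in `sqlite3` module with an in-memory database; the `messages` table, its columns, and the `latest_messages` helper are hypothetical names chosen for the example, not part of any specific platform.

```python
import sqlite3


def latest_messages(conn: sqlite3.Connection, limit: int = 10) -> list[tuple[int, str]]:
    """Return only the newest `limit` rows instead of the full dataset."""
    cursor = conn.execute(
        "SELECT id, body FROM messages ORDER BY id DESC LIMIT ?",
        (limit,),
    )
    return cursor.fetchall()


# Demo data: 500 hypothetical rows in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE messages (id INTEGER PRIMARY KEY, body TEXT)")
conn.executemany(
    "INSERT INTO messages (body) VALUES (?)",
    [(f"message {n}",) for n in range(1, 501)],
)

recent = latest_messages(conn)
print(len(recent), recent[0])
```

The screen then renders 10 rows regardless of how large the table grows, which keeps load time roughly constant as data accumulates.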

Device Fragmentation and Responsive Design Problems

The sheer variety of devices - with different screen sizes, operating systems, and hardware capabilities - makes consistent testing a challenge. What looks great on a laptop might break on an iPhone SE or a low-end Android tablet. The "Preview" button in editors often only reflects the web version, meaning components relying on React Native libraries can behave differently on mobile.

Components nested more than four levels deep can slow down loading times and disrupt layouts. Additionally, low-end devices may struggle with heavy data loads, while high-end devices might mask performance issues.

To address these challenges:

- Simplify complex screens by splitting them into smaller, more manageable ones.

- Use standard list types (like simple, card, or avatar lists) instead of custom lists to improve performance.

- Remove unnecessary groups and hidden components that add processing weight even when not visible.

- Set limits on database queries to avoid overwhelming low-end devices during previews.
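One way to catch deep nesting before it reaches a device is a small check over the component tree. The sketch below is only an illustration: the `Component` class and the example screen are hypothetical, and it assumes the screen itself counts as level 1.

```python
from dataclasses import dataclass, field

MAX_DEPTH = 4  # threshold from the guidance above; the root counts as level 1 (assumption)


@dataclass
class Component:
    name: str
    children: list["Component"] = field(default_factory=list)


def too_deep(node: Component, limit: int = MAX_DEPTH,
             depth: int = 1, path: tuple[str, ...] = ()) -> list[str]:
    """Return the path of every component nested deeper than `limit` levels."""
    current = path + (node.name,)
    found = [" > ".join(current)] if depth > limit else []
    for child in node.children:
        found.extend(too_deep(child, limit, depth + 1, current))
    return found


# Hypothetical screen: screen > group > list > card > row > label
screen = Component("screen", [
    Component("group", [
        Component("list", [
            Component("card", [
                Component("row", [Component("label")]),
            ]),
        ]),
    ]),
])

offenders = too_deep(screen)
for offender in offenders:
    print("too deep:", offender)
```

Here `row` and `label` sit at levels 5 and 6, so both are reported as candidates for flattening or for moving to a separate screen.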

Network Variability and Its Effect on Real-Time Previews

Network conditions can greatly influence app behavior during testing. An app that works flawlessly on office Wi-Fi might struggle or fail entirely on a slower 3G connection or in offline mode. These inconsistencies make it difficult to predict performance in real-world scenarios.

Geographic distance from servers adds another layer of complexity. For example, apps tested locally on U.S.-based servers might perform differently for users in other regions. Interactions with third-party services can also introduce delays based on network quality.

To identify these issues:

- Simulate various network conditions by throttling your connection to 3G speeds or testing offline functionality.

This approach reveals performance problems that only surface under constrained conditions, helping you optimize for users with less reliable connectivity.
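Before throttling a real connection, a back-of-the-envelope estimate can show which screens are likely to struggle. The sketch below models load time as per-request latency plus transfer time. The profile numbers are illustrative assumptions, not the presets of any particular tool, and the helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NetworkProfile:
    name: str
    latency_ms: float     # round-trip latency per request
    downlink_kbps: float  # sustained download throughput in kilobits/s


# Illustrative values only; real throttling presets vary by tool.
PROFILES = [
    NetworkProfile("wifi", latency_ms=20, downlink_kbps=30_000),
    NetworkProfile("4g", latency_ms=60, downlink_kbps=9_000),
    NetworkProfile("3g", latency_ms=300, downlink_kbps=750),
]


def estimated_load_ms(profile: NetworkProfile, payload_kb: float, requests: int = 1) -> float:
    """Estimate load time: sequential request latency plus payload transfer time."""
    transfer_ms = payload_kb * 8 / profile.downlink_kbps * 1000
    return requests * profile.latency_ms + transfer_ms


def failing_profiles(payload_kb: float, requests: int, budget_ms: float) -> list[str]:
    """Names of the profiles whose estimated load time exceeds the budget."""
    return [
        p.name for p in PROFILES
        if estimated_load_ms(p, payload_kb, requests) > budget_ms
    ]


# A hypothetical screen: 600 KB of data across 5 sequential requests, 3-second budget.
failing = failing_profiles(payload_kb=600, requests=5, budget_ms=3000)
print(failing)
```

An estimate like this does not replace throttled testing on a device, but it helps decide which screens to test first.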

Performance Bottlenecks in Interactive Previews

Interactive previews often lag when apps become too resource-intensive. As the Adalo Help Center explains:

"Every single time your app queries the database... carries out complicated logic... or talks to a third-party network... app performance will suffer."

Heavy database queries, calculations within lists, and hidden components all contribute to sluggish performance. Excessive grouping and deeply nested structures (over four levels) slow things down even further.

To improve performance:

- Pre-calculate values rather than computing them in real-time.

- Compress images and limit dynamic text within lists to reduce data loads.

- Break up overloaded screens into multiple simpler ones to ease processing demands.

- Test on real iOS and Android devices to account for differences in rendering engines and hardware.
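The first tip, pre-calculating values, can be sketched as follows. The `Order` class and both render functions are hypothetical; the point is only that the total is updated once at write time instead of being recomputed for every row on every screen load.

```python
from dataclasses import dataclass, field


@dataclass
class Order:
    items: list[float] = field(default_factory=list)
    total: float = 0.0  # pre-calculated value, kept up to date on write

    def add_item(self, price: float) -> None:
        self.items.append(price)
        self.total += price  # one cheap update when data changes


def render_recomputing(orders: list[Order]) -> list[float]:
    """Sums every order's items each time the list is rendered."""
    return [sum(order.items) for order in orders]


def render_precalculated(orders: list[Order]) -> list[float]:
    """Reads the stored total; no per-row calculation at render time."""
    return [order.total for order in orders]


first, second = Order(), Order()
first.add_item(10.0)
first.add_item(5.5)
second.add_item(4.0)
orders = [first, second]

print(render_precalculated(orders))
```

Both functions return the same totals, but the second does constant work per row, which matters most in long lists.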

Inconsistent User Experiences Across Platforms

Platform differences can lead to inconsistent user experiences. For example, gestures, notifications, and authentication workflows often behave differently on iOS versus Android. An interaction that feels seamless on one platform might feel awkward on another, due to how each operating system handles native features.

Relying solely on web previews won’t catch these discrepancies. Hands-on testing with physical devices is essential to spot subtle differences that impact user experience. Automated tools can help with visual and interaction checks, but manual testing is crucial for ensuring a consistent experience across platforms. Pay close attention to features like swipe gestures, push notifications, and biometric authentication to provide a smooth, unified experience for all users.

How to Improve Real-Time Testing

To enhance real-time testing, it’s essential to address common challenges by leveraging automation, cloud infrastructure, and analytics. These tools not only shorten testing cycles but also help catch issues early. Below, we outline three strategies to boost your testing process.

Using AI and Automation to Find Issues

Automated testing is a game-changer for identifying bugs early in development, ultimately saving both time and resources. Some AI-driven platforms analyze over 130 performance indicators, making it easier to detect bottlenecks and regressions quickly.

AI-powered tools like HyperExecute can speed up testing processes by as much as 70%. This kind of efficiency is crucial, especially when you consider that 70% of users abandon apps that load too slowly, and app crashes account for 71% of mobile app uninstalls.

"Automation testing reduces human error and improves the efficiency of the testing process." - TestMu AI

Automation frameworks like Selenium, Cypress, or Playwright are particularly effective for handling repetitive test cases. By monitoring metrics such as response time, throughput, and error rates, teams can identify performance lags early. AI-driven testing also provides continuous monitoring for visual elements, ensuring layout and text consistency across various environments.
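To make the three metrics concrete, here is a minimal sketch that summarizes a batch of request samples into average and 95th-percentile response time, throughput, and error rate. The `Sample` class, the `summarize` helper, and the sample data are hypothetical; a real suite would collect these values from its test runner or monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    duration_ms: float
    ok: bool


def summarize(samples: list[Sample], window_s: float) -> dict[str, float]:
    """Summarize response time, throughput, and error rate for one test window."""
    durations = sorted(s.duration_ms for s in samples)
    n = len(durations)
    rank = (95 * n + 99) // 100  # nearest-rank 95th percentile, integer ceiling
    return {
        "avg_ms": sum(durations) / n,
        "p95_ms": durations[rank - 1],
        "throughput_rps": n / window_s,
        "error_rate": sum(not s.ok for s in samples) / n,
    }


# Hypothetical run: 18 fast requests, 2 slow ones (one of which failed), over 10 seconds.
samples = [Sample(100, True) for _ in range(18)]
samples += [Sample(800, True), Sample(900, False)]

summary = summarize(samples, window_s=10)
print(summary)
```

Tracking the 95th percentile alongside the average is a deliberate choice: the average here is 175 ms, which hides the fact that some requests took 800 ms or more.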

Using Cloud-Based Testing Environments

Cloud-based testing platforms offer instant access to thousands of real devices, browsers, and operating system combinations. This eliminates the need to maintain physical hardware, which can be both costly and time-consuming. These platforms also support older versions and adapt quickly to new releases, reducing the risk of platform updates disrupting functionality.

The cost benefits are substantial. Organizations report saving 60–70% on infrastructure expenses compared to running local testing labs. For perspective, maintaining a modest 100-machine on-premise lab can cost nearly $700,000 annually when factoring in power, cooling, facilities, and staffing.

Cloud testing environments also enable parallel execution, allowing multiple tests to run simultaneously across different configurations. This scalability extends to simulating network conditions, such as latency or varying speeds (3G/4G/5G), and even battery levels, ensuring comprehensive testing at scale.

By integrating cloud testing with CI/CD workflows using tools like GitHub Actions or Jenkins, teams can enable continuous testing with immediate feedback on code changes. Splitting large test suites into concurrent processes across cloud containers further reduces test cycle times. Combined with cloud strategies, analyzing user behavior ensures testing efforts align with real-world usage.
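Splitting a suite across workers works best when the shards take similar time. The sketch below uses a simple greedy heuristic (longest test first, always assigned to the least-loaded worker). The test names and durations are made up, and this is one possible balancing strategy rather than what any particular CI service does.

```python
import heapq


def shard_tests(durations: dict[str, float], workers: int) -> list[list[str]]:
    """Assign tests to workers, longest first, always to the least-loaded worker."""
    shards: list[list[str]] = [[] for _ in range(workers)]
    heap = [(0.0, index) for index in range(workers)]  # (current load, worker index)
    heapq.heapify(heap)
    for name, seconds in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        load, index = heapq.heappop(heap)
        shards[index].append(name)
        heapq.heappush(heap, (load + seconds, index))
    return shards


# Hypothetical suite with per-test durations in seconds (240 s if run serially).
durations = {"login": 40, "sync": 90, "push": 30, "checkout": 60, "search": 20}

shards = shard_tests(durations, workers=2)
loads = [sum(durations[name] for name in shard) for shard in shards]
print(shards, loads)
```

With two workers, each shard ends up with 120 seconds of tests, so the wall-clock time is halved compared with a serial run.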

Prioritizing Test Cases with Usage Analytics

To tackle performance issues effectively, focus on the features your audience uses most. Usage analytics provide insights into user behavior, enabling teams to design tests that target high-impact areas. For instance, tools like Google Analytics can reveal which mobile devices and operating systems are most common among your users. This is especially helpful when balancing testing scope - testing just 10 devices can cover 50% of the market, but achieving 90% coverage requires testing 159 devices.

"Prioritize understanding user behavior and design test cases around critical scenarios that align with actual usage." - Rohan Singh, HeadSpin

Real-time monitoring of metrics like response times and error rates, along with setting alerts for underperforming features, ensures your testing efforts focus on what truly matters. By zeroing in on critical scenarios, teams can optimize their testing processes and improve user satisfaction.
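The device-coverage trade-off mentioned earlier in this section can be explored with your own analytics export. The sketch below picks the most-used devices until a target share of users is covered. The device names and percentages are invented for illustration, and `devices_for_coverage` is a hypothetical helper.

```python
def devices_for_coverage(share_pct: dict[str, int], target_pct: int) -> list[str]:
    """Pick the most-used devices until their combined share reaches the target."""
    chosen: list[str] = []
    covered = 0
    for device, pct in sorted(share_pct.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= target_pct:
            break
        chosen.append(device)
        covered += pct
    return chosen


# Hypothetical usage share per device model, in percent of active users.
usage = {
    "Phone A": 30, "Phone B": 22, "Phone C": 15, "Tablet D": 12,
    "Phone E": 9, "Phone F": 7, "Phone G": 5,
}

half = devices_for_coverage(usage, 50)
most = devices_for_coverage(usage, 90)
print(len(half), len(most))
```

In this made-up dataset, two devices cover half the users while 90% coverage needs six, the same pattern of diminishing returns seen in the market-wide figures.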


How Adalo Handles Real-Time App Testing

Adalo simplifies the challenges of real-time app testing by combining a single-codebase system, AI-driven performance insights, and integrated testing tools. These features work together to address sync delays, uncover performance issues early, and simulate real-world scenarios - all within one platform. Here's how Adalo ensures smooth cross-platform updates and reliable app performance.

Single-Build Sync Across Platforms

With Adalo, you only need to build your app once. Its single-codebase approach simultaneously deploys updates to web, iOS, and Android. Whether you're tweaking the UI, adjusting logic, or modifying the database, changes made in the visual builder are instantly applied across all platforms. This ensures consistency and eliminates the hassle of managing separate builds.

Performance improvements have made apps load up to 11x faster, while reducing app sizes by 25%. For developers navigating a market with over 24,000 Android devices and numerous iOS models, this streamlined process significantly reduces testing efforts while maintaining uniformity. On top of instant syncing, Adalo leverages AI to further optimize performance.

AI-Powered X-Ray for Performance Optimization

Adalo's X-Ray feature scans your app for performance bottlenecks before they impact users. Using AI, it detects issues like slow loading times, memory leaks, and inefficient database queries during interactive previews. It then offers actionable suggestions, such as refactoring components or adding caching strategies. Performance is quantified as a score (0–100), allowing you to track how your changes affect responsiveness.

Backend advancements have brought impressive results: notification delays reduced by 100x, screen load times cut by 86% for datasets with 5,000 records through progressive loading, and database performance improved with automated indexing and optimized count logic. These tools not only address performance but also stabilize tests against UI changes, cutting down on maintenance time. Together, they enable testing that closely mirrors real user behavior.

Integrated Testing Tools for Real Scenarios

Adalo's testing environment is built directly into the platform, allowing you to simulate various scenarios effortlessly. The Preview feature provides instant feedback on your app's logic and design. You can test push notifications between devices, verify authentication flows, and assess compatibility with data sources like Airtable, Google Sheets, and PostgreSQL.

The platform also flags common performance drains, such as excessive API calls, overly nested components, and retrieving unnecessary database records. For example, automated image compression improved loading times by about 5.5x (from 6.32 seconds to 1.15 seconds), and component download speeds for web apps now average 165.92 ms, thanks to the Amazon CloudFront CDN. While final validation should always include testing on actual devices, Adalo's tools catch most issues early - when fixes are faster and less costly to implement.

Conclusion

Real-time testing comes with its fair share of hurdles - think device fragmentation, unpredictable network conditions, and performance hiccups that can drive users away.

Tackling these issues requires smart, efficient solutions. For instance, AI-powered automation can catch errors that manual testing might overlook. Cloud-based environments open the door to thousands of device combinations without the need for costly hardware. Responsive design testing ensures apps work seamlessly across different devices, and prioritizing test cases based on user analytics focuses efforts where they matter most.

Adalo takes these challenges head-on with its single-build architecture, which syncs updates instantly across platforms. Its X-Ray feature leverages AI to flag performance issues early, while built-in testing tools let you simulate key scenarios - like push notifications or authentication flows - right within the platform.

FAQs

How can I reduce sync delays when testing real-time apps across platforms?

To cut down on sync delays during real-time app testing, event-driven synchronization is a smart choice. When user actions are treated as events, the app can send each update as soon as it happens and sync any pending events when it reconnects. This method helps reduce lag and keeps data consistent across platforms.

It’s also a good idea to test your app under different network conditions. This can help you spot and fix potential bottlenecks early. Adding offline-first functionality is another way to keep the app running smoothly, even when the connection is unreliable.
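A minimal sketch of the event-driven, offline-first idea: events are sent immediately while online, queued while offline, and flushed in order on reconnect. The `EventSync` class and its `send` callback are hypothetical; a real app would also need retries and conflict handling, which are left out here.

```python
from collections import deque
from typing import Callable


class EventSync:
    """Send events immediately when online; queue them while offline."""

    def __init__(self, send: Callable[[dict], None]) -> None:
        self._send = send  # hypothetical transport, e.g. an API call
        self._pending: deque[dict] = deque()
        self.online = True

    def emit(self, event: dict) -> None:
        if self.online:
            self._send(event)
        else:
            self._pending.append(event)

    def reconnect(self) -> None:
        """Go back online and flush queued events in their original order."""
        self.online = True
        while self._pending:
            self._send(self._pending.popleft())

    @property
    def pending_count(self) -> int:
        return len(self._pending)


delivered: list[dict] = []
sync = EventSync(send=delivered.append)

sync.emit({"id": 1, "action": "like"})     # sent right away
sync.online = False                        # simulate a dropped connection
sync.emit({"id": 2, "action": "comment"})  # queued
sync.emit({"id": 3, "action": "share"})    # queued
sync.reconnect()                           # flushes events 2 and 3 in order

print([event["id"] for event in delivered])
```

Preserving the original order on flush is what keeps the server's view consistent with what the user actually did while offline.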

How can developers address device fragmentation during app testing?

Device fragmentation can make it tricky to ensure your app works smoothly across all devices and operating systems. A good starting point is to test your app on a variety of devices, screen sizes, and OS versions. This helps you identify and fix compatibility problems early on. Using responsive and adaptive design is also crucial - it allows your app's layout to adjust automatically to different screen dimensions, ensuring it stays user-friendly and visually consistent.

To extend your testing efforts, take advantage of device emulators and simulators. These tools let you evaluate your app's performance across different configurations without needing physical access to every device. By combining physical testing with virtual tools, you can create a more reliable and polished experience for all users.

How can network variability impact app performance during testing?

Network variability can impact app performance in several ways, including slower data transfer, increased latency, and inconsistent responsiveness. These challenges can result in longer load times, delayed interactions, or even failures in data synchronization. Such disruptions can frustrate users and make it difficult to gauge the app's actual performance.

By testing under different network conditions - like slow, unstable, or fluctuating connections - developers gain insight into how their app performs in practical scenarios. Simulating these environments during testing ensures the app remains functional and user-friendly, even when bandwidth is limited. This step is essential for creating an app that delivers a consistent experience, no matter the network quality.
