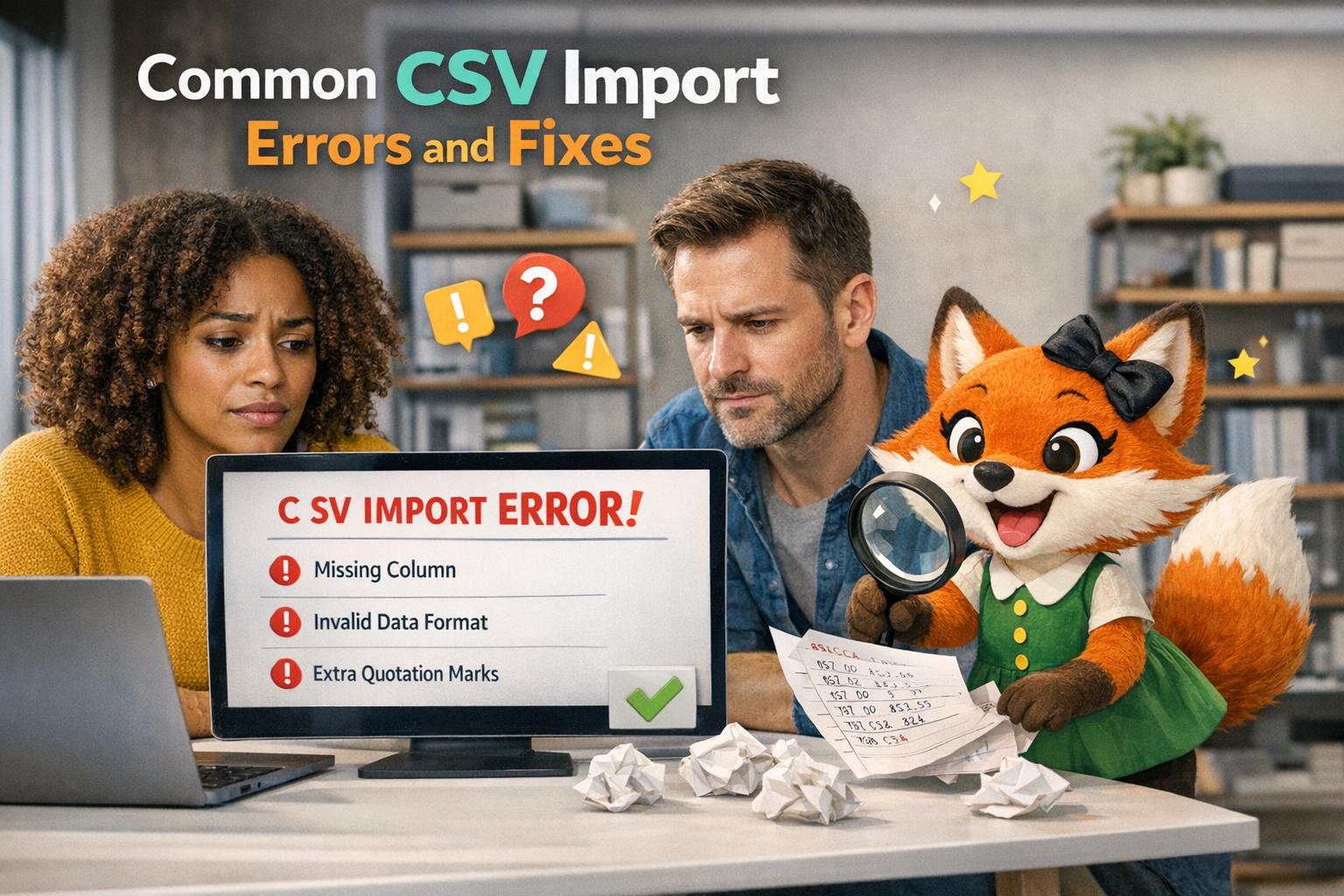

CSV imports are a lifesaver for managing large datasets, but they often fail due to simple errors. Here’s what you need to know to avoid the most common issues:

- File Size Limits: Keep files under 10,000 records for smoother imports. Hidden data in empty rows or columns can inflate file sizes, so clean up your file before uploading.

- Wrong File Formats: Use .csv files with UTF-8 encoding. Formats like .xls or .txt will fail unless properly converted.

- Header and Column Mismatches: Ensure your column headers match the database fields. Extra spaces, merged cells, or missing headers can cause errors.

- Data Type Errors: Numbers shouldn't include symbols like $ or commas, dates must follow formats like MM/DD/YYYY, and boolean values should be "true" or blank.

- Encoding Issues: Garbled characters (e.g., "Ã©" in place of "é") signal encoding problems. Save your file as UTF-8 or use tools like Google Sheets to re-export it.

Quick Tips:

- Test with 50 records before a full upload.

- Break large datasets into smaller chunks of 10,000 rows or fewer.

- Use plain-text editors to spot hidden issues like unescaped quotes or mixed delimiters.

Since an estimated 72% of CSV import failures stem from invalid file structures, following these steps will help you avoid the most common errors and keep your uploads hassle-free.

Common CSV Import Errors and How to Fix Them - Quick Reference Guide

File Size and Format Problems

File Size Exceeds Limits

Oversized CSV files can often prevent successful imports. Adalo advises breaking your data into batches of 10,000 records or fewer to improve the chances of a smooth import process. For context, Google Sheets limits imports to 100MB or 10 million cells, Microsoft Excel allows up to 1,048,576 rows, and Apple Numbers supports up to 1 million rows.
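If you prefer to split a large file with a script rather than by hand, here is a minimal sketch using Python's standard csv module. It assumes a UTF-8 file with a single header row, which it repeats at the top of every batch; the function names and the `_partN.csv` naming scheme are illustrative, not part of Adalo.

```python
import csv
import io
from pathlib import Path
from typing import List

# Illustrative helpers (hypothetical names), not an Adalo API.


def _render(header: List[str], rows: List[List[str]]) -> str:
    """Serialize a header plus data rows back to CSV text."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buf.getvalue()


def split_csv_text(text: str, batch_size: int = 10_000) -> List[str]:
    """Split CSV text into chunks of at most `batch_size` data rows each."""
    reader = csv.reader(io.StringIO(text, newline=""))
    header = next(reader, None)
    if header is None:
        return []  # empty input: nothing to split
    chunks, batch = [], []
    for row in reader:
        batch.append(row)
        if len(batch) == batch_size:
            chunks.append(_render(header, batch))
            batch = []
    if batch:  # leftover rows that did not fill a whole batch
        chunks.append(_render(header, batch))
    return chunks


def split_csv_file(path: str, batch_size: int = 10_000) -> List[Path]:
    """Write <stem>_part1.csv, <stem>_part2.csv, ... next to the source file."""
    src = Path(path)
    with src.open(encoding="utf-8", newline="") as f:
        chunks = split_csv_text(f.read(), batch_size)
    outputs = []
    for i, chunk in enumerate(chunks, start=1):
        out = src.with_name(f"{src.stem}_part{i}.csv")
        with out.open("w", encoding="utf-8", newline="") as f:
            f.write(chunk)
        outputs.append(out)
    return outputs
```

For example, `split_csv_file("customers.csv", batch_size=50)` would produce small files suitable for a first test import. Parsing with the csv module, rather than splitting on line breaks, keeps quoted values that contain commas or line breaks intact.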

One common culprit behind inflated file sizes is hidden "ghost" data - those seemingly empty rows or columns that still take up space in your file. These can lead to parsing errors if not cleaned up properly.
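Ghost rows and columns can be removed with a short script. This sketch (the function name is hypothetical) treats a row as a ghost when every cell is blank, and a column as a ghost only when its header and all of its values are blank, so real columns with a few empty cells survive.

```python
import csv
import io

# Hypothetical helper name; not an Adalo feature.


def strip_ghost_data(text: str) -> str:
    """Remove fully blank rows and fully blank columns from CSV text."""
    rows = list(csv.reader(io.StringIO(text, newline="")))
    # Drop rows in which every cell is empty or whitespace-only.
    rows = [row for row in rows if any(cell.strip() for cell in row)]
    if not rows:
        return ""
    # Pad short rows so every row has the same number of cells.
    width = max(len(row) for row in rows)
    rows = [row + [""] * (width - len(row)) for row in rows]
    # Keep only columns with at least one non-blank cell (header included).
    keep = [i for i in range(width) if any(row[i].strip() for row in rows)]
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for row in rows:
        writer.writerow([row[i] for i in keep])
    return buf.getvalue()
```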

If your dataset exceeds 10,000 records, it’s better to manually convert Excel files to CSV instead of relying on server-side conversion, which can be slower and prone to errors. Additionally, Adalo’s database mapper lets you reduce processing strain by selecting "None" for any unnecessary CSV columns.

Wrong File Formats

When working with platforms like Adalo, your files must have a .csv extension and use UTF-8 encoding. Uploading files in formats like .txt or .xls/.xlsx without converting them first will cause immediate failures. If you notice garbled characters - often described as "alien hieroglyphics" - it’s a sign that the file was saved with the wrong encoding.

To save your file in the correct format:

- Microsoft Excel (2016 or later): Go to File > Save As and choose CSV UTF-8 (Comma delimited) (*.csv) from the dropdown menu.

- Google Sheets: Click File > Download > Comma Separated Values (.csv) to ensure UTF-8 encoding.

- If the encoding is still incorrect, open the file in a plain-text editor like Notepad or TextEdit, select Save As, and choose UTF-8 encoding.
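If you'd rather fix the encoding in a script, this sketch tries UTF-8 first and falls back to Windows-1252 (cp1252), the code page that Excel's plain "CSV (Comma delimited)" option typically uses on Western-language Windows systems. The fallback is an assumption about where your file came from; a file that already decodes as valid UTF-8 is left as-is, and the function names are illustrative.

```python
from pathlib import Path

# Illustrative helper names; the cp1252 fallback is an assumption.


def to_utf8_text(raw: bytes, fallback: str = "cp1252") -> str:
    """Decode CSV bytes, preferring UTF-8 and falling back to a legacy encoding.

    "utf-8-sig" also accepts plain UTF-8 and drops a leading BOM if present.
    """
    try:
        return raw.decode("utf-8-sig")
    except UnicodeDecodeError:
        return raw.decode(fallback)


def convert_to_utf8(src: str, dst: str, fallback: str = "cp1252") -> None:
    """Read src in whatever encoding it has and write dst as UTF-8 (no BOM)."""
    text = to_utf8_text(Path(src).read_bytes(), fallback)
    # newline="" writes line endings back exactly as decoded.
    with open(dst, "w", encoding="utf-8", newline="") as f:
        f.write(text)
```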

"Importing data files like CSVs and Excel spreadsheets isn't for the faint of heart. There are plenty of things that can go wrong in the data import process, but luckily, these common errors are easy to fix." – Anne Bonner, Writer, Flatfile

Up next, we’ll dive into how data structure and mapping issues can complicate CSV imports even further.

Data Structure and Mapping Problems

Column and Header Mismatches

CSV uploads can be tricky, especially when your file's headers don't align with your database structure. In fact, over 50% of self-service CSV upload failures stem from this issue. The usual suspects? Missing header rows, extra spaces in column names, or merged cells left over from Excel formatting. Even a Byte Order Mark (BOM) in UTF-8 files can throw off the first column name, causing the import to fail.
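Before mapping, you can catch header problems programmatically. The sketch below assumes you know the field names of your Adalo collection (the names in the example are hypothetical). It strips a BOM character and stray spaces from each header and lists missing, unexpected, and blank columns. Since Adalo lets you map columns manually, treat the output as a checklist rather than a hard failure.

```python
import csv
import io
from typing import Dict, List

# Hypothetical helper; expected field names come from your own collection.


def check_headers(text: str, expected: List[str]) -> Dict[str, list]:
    """Compare a CSV header row against the field names you expect."""
    raw = next(csv.reader(io.StringIO(text, newline="")), [])
    # Remove a UTF-8 BOM character and surrounding spaces from each name.
    cleaned = [name.replace("\ufeff", "").strip() for name in raw]
    return {
        "headers": cleaned,
        "needs_cleanup": [name for name, c in zip(raw, cleaned) if name != c],
        "blank": [i + 1 for i, name in enumerate(cleaned) if not name],
        "missing": [f for f in expected if f not in cleaned],
        "unexpected": [name for name in cleaned if name and name not in expected],
    }
```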

Adalo simplifies this process by automatically matching CSV columns to database fields - but you’re not locked into exact name matches. During the import, you can manually assign any CSV column to a database field using dropdown menus. For instance, if your CSV has a column titled "Customer Name" but your database calls it "Full Name", you can easily pair them. Not using a column? Select "None" to skip it. Double-check your mappings to avoid errors.

Relationship fields require extra attention. For one-to-many relationships, the value in your CSV must perfectly match the "First Property" (the label field) of the related record. For example, if you're linking orders to customers and the "First Property" in your customer collection is "Email", your CSV must include the exact email address - not a customer ID or name.
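One way to check relationship values before importing is to compare them against the related collection's first-property values, for example a list of customer emails exported from Adalo. This sketch (the function name is hypothetical) flags every value without an exact match and suggests a fix when the only difference is letter case or surrounding spaces. Treating blank cells as "no related record" is an assumption.

```python
from typing import Dict, Iterable, Optional

# Hypothetical helper; first_property_values is your own exported list.


def check_relationship_values(
    values: Iterable[str], first_property_values: Iterable[str]
) -> Dict[int, Optional[str]]:
    """Return {csv_row_number: suggestion} for values with no exact match.

    The suggestion is the related value that matches after ignoring case and
    surrounding spaces, or None if nothing is close. Row numbers assume row 1
    is the header and that no field spans several lines.
    """
    targets = list(first_property_values)
    exact = set(targets)
    loose = {t.strip().lower(): t for t in targets}
    report: Dict[int, Optional[str]] = {}
    for row_number, value in enumerate(values, start=2):
        if value == "" or value in exact:
            continue  # blank (assumed: no related record) or exact match
        report[row_number] = loose.get(value.strip().lower())
    return report
```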

To make things easier, download a sample CSV from your Adalo database view. This gives you a clear template to format your data correctly. Once your headers are aligned, double-check field mappings to prevent further issues.

Field Mapping Problems

Mapping errors can misplace data, such as putting text in number fields or leaving required fields blank. Structure matters just as much: around 72% of CSV import failures are attributed to invalid file structures.

Each property type in Adalo has specific formatting requirements:

- Numbers: Digits, a decimal point, and a leading minus sign only; no currency symbols, commas, or letters.

- Booleans: Use "true" or leave blank.

- Dates: Stick to MM/DD/YYYY or YYYY-MM-DD formats.

- Date and Time: Use ISO 8601 format, like 2022-07-04T02:00:00Z.

- Location: Enter full, comma-separated addresses.

"CSV is not actually a standardized format... there is always a chance that your file may be a bit 'off' from what your import tool was expecting." – CSV Loader

For large datasets, break your CSV into smaller chunks of 10,000 rows or fewer. Massive imports - like 100,000 records - can take hours to process (one example took about 3 hours). Taking the time to format and map fields correctly will save you headaches and ensure smooth CSV imports with Adalo's tools.

Data Type and Content Problems

Inconsistent Data Types

CSV files store information as plain text, which can lead to conversion errors when mismatched data types appear in specific fields. For instance, if text ends up in a numeric or boolean field, the import process will fail. Common culprits include formatting symbols - like dollar signs in prices, commas in large numbers, or dashes in phone numbers. Adalo's Number properties only accept whole numbers, decimals, or negatives, strictly excluding symbols or letters.

Date fields are equally strict. Writing "July 31st 2020" instead of "07/31/2020" or "2020-07-31" will cause the parser to fail. For date-and-time fields, stick to the ISO 8601 format, such as 2022-07-04T02:00:00Z.
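Dates written in prose or with ordinal suffixes can be rewritten before upload. This sketch converts a few assumed input layouts to YYYY-MM-DD, and converts a local date-time with a known UTC offset to ISO 8601 in UTC. It reads slashed dates as MM/DD/YYYY, so DD/MM data would need a different format list, and month names are parsed in Python's default English (C) locale. Function names are hypothetical.

```python
import re
from datetime import datetime, timedelta, timezone

# Input layouts this sketch recognizes (an assumption); extend for your data.
INPUT_FORMATS = ["%m/%d/%Y", "%Y-%m-%d", "%B %d %Y", "%B %d, %Y"]


def normalize_date(value: str) -> str:
    """Convert a date string to YYYY-MM-DD (hypothetical helper)."""
    # "July 31st 2020" -> "July 31 2020": strptime cannot parse ordinals.
    cleaned = re.sub(r"(\d+)(st|nd|rd|th)\b", r"\1", value.strip())
    for fmt in INPUT_FORMATS:
        try:
            return datetime.strptime(cleaned, fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date: {value!r}")


def to_iso_utc(value: str, fmt: str, utc_offset_hours: float) -> str:
    """Convert a local date-time string to ISO 8601 in UTC, ending in Z.

    A fixed UTC offset is an assumption: it ignores daylight saving changes.
    """
    tz = timezone(timedelta(hours=utc_offset_hours))
    local = datetime.strptime(value.strip(), fmt).replace(tzinfo=tz)
    return local.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
```

For example, `to_iso_utc("07/03/2022 22:00", "%m/%d/%Y %H:%M", -4)` treats the value as 10 PM at UTC-4 and returns the same instant as `2022-07-04T02:00:00Z`.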

Hidden spaces can also wreak havoc. For example, a cell that looks like "John" might actually contain " John " with invisible spaces, leading to failed relationship matches. Regional formatting differences add another layer of difficulty: a value written as 1.234,56 (period for thousands, comma for decimals) can be misread or rejected by a system that expects 1234.56.

To avoid these issues, clean up your data before uploading:

- Remove currency symbols, thousands separators, and other non-numeric characters from number columns, and trim extra spaces from every cell.

- Use only "true" or leave cells blank for boolean fields.

- Standardize all dates to MM/DD/YYYY or YYYY-MM-DD.
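The number cleanup above can be scripted. This sketch (the function name is hypothetical) strips currency symbols, spaces, and thousands separators, with an opt-in flag for regional formats that use a comma as the decimal separator. It deliberately does not strip letters, so a value like "12 apples" is reported rather than silently turned into 12.

```python
import re

# Currency symbols and whitespace (including non-breaking spaces) to drop.
_STRIP = re.compile(r"[\s$€£¥]")
# What remains must be a whole, decimal, or negative number.
_NUMBER = re.compile(r"-?\d+(\.\d+)?")


def normalize_number(value: str, decimal_comma: bool = False) -> str:
    """Turn strings like " $1,234.50 " into "1234.50" (hypothetical helper)."""
    s = _STRIP.sub("", value)
    if decimal_comma:
        s = s.replace(".", "").replace(",", ".")  # 1.234,56 -> 1234.56
    else:
        s = s.replace(",", "")  # 1,234.56 -> 1234.56
    if not _NUMBER.fullmatch(s):
        raise ValueError(f"Not a plain number: {value!r}")
    return s
```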

Beyond mismatched data types, incomplete or invalid values can also disrupt CSV imports.

Missing or Invalid Data

Empty fields in required columns will trigger a "Missing required field" error, halting the import. Sometimes, the issue isn't immediately obvious - data might look complete but contain invalid values that don't match the expected format.
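A quick pre-flight check for blank required cells might look like this. Which columns are required depends on your collection; the column and function names here are hypothetical, and whitespace-only cells are treated as blank.

```python
import csv
import io
from typing import List, Tuple

# Hypothetical helper; pass in the columns your collection requires.


def find_missing_required(text: str, required: List[str]) -> List[Tuple[int, str]]:
    """List (line_number, column) for every blank required cell.

    line_number is the source line on which the record ends.
    """
    reader = csv.DictReader(io.StringIO(text, newline=""))
    problems = []
    for row in reader:
        for column in required:
            # .get() returns None for short rows or a missing column.
            if not (row.get(column) or "").strip():
                problems.append((reader.line_num, column))
    return problems
```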

To catch these errors, open your CSV in a plain-text editor like Notepad or TextEdit. Unlike spreadsheet software such as Excel, text editors display raw data, revealing hidden issues like inconsistent delimiters, unclosed quotes, or strange characters (e.g., "Ã©" or random boxes). These often stem from encoding problems that need fixing before the import.
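Alongside inspecting the file by eye, a field-count check catches many structural problems: stray commas, unescaped quotes, and mixed delimiters often surface as rows with more or fewer fields than the header. A minimal sketch, with a hypothetical function name:

```python
import csv
import io
from typing import List, Tuple

# Hypothetical helper name.


def find_ragged_rows(text: str) -> Tuple[int, List[Tuple[int, int]]]:
    """Return the header width and (line_number, field_count) for each row
    whose field count differs from the header's."""
    reader = csv.reader(io.StringIO(text, newline=""))
    header = next(reader, [])
    issues = []
    for row in reader:
        if row and len(row) != len(header):  # skip completely empty lines
            # line_num is the source line on which this record ends.
            issues.append((reader.line_num, len(row)))
    return len(header), issues
```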

Modern import tools, including Adalo's, provide real-time error feedback. They highlight problematic cells with messages like "Invalid date format" or "Expected a number", making it easier to pinpoint and resolve issues. Adalo's import tool demands precise data inputs, so standardized formats and complete fields are essential. For relationship fields, the value in your CSV must exactly match the "First Property" of the related record. Even minor details like case sensitivity or extra spaces can cause errors.

To ensure your data is ready for import:

- Use the template provided by your target system to align column order and data types correctly.

- For large datasets, import in batches of 10,000 records or fewer. This approach isolates errors and avoids system timeouts.

- Delete any blank rows at the end of your file to prevent "empty column" errors.

| Property Type | Required Format for Adalo CSV Imports | Common Error to Avoid |
|---|---|---|
| Number | Whole, decimal, or negative only | Including currency symbols or letters |
| True/False | "true" or [blank] for false | Using "Yes/No" or "1/0" |
| Date | MM/DD/YYYY or YYYY-MM-DD | Inconsistent formats within the same column |
| Date & Time | ISO 8601 (e.g., 2022-07-04T02:00:00Z) | Using local time strings without UTC offsets |
| Location | Full comma-separated: Street, City, State, ZIP, Country | Missing components like ZIP or Country |
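The rules in the table can be expressed as simple per-column checks. In this sketch, the type labels (`number`, `true_false`, and so on) are our own names rather than Adalo identifiers, the function names are hypothetical, and the location check is only a rough heuristic that counts comma-separated parts.

```python
import re
from datetime import datetime
from typing import Callable, Dict, List

# ISO 8601 date-time with an explicit "Z" or +/-HH:MM offset.
_ISO_UTC = re.compile(r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(\.\d+)?(Z|[+-]\d{2}:\d{2})")


def _is_date(value: str) -> bool:
    """Accept MM/DD/YYYY or YYYY-MM-DD and reject impossible dates."""
    for fmt in ("%m/%d/%Y", "%Y-%m-%d"):
        try:
            datetime.strptime(value, fmt)
            return True
        except ValueError:
            pass
    return False


# Type labels are illustrative, not Adalo identifiers.
VALIDATORS: Dict[str, Callable[[str], bool]] = {
    "number": lambda v: re.fullmatch(r"-?\d+(\.\d+)?", v) is not None,
    "true_false": lambda v: v in ("true", ""),
    "date": _is_date,
    "date_time": lambda v: _ISO_UTC.fullmatch(v) is not None,
    # Heuristic: street, city, state, ZIP, country -> at least 5 parts.
    "location": lambda v: len([p for p in v.split(",") if p.strip()]) >= 5,
}


def validate_row(row: Dict[str, str], schema: Dict[str, str]) -> List[str]:
    """Check one row (e.g. from csv.DictReader) against {column: type}."""
    errors = []
    for column, kind in schema.items():
        value = row.get(column) or ""
        if value == "" and kind != "true_false":
            continue  # blanks are left to a separate required-field check
        if not VALIDATORS[kind](value):
            errors.append(f"{column}: {value!r} is not a valid {kind}")
    return errors
```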

Encoding and Parsing Failures

Wrong Encoding

If your CSV file displays strange characters like "Ã©" instead of "é", you're likely dealing with an encoding issue: the file is being read with a different encoding than the one it was saved in. This often happens when an older format is involved, such as ASCII (which cannot store accented characters at all) or Windows-1252, instead of UTF-8, which is the standard for most modern systems.

"If it's missing special characters, or the text/characters look like alien hieroglyphics, this is almost always an encoding problem." - CSV Loader

To fix this, open the file in a text editor like Notepad (Windows) or TextEdit (Mac), select "Save As", and choose UTF-8 as the encoding format. If you're using Microsoft Excel 2016 or later, save the file as "CSV UTF-8 (Comma delimited)." Another quick solution is to upload the file to Google Sheets and download it again as a CSV, which automatically applies UTF-8 encoding.

Sometimes, a UTF-8 BOM (Byte Order Mark) can cause the first column header to display incorrectly. To address this, use an advanced text editor to save the file as "UTF-8 without BOM."
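If your editor doesn't offer a "UTF-8 without BOM" option, the BOM can be removed at the byte level. A minimal sketch; the function names are illustrative:

```python
import codecs
from pathlib import Path

# Illustrative helper names.


def strip_bom(raw: bytes) -> bytes:
    """Drop a leading UTF-8 byte order mark (EF BB BF), if there is one."""
    if raw.startswith(codecs.BOM_UTF8):
        return raw[len(codecs.BOM_UTF8):]
    return raw


def strip_bom_in_place(path: str) -> bool:
    """Rewrite the file without its BOM; return True if anything changed."""
    p = Path(path)
    data = p.read_bytes()
    cleaned = strip_bom(data)
    if cleaned != data:
        p.write_bytes(cleaned)
    return cleaned != data
```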

Malformed CSV Structure

Even with proper encoding, structural errors in the CSV file can still cause issues. One common problem is unescaped quotes. For example, if a cell contains text like This has a "quote" inside, the parser might misinterpret the internal quote as the end of the field, leading to misaligned data or an "Unterminated quoted field" error. To resolve this, wrap the whole field in double quotes and double each quote inside it: "This has a ""quote"" inside".
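Rather than escaping quotes by hand, you can let a CSV library do it. Python's csv.writer, with its default settings, wraps fields that contain the delimiter, a quote, or a line-break character in quotes and doubles any embedded quotes, as this sketch shows (the helper name is hypothetical):

```python
import csv
import io
from typing import List

# Hypothetical helper name.


def to_csv_text(rows: List[List[str]]) -> str:
    """Serialize rows with RFC 4180-style quoting and CRLF line endings.

    The defaults (QUOTE_MINIMAL, doublequote=True) quote only fields that
    need it and write each embedded quote as two quotes.
    """
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\r\n").writerows(rows)
    return buf.getvalue()
```

Calling `to_csv_text([["id", "note"], ["1", 'This has a "quote" inside']])` yields the escaped field `"This has a ""quote"" inside"`, and reading the result back with csv.reader restores the original text.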

Mixed delimiters are another frequent culprit. If some rows use commas while others use semicolons, the file might fail to import correctly. Use your text editor's "Find and Replace" feature to standardize all delimiters to commas, taking care not to replace semicolons that belong inside quoted values. Similarly, use one line-break style throughout (either \n or \r\n).
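Find and Replace works when a whole file uses one delimiter, but it can break values that legitimately contain commas or semicolons. For files that mix delimiters row by row, this sketch tries each candidate delimiter per line and keeps the one that produces the expected number of fields, refusing to guess when zero or several different parses fit. It assumes no quoted field spans multiple lines; the function name is hypothetical.

```python
import csv
import io
from typing import Sequence

# Hypothetical helper; assumes no quoted field contains a line break.


def unify_delimiters(
    text: str, expected_fields: int, candidates: Sequence[str] = (",", ";", "\t")
) -> str:
    """Rewrite a file whose rows mix delimiters as comma-separated CSV.

    Output uses CRLF line endings throughout.
    """
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\r\n")
    # splitlines() accepts \n, \r\n, and \r, so mixed line endings are normalized.
    for line_number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # drop blank lines
        # Parse the line once per candidate delimiter; keep distinct results.
        parses = {tuple(next(csv.reader([line], delimiter=d))) for d in candidates}
        fits = [row for row in parses if len(row) == expected_fields]
        if len(fits) != 1:
            raise ValueError(f"line {line_number}: {len(fits)} parses fit, fix by hand")
        writer.writerow(fits[0])
    return out.getvalue()
```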

According to industry research, 72% of CSV import failures are caused by invalid file structures that could be avoided with proper validation. Viewing the file in a plain-text editor can help you spot hidden issues like inconsistent line breaks, unquoted tabs, or extra commas - problems that spreadsheet software might obscure. For a quick fix, import the file into Excel and re-save it as "CSV UTF-8", which often corrects quote escaping and normalizes line breaks automatically.

| Issue Type | Symptom | Fix |
|---|---|---|
| Wrong Encoding | Garbled text (e.g., "Ã©" instead of "é", unexpected symbols) | Re-save as UTF-8 using a text editor or Excel |
| BOM Issue | Misread first column header or odd characters | Save as "UTF-8 without BOM" |
| Unescaped Quotes | Data misalignment; "Unterminated quoted field" error | Wrap the field in quotes and replace each " inside it with "" |
| Mixed Delimiters | Misaligned data across rows | Standardize all delimiters to commas |
| Line Breaks | Merged rows or incorrect row count | Use one line-break style throughout (\n or \r\n) |


Best Practices for Error-Free CSV Imports in Adalo

Preview and Validate Data Before Importing

To avoid common CSV import errors, it's crucial to validate your data before uploading it to Adalo. Start by opening your CSV file in a plain text editor. This allows you to spot potential troublemakers like unescaped quotes, mixed delimiters, or inconsistent line breaks - issues that can disrupt the import process. Did you know that 72% of CSV import failures stem from invalid file structures? A quick check here can save you plenty of headaches later.

Next, run a test import with just 50 records. This lets you confirm that your formatting and mapping are correct before diving into a full-scale upload. Also, clean up your data by removing any empty rows or columns.

Make sure your data follows Adalo's formatting rules. For example:

- Numbers: Stick to digits, decimals, or negative signs - no dollar signs or commas.

- Dates: Use formats like MM/DD/YYYY or YYYY-MM-DD.

- Date and Time: Follow ISO 8601 format, such as 2022-07-04T02:00:00Z.

- True/False fields: Use the word "true" for true values; leave the cell blank for false.

- Location fields: Include the full format - street, city, state, ZIP, and country.

Once your data is ready, Adalo's mapping tools will help you align it with your database structure.

Use Adalo Tools for Database Mapping

Adalo's mapping tool simplifies the import process by automatically matching your CSV columns to database properties. If the automatic pairing isn’t accurate, you can manually select the correct columns using dropdown menus. You can also exclude unnecessary columns by choosing "None". Don’t worry if your CSV column names don’t match Adalo’s property names or order - they don’t need to.

For relationship fields (One-to-Many), the values in your CSV must exactly match the "first property" (the label field) of the related collection. If you run into mapping issues, double-check that this first property is a text or number field and that your CSV values align perfectly. Keep in mind that Adalo’s importer adds new records rather than overwriting existing ones, so your current data remains safe.

When your data and mapping are set up correctly, even large-scale imports can run smoothly.

Use DreamFactory for Complex Integrations

Adalo’s native CSV importer works well for most standard imports, but when you’re handling datasets larger than 10,000 records or need more processing power, DreamFactory is your go-to tool. This platform is especially useful for managing complex or large-scale imports, allowing you to connect Adalo to external systems like ERPs or legacy databases - even those without APIs.

For teams using Adalo Blue, DreamFactory is invaluable. It enables seamless data integration without the hassle of constantly exporting and importing CSV files. Instead, it connects directly to databases like MS SQL Server and PostgreSQL, keeping your Adalo app in sync with your source data. Just note that processing a large dataset - say, 100,000 records - can take up to 3 hours, depending on the complexity of your columns. With DreamFactory, you can plan and execute these imports efficiently, making even the most challenging data migrations manageable.


Conclusion

Importing CSV files into Adalo doesn't have to be a headache. Did you know that 72% of CSV import failures happen because of invalid file structures? To avoid this, it’s essential to know what Adalo requires: files must use UTF-8 encoding, columns must map cleanly to your database fields, and data must follow specific guidelines - like numbers without symbols, dates in either MM/DD/YYYY or YYYY-MM-DD formats, and boolean values as "true" or left blank.

When importing, pay close attention to mapping your data. Relationship fields need to match the first property of the related collection exactly. Keep in mind that CSV imports in Adalo are designed to add new records. For larger datasets, break the imports into batches of 10,000 records or fewer. If your dataset exceeds 10,000 records or you need to connect to external databases, tools like DreamFactory can help. However, processing large imports - like 100,000 records - using DreamFactory can take up to 3 hours. This makes staging and planning for large imports even more critical.

Start small. Test your setup with an initial batch of 50 records. Use a plain-text editor to validate your CSV file and rely on Adalo's mapping tools to ensure everything lines up. Whether you're working with a free plan for smaller imports or a paid plan for larger ones, following these steps can save you time and frustration. With accurate data, proper formatting, and smart batching, you can turn CSV imports into a seamless and predictable process.

FAQs

What steps should I take to properly format my CSV file for import?

To prepare your CSV file for a smooth import, start by including a header row that clearly labels each column. Each field should be separated by a comma, and the data within each column should follow a consistent format.

To avoid common pitfalls, enclose any text containing commas, quotes, or line breaks in double quotes, and double any quote character inside such a field (write " as ""). Also, ensure your file is saved with UTF-8 encoding to prevent issues with invalid characters during the import process.

Before uploading, take a moment to check for empty rows or unnecessary spaces, as these can interfere with the import. A quick review can save you time and help avoid potential errors.

What should I do if my CSV import fails due to encoding problems?

If your CSV import isn’t working due to encoding problems, the first step is to verify the file’s character encoding. Make sure it’s set to a supported format like UTF-8, as unsupported encodings or special characters often lead to errors during the import process.

To resolve this, open the CSV file using a text editor or a spreadsheet tool that lets you adjust encoding settings. Then, save or export the file again, ensuring you select UTF-8 encoding. This adjustment typically fixes the issue and allows the import to go through without any hiccups.

What should I do if my dataset exceeds the 10,000 record limit when importing a CSV file?

If your dataset contains more than 10,000 records, break your CSV file into smaller chunks of 10,000 records or fewer each. After splitting, upload each file individually. This keeps each import within system limits and makes errors easier to isolate.
