Internal tools are essential for streamlining workflows, managing data, and reducing errors. But as businesses grow, these tools often face scaling challenges - slower performance, manual fixes, or even system failures. The solution? Build for scalability from the start. Here’s what you need to know:

- Plan ahead: Define performance needs like user load and peak traffic before development begins.

- Modular design: Break tools into independent components to simplify updates and handle growth.

- Optimize performance: Use server-side processing, caching, and auto-scaling to maintain speed.

- Security first: Implement Role-Based Access Control (RBAC) and Single Sign-On (SSO) for streamlined, secure access.

- Integrate smartly: Connect to databases and legacy systems efficiently, avoiding data silos.

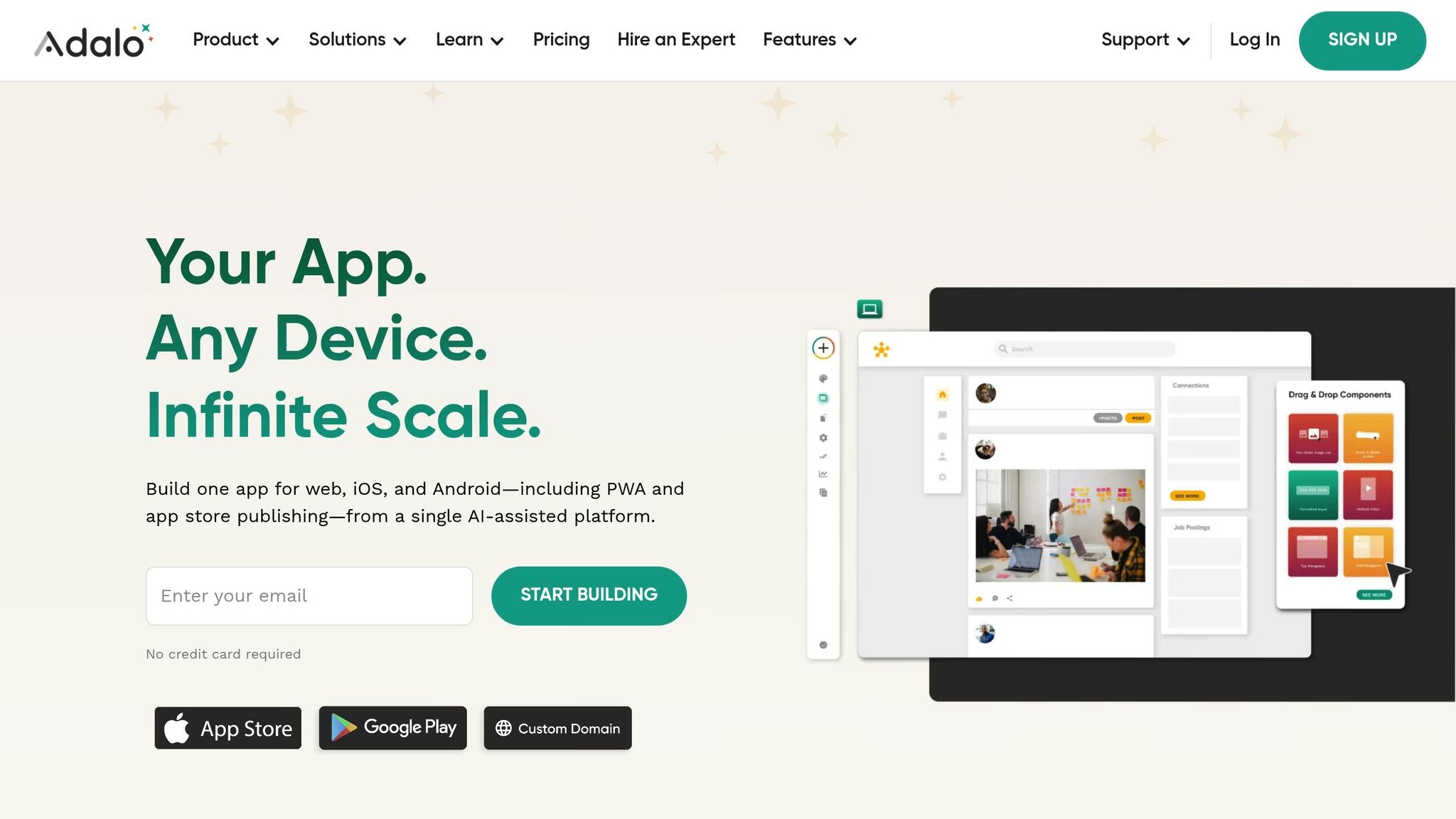

Platforms like Adalo simplify this process with AI-driven app creation, unified builds for web and mobile, and enterprise-grade features like SSO and RBAC. Whether you’re building dashboards or approval workflows, starting with scalable architecture ensures your tools grow with your business - without costly overhauls.

Planning for Scalability from the Start

(Figure: Custom Development vs App Builder Platforms Comparison for Internal Tools)

Scalability problems often sneak up on you. They don’t usually appear during development but show up when your internal tool is put to the test - like when user numbers spike, data triples, or another department starts relying on the system. By the time these issues arise, fixing them can mean rewriting large chunks of code, migrating databases, or even reverting to manual processes.

The best way to avoid these headaches? Start planning for scalability before you write a single line of code. Early on, define both functional and non-functional requirements. This means not just figuring out what the tool needs to do, but how it should perform under pressure. For example, consider expected user loads, peak request rates, and even seasonal traffic patterns. If your tool is designed for onboarding, think ahead: what happens when your company doubles its workforce in six months? Planning for growth from the beginning ensures your tools can handle the pressure as the organization expands.

Another key strategy is designing independent scale units. If your tool handles multiple functions, like inventory and customer support, make sure a surge in one area doesn’t slow down the others. Map these units to critical workflows to ensure that any issues stay contained and don’t ripple across the system.
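One common way to implement independent scale units inside a single service is the bulkhead pattern. Each unit gets its own concurrency budget, so a backlog in one cannot starve the others. The TypeScript sketch below assumes in-process async tasks. The `Bulkhead` class and the limit of 2 are illustrative and not taken from any particular platform. Across machines, the same idea is usually applied with separate queues, worker pools, or deployments per unit.

```typescript
// Bulkhead: a per-unit concurrency limit. A surge in one unit only queues behind itself.
class Bulkhead {
  private active = 0;
  private readonly waiting: Array<() => void> = [];

  constructor(
    readonly name: string,
    private readonly maxConcurrent: number,
  ) {}

  get inFlight(): number {
    return this.active;
  }

  async run<T>(task: () => Promise<T>): Promise<T> {
    if (this.active < this.maxConcurrent) {
      this.active++; // a free slot in this unit: take it immediately
    } else {
      // Wait for a slot in *this* unit only; other units are unaffected.
      await new Promise<void>((resolve) => this.waiting.push(() => resolve()));
    }
    try {
      return await task();
    } finally {
      const next = this.waiting.shift();
      if (next) next(); // hand the slot straight to the next queued task
      else this.active--;
    }
  }
}

// One bulkhead per scale unit; the limits are illustrative.
const inventory = new Bulkhead("inventory", 2);
const support = new Bulkhead("support", 2);
```

Handing the slot directly to the next waiter, rather than freeing it and letting callers race for it, guarantees the limit is never exceeded.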

Performance thresholds are another must. For instance, queries that take longer than 5 seconds frustrate users, and trying to display over 1,000 rows in a client-side table can cause performance hiccups. Address these limits during the design phase to avoid complaints later. As Microsoft wisely points out:

"Whenever possible, use industry-proven solutions instead of developing your own."

It’s also important to stay on top of technical debt. Some debt is inevitable, but you can manage it by scheduling regular work to address platform updates before they turn into emergencies. Establish governance early, standardize your version control and deployment processes, and prioritize testability. This way, your tools grow with your business instead of becoming a bottleneck that, by some estimates, could cost you up to 28% of potential revenue.

Mapping Workflows and User Requirements

Start by talking to the people who will actually use the tool. Conduct interviews across teams to understand their daily tasks, challenges, and what they really need from the system. Don’t just take requests at face value - observe how they work. For example, a sales team might ask for a data-heavy dashboard, but they may only use a handful of key metrics that need to load quickly.

Determine whether users need full CRUD functionality (Create, Read, Update, Delete) or just visual analysis. CRUD-heavy tasks, like managing invoices, require forms and table interfaces. In contrast, teams looking for insights - like executives reviewing quarterly performance - benefit more from clean, fast-loading visualizations.

Capacity planning also plays a big role. Don’t guess how many users your tool will support; calculate it. If you currently have 200 employees but expect to add 500 more next year, design for 700 users, not just today’s 200, and size the system for the share of them who will be active at the same time. Also consider geographic distribution. Network latency between availability zones within a single cloud region is usually under 2 milliseconds, but globally distributed teams may need a different approach to keep data synchronization smooth.
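Headcount is not the same as concurrency. Below is a minimal sketch of turning a projected headcount into a concurrent-user target. The peak-concurrency ratio, seasonal multiplier, and headroom are illustrative assumptions. Replace them with figures from your own usage data.

```typescript
// All ratios are illustrative assumptions; replace them with your own measurements.
interface CapacityInputs {
  currentUsers: number; // people with access today
  plannedNewUsers: number; // hires expected in the planning window
  peakConcurrencyRatio: number; // share of all users active at the busiest moment (0..1)
  seasonalMultiplier: number; // e.g. month-end load relative to a normal peak
  headroom: number; // safety margin, e.g. 0.25 for 25%
}

function concurrentUserTarget(c: CapacityInputs): number {
  const projectedUsers = c.currentUsers + c.plannedNewUsers;
  const peakConcurrent = projectedUsers * c.peakConcurrencyRatio * c.seasonalMultiplier;
  return Math.ceil(peakConcurrent * (1 + c.headroom));
}

const target = concurrentUserTarget({
  currentUsers: 200,
  plannedNewUsers: 500,
  peakConcurrencyRatio: 0.5,
  seasonalMultiplier: 1.5,
  headroom: 0.25,
});
```

With 200 current and 500 planned users and these illustrative ratios, the target works out to 657 concurrent users. Setting the ratio and multiplier to 1 and the headroom to 0 reproduces the plain headcount of 700, which is the worst case where everyone is online at once.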

Workflows evolve, too. A simple two-person expense approval process at a startup might grow into a multi-department workflow. Building modular components that can adapt without rewriting the core logic makes it easier to adjust when new steps or regulations come into play.
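One way to get that adaptability is to keep the approval chain in data rather than in code. In this sketch, the startup’s single-approver flow and a later multi-department flow both run through the same function, so adding a step is a configuration change. Role names and the `minAmount` field are hypothetical.

```typescript
// Approval steps are data, not code: adding a department means adding an entry.
interface ApprovalStep {
  approverRole: string;
  minAmount?: number; // hypothetical rule: the step applies only at or above this amount
}

interface ExpenseRequest {
  amount: number;
}

// The core logic never changes; it just evaluates whichever chain it is given.
function requiredApprovers(steps: readonly ApprovalStep[], req: ExpenseRequest): string[] {
  return steps
    .filter((s) => s.minAmount === undefined || req.amount >= s.minAmount)
    .map((s) => s.approverRole);
}

// Two-person startup flow.
const startupChain: ApprovalStep[] = [{ approverRole: "manager" }];

// Later, multi-department flow: same function, longer configuration.
const grownChain: ApprovalStep[] = [
  { approverRole: "manager" },
  { approverRole: "finance", minAmount: 1_000 },
  { approverRole: "compliance", minAmount: 10_000 },
];
```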

Finally, focus on delivering only the data users actually need. Overloading interfaces with unnecessary information slows performance and clutters the experience. Role-based views can help keep things streamlined, ensuring fast load times even when hundreds of users are accessing data simultaneously.
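Here is a minimal sketch of role-based views, assuming each role has a fixed list of columns. The role and column names are hypothetical. In practice, the same projection belongs in the server-side query so unneeded fields never leave the database. The example runs the projection in memory only to stay self-contained.

```typescript
type Row = Record<string, unknown>;

// Hypothetical per-role column lists: each view carries only what that role uses.
const viewColumns: Record<string, readonly string[] | undefined> = {
  salesRep: ["customer", "dealStage", "nextStep"],
  executive: ["region", "quarterRevenue"],
};

// Returns copies of the rows that contain only the role's columns.
function projectForRole(rows: readonly Row[], role: string): Row[] {
  const columns = viewColumns[role];
  if (columns === undefined) throw new Error(`No view defined for role "${role}"`);
  return rows.map((row) => {
    const slim: Row = {};
    for (const col of columns) {
      if (col in row) slim[col] = row[col];
    }
    return slim;
  });
}
```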

Once you’ve mapped out user needs, it’s time to decide whether to build a custom tool or use an app builder platform.

Build vs. Buy: Making the Right Choice

With workflows defined, you’ll need to weigh the pros and cons of custom development versus app builder platforms. Custom development often requires a large engineering team, a bigger budget, and months to deliver a single tool. On the other hand, app builder platforms can cut that timeline dramatically - some tools can be created in under an hour.

Cost is another factor. Custom tools come with ongoing maintenance expenses, including updates and security fixes. App builders, however, offer predictable, subscription-based pricing.

| Factor | Custom Development | App Builder Platforms |
|---|---|---|
| Development Speed | 1–2 months on average | 30–60 minutes for operational tools |
| Maintenance | High; requires dedicated engineering | Low; provider handles updates |
| Scalability | Manual scaling | Automatic, cloud-native scaling |
| Cost Structure | High upfront and ongoing costs | Predictable subscription pricing |

Custom development makes sense when your tool needs to meet highly specific technical requirements that off-the-shelf platforms can’t handle. But for most internal tools - like dashboards, approval workflows, or data entry systems - app builders are faster, cheaper, and easier to scale.

Integration is another critical consideration. Check whether a platform offers open APIs and pre-built connectors for your existing tools, like Microsoft 365, Slack, or legacy databases. Platforms like Adalo can integrate with data sources such as Airtable, Google Sheets, MS SQL Server, and PostgreSQL. They even support systems without APIs through DreamFactory integration [blue.adalo.com]. This prevents data silos and avoids the "integration debt" that often comes with custom solutions.

Lastly, evaluate the platform’s admin capabilities. Can department heads manage their own content and permissions without IT’s help? And make sure the platform supports unlimited concurrent users, not just total users, to avoid crashes during high-traffic events like all-hands meetings.

Designing for Scale

When it comes to creating tools that can grow with your needs, modular design is the key. By breaking your tool into separate modules - like UI, business logic, and data - you can make updates or changes without impacting the entire system.

Picture it like building with LEGO bricks instead of carving a sculpture from one solid block. This approach, known as loose coupling, means each part of your system communicates through clear interfaces rather than being tightly bound to the others. You can then replace or upgrade individual components without tearing everything apart. For older, monolithic systems, you can shift to modular services gradually using the "Strangler Fig" pattern: a routing layer sends each feature either to the legacy system or to its new replacement, and features move over one at a time until the old system can be retired.
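The sketch below illustrates both ideas under simple assumptions. The legacy and new implementations share one hypothetical `BillingApi` interface, which is the loose coupling. A facade then routes each feature to whichever system currently owns it, which is a Strangler Fig-style migration. Real facades usually sit at the HTTP or API-gateway layer rather than in application code.

```typescript
// One interface, two implementations: callers never know which system answered.
interface BillingApi {
  getInvoice(id: string): string;
  listCustomers(): string[];
}

type Feature = keyof BillingApi;

// Routes each feature to the new service once it has been migrated, else to the legacy one.
class StranglerFacade implements BillingApi {
  constructor(
    private readonly legacy: BillingApi,
    private readonly modern: BillingApi,
    private readonly migrated: ReadonlySet<Feature>,
  ) {}

  private pick(feature: Feature): BillingApi {
    return this.migrated.has(feature) ? this.modern : this.legacy;
  }

  getInvoice(id: string): string {
    return this.pick("getInvoice").getInvoice(id);
  }

  listCustomers(): string[] {
    return this.pick("listCustomers").listCustomers();
  }
}

// Hypothetical stand-ins for the monolith and its replacement.
const legacyBilling: BillingApi = {
  getInvoice: (id) => `legacy invoice ${id}`,
  listCustomers: () => ["legacy customer"],
};
const modernBilling: BillingApi = {
  getInvoice: (id) => `modern invoice ${id}`,
  listCustomers: () => ["modern customer"],
};

// Invoices have moved; customer listing is still served by the monolith.
const billing = new StranglerFacade(legacyBilling, modernBilling, new Set<Feature>(["getInvoice"]));
```

Migrating the next feature is a one-line change to the `migrated` set, and rolling back is the same change in reverse.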

Another advantage of modular design is reusability. Encapsulate logic and UI elements so they can be reused across your applications. This approach reduces redundancy and simplifies updates - fix a component once, and the changes automatically apply wherever it’s used. Pair this with a standardized design language - consistent navigation, color schemes, and naming conventions - and you’ll not only make onboarding easier for users but also boost developer efficiency. These strategies align with earlier capacity planning efforts, helping your system handle growing user demand without slowing down.

Performance matters, too. Aim to keep query payloads under 1.6 MB and query runtimes under 3 seconds. If your tool needs to display large datasets, use server-side pagination so the browser never loads everything at once. Client-side tables handle up to about 1,000 rows comfortably. Beyond that they start to lag, and past 5,000 rows the slowdown becomes severe.
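Below is a minimal sketch of keyset (cursor) pagination, one common form of server-side pagination. It assumes records are ordered by a unique numeric `id`. The in-memory array stands in for a database table, and the SQL in the comment shows the query it approximates. An `OFFSET` query has to walk past every skipped row. A keyset query can instead use an index on `id` to seek straight to the next page.

```typescript
interface Order {
  id: number;
  total: number;
}

interface Page<T> {
  items: T[];
  nextCursor: number | null; // send back to get the following page; null means done
}

// Keyset pagination. The array stands in for a table; against a database this would be
//   SELECT id, total FROM orders WHERE id > $1 ORDER BY id LIMIT $2
// and an index on id lets the database seek directly to the cursor.
function fetchOrdersPage(
  table: readonly Order[],
  afterId: number | null,
  pageSize: number,
): Page<Order> {
  if (pageSize < 1) throw new Error("pageSize must be at least 1");
  const remaining = table
    .filter((o) => afterId === null || o.id > afterId)
    .sort((a, b) => a.id - b.id);
  const items = remaining.slice(0, pageSize);
  const hasMore = remaining.length > pageSize;
  return { items, nextCursor: hasMore ? items[items.length - 1].id : null };
}
```

The client only ever holds one page (here, at most 1,000 rows), which keeps it inside the comfortable range described above.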

Modular Design and Component Reusability

Expanding on the modular approach, designing for component reusability can significantly reduce maintenance headaches. By breaking your system into small, independent units, you can test, update, and deploy each module without disrupting the entire application. This is especially useful for tools that handle multiple tasks - like managing inventory while supporting customer service - ensuring one function doesn’t bog down the other.

When updates or bug fixes are needed, you modify the module, and every app using it benefits immediately. Standardization plays a crucial role here. Consistency in navigation, button placement, and color schemes makes it easier for users to switch between tools without needing to relearn interfaces. For developers, starting with standardized templates ensures every new tool is built on a secure, compliant foundation, complete with pre-approved permissions and deployment settings.

For more complex tools, consider splitting single-page applications into multi-page architectures. This approach improves load times and simplifies maintenance, especially when different teams are responsible for various sections of the tool. Shift heavy tasks like data transformations, filtering, and sorting to the server side to keep the client-side running smoothly.

| Performance Metric | Moderate Impact Threshold | Severe Impact Threshold |
|---|---|---|
| Query Payload Size | > 1.6 MB | > 3 MB |
| Query Runtime | > 3 seconds | > 5 seconds |
| Client-Side Table Rows | > 1,000 rows | > 5,000 rows |
| Transformer Runtime | > 200 ms | > 500 ms |
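The thresholds in this table can double as an automated performance budget, for example in a CI check or a monitoring alert. The sketch below encodes them as data. The metric key names and unit suffixes are naming assumptions. Values exactly at a threshold count as within budget, because the table uses a strict "greater than".

```typescript
type Impact = "ok" | "moderate" | "severe";

// Thresholds from the performance table; the unit is part of each key name.
const thresholds = {
  queryPayloadMB: { moderate: 1.6, severe: 3 },
  queryRuntimeSec: { moderate: 3, severe: 5 },
  clientTableRows: { moderate: 1_000, severe: 5_000 },
  transformerRuntimeMs: { moderate: 200, severe: 500 },
} as const;

type Metric = keyof typeof thresholds;

// Values strictly above a threshold trigger it, matching the table's ">" signs.
function classify(metric: Metric, value: number): Impact {
  const t = thresholds[metric];
  if (value > t.severe) return "severe";
  if (value > t.moderate) return "moderate";
  return "ok";
}

// Example budget check for one screen's (hypothetical) measurements.
const measured: Record<Metric, number> = {
  queryPayloadMB: 0.8,
  queryRuntimeSec: 3.4,
  clientTableRows: 6_200,
  transformerRuntimeMs: 120,
};
const report = (Object.keys(measured) as Metric[]).map((m) => `${m}: ${classify(m, measured[m])}`);
```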

Role-Based Access Control and Security

While modular design ensures scalability, Role-Based Access Control (RBAC) keeps your system secure as it grows. Managing individual user permissions quickly becomes overwhelming. RBAC simplifies this by grouping users into roles like "Sales Rep", "Manager", or "Admin." Permissions are assigned to roles, not individuals, so when someone joins or changes teams, you only need to assign or change their role. Because no one accumulates one-off permissions outside their role, this approach also reduces the risk of unauthorized changes.
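Here is a minimal RBAC check. It assumes permissions are plain strings attached to roles and users carry a list of role names. All role and permission names are hypothetical. Changing someone’s access means changing their roles, never editing individual permissions.

```typescript
type Permission = "customers:read" | "invoices:read" | "invoices:write" | "hr:read";

// Permissions are attached to roles, never directly to individual users.
const rolePermissions: Record<string, ReadonlySet<Permission> | undefined> = {
  salesRep: new Set<Permission>(["customers:read"]),
  financeManager: new Set<Permission>(["invoices:read", "invoices:write"]),
  admin: new Set<Permission>(["customers:read", "invoices:read", "invoices:write", "hr:read"]),
};

interface User {
  name: string;
  roles: string[];
}

// A user may act if any of their roles grants the permission; unknown roles grant nothing.
function can(user: User, permission: Permission): boolean {
  return user.roles.some((role) => rolePermissions[role]?.has(permission) ?? false);
}
```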

Centralize user authentication with Single Sign-On (SSO) solutions like Okta or Microsoft Active Directory. SSO streamlines login. Combined with RBAC, it lets you enforce the Principle of Least Privilege, so users only have access to what they need to do their jobs. For example, a sales rep might only view customer data, while a finance manager can edit invoices but won’t see HR records.

To enhance security further, define scope policies based on risk. Low-risk actions, like sending messages, can be unrestricted. Moderate-risk tasks, such as accessing customer data, might require approval, while high-risk actions, like deleting databases, should be limited to a small group of trusted admins. Automate security audits to flag permissions that are too broad or detect unauthorized users.
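The risk tiers can be written down as a small policy function. This is a sketch under assumptions: the action names, the approval flag, and the admin allow-list are hypothetical. Unknown actions are denied by default (fail closed), so new capabilities stay restricted until someone classifies them.

```typescript
type Risk = "low" | "moderate" | "high";
type Decision = "allow" | "needs_approval" | "deny";

// Hypothetical action catalog: each action is assigned a risk tier once, centrally.
const actionRisk: Record<string, Risk | undefined> = {
  "message.send": "low",
  "customer.read": "moderate",
  "database.delete": "high",
};

// Hypothetical allow-list of trusted admins for high-risk actions.
const trustedAdmins: ReadonlySet<string> = new Set(["alice"]);

function evaluate(userId: string, action: string, approved: boolean): Decision {
  const risk = actionRisk[action];
  if (risk === undefined) return "deny"; // unclassified actions fail closed
  if (risk === "low") return "allow";
  if (risk === "moderate") return approved ? "allow" : "needs_approval";
  // High risk: only the small group of trusted admins, approval or not.
  return trustedAdmins.has(userId) ? "allow" : "deny";
}
```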

Auditability is essential as well. Every action should be traceable to a specific user and role, making it easier to monitor access and meet compliance standards as you scale. Use separate Development, Testing, Staging, and Production environments to ensure live data remains secure. For external API integrations, avoid hardcoding tokens; instead, use secrets management tools like HashiCorp Vault or AWS Secrets Manager to manage credentials securely.

| Feature | Individual Permissions | Role-Based Access Control (RBAC) |
|---|---|---|
| Management Effort | High; requires manual updates for every user. | Low; permissions updated by role/group. |
| Scalability | Poor; unmanageable as user count grows. | High; supports large user bases easily. |
| Security Risk | High; prone to errors and "permission creep." | Low; enforces Least Privilege principle. |
| Auditability | Difficult; hard to track user access. | Simple; access tied to defined roles. |

Integrating with Existing Data Sources

The usefulness of your internal tools depends heavily on the data they can access. Many organizations have crucial information locked away in databases, spreadsheets, or older systems that predate modern integration standards. The real hurdle isn’t just connecting to these sources - it’s doing so in a way that can grow with your team and handle increasing data volumes. Here’s a look at some effective methods for integrating diverse data sources into a scalable tool environment.

Connecting to Databases and Legacy Systems

Start by examining the systems you already have. Modern app builders can directly connect to popular databases like PostgreSQL, MS SQL Server, MySQL, and even cloud-based tools such as Airtable and Google Sheets. This eliminates the need to duplicate data or maintain outdated copies. When linking to databases, focus on querying only the fields you need. This keeps payloads manageable and ensures quick response times, which are crucial as your user base and data grow.
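When the database is PostgreSQL, one safe way to query only the fields you need has two parts. First, allow-list column names, because identifiers cannot be bound as parameters. Second, pass values as numbered placeholders. The sketch builds a `{ text, values }` object, the shape node-postgres accepts in `client.query()`. The `customers` table and its columns are hypothetical.

```typescript
// Shape accepted by node-postgres: client.query({ text, values }).
interface QueryConfig {
  text: string;
  values: unknown[];
}

// Column names cannot be bound as parameters, so they are checked against an allow-list.
const customerColumns: ReadonlySet<string> = new Set(["id", "name", "region", "owner_id"]);

function selectCustomers(columns: string[], region: string, limit: number): QueryConfig {
  if (columns.length === 0) throw new Error("Select at least one column");
  for (const c of columns) {
    if (!customerColumns.has(c)) throw new Error(`Column not allowed: ${c}`);
  }
  // Values travel separately as $1, $2 placeholders, never spliced into the SQL text.
  return {
    text: `SELECT ${columns.join(", ")} FROM customers WHERE region = $1 ORDER BY id LIMIT $2`,
    values: [region, limit],
  };
}
```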

Legacy systems, however, can be trickier. Many older enterprise resource planning (ERP) systems and mainframes were built long before APIs became standard. Without APIs, you’ll need alternative integration strategies. Here are a few options to consider:

- Database-level integration: Directly query the legacy system's database for fast access. However, this method can be fragile - any changes to the database schema might disrupt your connection.

- File-based integration: Use CSV or XML exports for batch updates. This is ideal for nightly updates when real-time synchronization isn’t critical.

- Robotic Process Automation (RPA): Simulate user interactions with systems that lack programmatic access. While effective for some tasks, this method is prone to breaking with even minor changes to the user interface.

"Screen scraping is highly brittle and demands constant maintenance." - Dheeraj Vema

A more scalable solution is to wrap legacy systems with APIs using tools like DreamFactory. This creates a modern interface layer, allowing these older systems to function as standard endpoints without altering their core structure. Adalo, for instance, integrates with DreamFactory through Adalo Blue, enabling teams to pull data from legacy systems directly into their apps.

When choosing an integration method, think about whether you need synchronous or asynchronous communication. Synchronous methods, like "Request and Reply", are best when users need immediate responses. Asynchronous methods, like "Fire and Forget", work better for background processes and help reduce traffic bottlenecks during peak usage.
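Request and Reply is an ordinary awaited call. Fire and Forget needs somewhere to put the work. The sketch below uses a hypothetical in-memory queue as a stand-in for a real message broker. A caller can enqueue a report job and return immediately, while a worker drains a bounded batch at a time.

```typescript
interface ReportJob {
  reportId: string;
}

// Hypothetical in-memory stand-in for a message broker queue.
class JobQueue<T> {
  private readonly jobs: T[] = [];

  // Fire and forget: the caller enqueues and returns without waiting for the work.
  enqueue(job: T): void {
    this.jobs.push(job);
  }

  // A background worker processes at most `maxBatch` jobs per pass, so a burst of
  // requests becomes a steady stream of work instead of a traffic spike.
  drain(handler: (job: T) => void, maxBatch: number): number {
    const batch = this.jobs.splice(0, maxBatch);
    for (const job of batch) handler(job);
    return batch.length;
  }

  get pending(): number {
    return this.jobs.length;
  }
}
```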

Unified Updates Across Platforms

Once your data sources are connected, the next step is ensuring updates are consistent across all platforms. For instance, if a sales rep updates a customer record on their phone, that change should instantly show up on the web dashboard and in reports. Traditional approaches often require separate builds for iOS, Android, and web, which can result in "logic drift" as each platform handles data differently.

Single-codebase platforms eliminate this issue. With Adalo, for example, you only need to build your app once. Any updates to the database, business logic, or interface automatically apply across web, iOS, and Android. This unified approach not only saves time but also ensures users see consistent information, regardless of how they access your tool.

To maintain speed and reliability, push heavy data transformations to the server. Shared query libraries can further standardize how data is fetched, metrics are calculated, or records are filtered. This means that any update to these processes benefits all connected tools immediately.
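As an illustration of a shared query library, the sketch defines a hypothetical "win rate" metric that every tool imports instead of re-implementing. The `Deal` shape is an assumption. What matters is that the business definition lives in exactly one place.

```typescript
// Hypothetical shared module: every internal tool imports these functions instead of
// re-implementing the metric, so a definition change reaches all of them at once.
interface Deal {
  amount: number;
  stage: "open" | "won" | "lost";
}

// Win rate = won deals / closed (won + lost) deals; open deals are excluded.
function winRate(deals: readonly Deal[]): number {
  const closed = deals.filter((d) => d.stage !== "open");
  if (closed.length === 0) return 0;
  const won = closed.filter((d) => d.stage === "won").length;
  return won / closed.length;
}

// Revenue counts only won deals.
function wonRevenue(deals: readonly Deal[]): number {
  return deals
    .filter((d) => d.stage === "won")
    .reduce((sum, d) => sum + d.amount, 0);
}
```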

"Applications should eliminate direct access to data, instead relying on automation and mechanisms to standardize data retrieval and modification." - Retool Well-Architected Framework

Optimizing Performance at Scale

As your team grows and internal tools handle increasing amounts of data, maintaining performance becomes essential. What works seamlessly for a small group can grind to a halt under heavy usage. The difference between a small-scale pilot and a fully scalable solution often lies in proactively identifying and addressing bottlenecks before they impact productivity. Here’s how to keep your tools running smoothly as demand scales.

AI-Driven Performance Analysis

Modern diagnostics help catch performance issues early, during the development phase. These tools analyze how your app behaves and highlight specific problems, such as oversized data payloads, slow-running queries, or browser resource bottlenecks. By pinpointing these issues, you can make fixes before they snowball into larger problems.

Diagnostics also complement modular design in keeping tools responsive under heavy load. Small data payloads and efficient queries keep interfaces smooth, while large data transfers or slow processes lead to sluggish user experiences. Something as simple as keeping browser-rendered tables at reasonable row counts can prevent the scrolling lag that comes from overloading the browser.

One key tactic is offloading resource-intensive tasks to the server. Server-side processing keeps the interface quick and reduces the strain on user devices. Running independent queries in parallel, for example with Promise.all() in JavaScript, can cut load times further because the requests run concurrently instead of one after another. Queries that depend on each other’s results still need to run in sequence.
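Simulated queries make the difference between sequential and parallel fetching easy to see. In this sketch, `fakeQuery` is a stand-in that resolves after a delay. With two independent 50 ms queries, the sequential version takes roughly 100 ms and the parallel one roughly 50 ms. Note that `Promise.all` rejects as soon as any query fails. `Promise.allSettled` is the alternative when partial results are acceptable.

```typescript
// Stand-in for a database call that takes `ms` milliseconds.
function fakeQuery<T>(value: T, ms: number): Promise<T> {
  return new Promise<T>((resolve) => setTimeout(() => resolve(value), ms));
}

// Sequential: each await waits for the previous query, so ~100 ms in total.
async function loadDashboardSequential(): Promise<{ orders: number; tickets: number }> {
  const orders = await fakeQuery(120, 50);
  const tickets = await fakeQuery(8, 50);
  return { orders, tickets };
}

// Parallel: both independent queries start immediately, so ~50 ms in total.
async function loadDashboardParallel(): Promise<{ orders: number; tickets: number }> {
  const [orders, tickets] = await Promise.all([fakeQuery(120, 50), fakeQuery(8, 50)]);
  return { orders, tickets };
}
```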

Caching and Auto-Scaling

After diagnosing bottlenecks, caching and auto-scaling provide powerful solutions to address them in real time. Caching stores frequently accessed data, eliminating the need to repeatedly fetch it from the database. This is especially useful for internal tools where certain dashboards or reports are accessed multiple times a day. For mobile apps, enabling caching options like "Cache page load" ensures field workers can continue using tools even in areas with poor connectivity.
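Here is a minimal read-through cache with a time-to-live (TTL). It assumes slightly stale data is acceptable for the cached reports. The clock is injected so expiry is easy to test. This version does not deduplicate concurrent misses, so two simultaneous requests for a cold key would both hit the database.

```typescript
// Read-through cache with a time-to-live. `now` is injectable so expiry can be tested.
class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();

  constructor(
    private readonly ttlMs: number,
    private readonly now: () => number = Date.now,
  ) {}

  async getOrLoad(key: string, load: () => Promise<V>): Promise<V> {
    const hit = this.entries.get(key);
    if (hit !== undefined && hit.expiresAt > this.now()) {
      return hit.value; // fresh entry: skip the database entirely
    }
    const value = await load(); // miss or expired: query once and remember the result
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }
}
```

For a dashboard KPI that may be up to five minutes old, `new TtlCache<number>(5 * 60_000)` would serve repeat views from memory and query the database at most once per five minutes per key.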

Auto-scaling, on the other hand, dynamically adjusts your infrastructure to meet demand. For instance, during peak usage - such as Monday mornings or end-of-month reporting - additional server instances can spin up to handle the spike in traffic. Once demand decreases, those resources scale back down, ensuring consistent performance without wasting hardware capacity.
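Most auto-scalers use some variant of proportional target tracking. The sketch below applies the rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current * currentMetric / targetMetric), to a CPU target. It then clamps the result to minimum and maximum instance counts. The policy numbers are illustrative. Real autoscalers also add tolerances and cooldowns, which are omitted here.

```typescript
interface ScalingPolicy {
  targetCpuPercent: number; // desired average CPU per instance
  minInstances: number;
  maxInstances: number;
}

// Proportional rule as documented for the Kubernetes Horizontal Pod Autoscaler:
// desired = ceil(current * currentMetric / targetMetric), clamped to the policy bounds.
function desiredInstances(current: number, avgCpuPercent: number, p: ScalingPolicy): number {
  const raw = Math.ceil((current * avgCpuPercent) / p.targetCpuPercent);
  return Math.min(p.maxInstances, Math.max(p.minInstances, raw));
}

const policy: ScalingPolicy = { targetCpuPercent: 60, minInstances: 2, maxInstances: 12 };
```

For example, a Monday-morning load of 90% CPU across 4 instances yields 6 instances. Once load falls to 20% across 6 instances, the rule scales back down to 2.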

To handle high data volumes, scaling workflow processors is equally important. Increasing concurrency limits - for example, setting WORKFLOW_TEMPORAL_CONCURRENT_TASKS_LIMIT to 100 - prevents tasks from queuing up during busy periods. With these strategies, your tools can grow alongside your team, managing increased demands without constant manual intervention.


Security and Governance at Scale

As internal tools grow in complexity, so do the security risks. Scaling securely means moving beyond isolated safeguards to integrated systems for identity and compliance. Without these measures, a single compromised credential could result in millions of dollars in losses and expose sensitive information. In 2024, the average cost of a data breach hit $4.88 million, with human error or negligence playing a role in 68% of incidents. For remote-centric organizations, the stakes are even higher, as breach costs tend to be more severe.

The key to secure scaling lies in centralized identity management. Single Sign-On (SSO) replaces multiple sets of credentials with one, which reduces the chances of password-related errors. SSO solutions such as Okta or Microsoft Active Directory provide a single authentication point. But SSO on its own only establishes who a user is; it needs to be paired with a permissions strategy that controls what each user can do.

Single Sign-On and Permissions Management

SSO, when paired with Role-Based Access Control (RBAC), enforces the principle of least privilege. This approach ensures that users and services only have the permissions necessary to perform their specific roles. For example, a marketing analyst doesn’t need access to payroll data, and a field technician shouldn’t have the ability to modify inventory pricing. Limiting permissions in this way significantly reduces the damage a compromised account can cause.

Rather than granting direct access to raw data, group actions into standardized permission levels. These levels might include "Always allowed" for low-risk actions (e.g., viewing basic reports), "Requires approval" for sensitive access (e.g., customer records), and "Restricted" for high-risk administrative changes.

To further minimize risks, token rotation can automatically renew and expire credentials, narrowing the window of exposure for compromised tokens. Adding IP restrictions that allow access to internal tools only from authorized address ranges creates an additional layer of defense. Tools like AWS Secrets Manager or HashiCorp Vault help secure tokens by injecting them at runtime, avoiding the risks of hardcoded credentials.
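Runtime injection and rotation can be combined in one small wrapper. In this sketch, `fetchFromSecretStore` is a hypothetical hook where a call to your secrets manager would go. The wrapper caches the credential and fetches a fresh one shortly before it expires. No token is ever hardcoded or used past its lifetime.

```typescript
interface Credential {
  token: string;
  expiresAt: number; // epoch milliseconds
}

// Caches a short-lived credential and fetches a fresh one shortly before it expires.
// `fetchFromSecretStore` is a hypothetical hook where a secrets-manager call would go.
class RotatingCredential {
  private current: Credential | null = null;

  constructor(
    private readonly fetchFromSecretStore: () => Promise<Credential>,
    private readonly refreshBeforeMs: number,
    private readonly now: () => number = Date.now,
  ) {}

  async token(): Promise<string> {
    let c = this.current;
    if (c === null || c.expiresAt - this.now() <= this.refreshBeforeMs) {
      c = await this.fetchFromSecretStore(); // injected at runtime, never hardcoded
      this.current = c;
    }
    return c.token;
  }
}
```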

Maintaining Audit Trails and Version Control

Secure access is just one piece of the puzzle. Transparent monitoring is essential for scalable governance. Comprehensive audit trails provide visibility into user actions, configuration changes, and data access. These logs should include metadata - such as who made the change, when it occurred, what was modified, and the originating IP address. Such detailed records are vital for compliance and for investigating potential security incidents.
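Below is a sketch of an audit record that carries the metadata listed above (who, when, what, and source IP), plus the role the action was performed under. The in-memory, append-only array is an assumption for illustration. A production trail would be written to tamper-resistant storage.

```typescript
interface AuditEvent {
  actor: string; // who made the change
  role: string; // which role they acted under
  action: string; // what kind of change
  target: string; // what was modified
  at: string; // when, as an ISO 8601 UTC timestamp
  sourceIp: string; // originating IP address
}

class AuditLog {
  private readonly events: AuditEvent[] = [];

  // Append-only: entries are frozen and there is no update or delete method.
  record(e: Omit<AuditEvent, "at">, now: Date = new Date()): AuditEvent {
    const event = Object.freeze({ ...e, at: now.toISOString() });
    this.events.push(event);
    return event;
  }

  byActor(actor: string): readonly AuditEvent[] {
    return this.events.filter((ev) => ev.actor === actor);
  }
}
```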

For internal tool configurations, version control systems like Git are invaluable. Requiring Pull Requests for changes (for example, through branch protection rules) ensures that every modification undergoes human review before deployment. This process creates a clear history of changes and also allows for rapid rollbacks to a stable state if needed.

Rapidly growing organizations can also benefit from automated compliance monitoring to catch issues early. Tools like AWS Config continuously assess resource configurations against governance standards, flagging any violations automatically. For example, Snowflake implemented a centralized dashboard for managing user access to internal tools, which reduced errors and manual remediation tickets by 65%. Regular automated audits of app scopes and collaborators help ensure access remains aligned with organizational policies as teams expand.
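A scheduled access audit like the one described can start very small. The sketch below flags two hypothetical conditions: grants held by people no longer in the company directory, and grants that use a wildcard scope. The grant shape and the `"*"` convention are assumptions, not any particular product’s format.

```typescript
interface AppGrant {
  user: string;
  app: string;
  scopes: string[];
}

interface Finding {
  user: string;
  app: string;
  reason: "not in directory" | "wildcard scope";
}

// Flags grants for departed users and grants that use a catch-all scope.
function auditGrants(grants: readonly AppGrant[], directory: ReadonlySet<string>): Finding[] {
  const findings: Finding[] = [];
  for (const g of grants) {
    if (!directory.has(g.user)) {
      findings.push({ user: g.user, app: g.app, reason: "not in directory" });
    }
    if (g.scopes.includes("*")) {
      findings.push({ user: g.user, app: g.app, reason: "wildcard scope" });
    }
  }
  return findings;
}
```

Run on a schedule, the findings list can feed a ticket queue or a dashboard like the one described above.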

Building Scalable Internal Tools with Adalo

Creating internal tools that can scale effectively requires a platform that combines speed with enterprise-level reliability. Adalo rises to the challenge with its AI-driven app generation and solid infrastructure, allowing teams to deploy functional apps in just days. By leveraging a single-codebase architecture, Adalo ensures that updates apply seamlessly across web, iOS, and Android platforms. This eliminates the fragmented user experiences that often complicate internal tool ecosystems, making it easier to maintain consistency and efficiency.

AI-Assisted App Generation

Adalo's AI Builder transforms simple natural language prompts into fully functional applications. Whether a team needs an inventory tracker or a field service management tool, they can describe the requirements, and the AI handles the rest - building everything from the database structure to user flows and screens. This process reduces manual effort, standardizes development, and minimizes the errors typically associated with traditional coding methods.

Maintenance tasks, which often drain developer resources, are also streamlined through AI. The platform automatically generates deployment notes and solution summaries, sparing teams from tedious documentation work. For example, if a team wants to add a new approval workflow, they can describe the feature in plain language, and Adalo integrates it directly into the app - no coding or complex configurations required.

Enterprise Features with Adalo Blue

Fast development is only part of the equation; enterprise-level security and integration are equally critical. Adalo Blue delivers these capabilities with features like Single Sign-On (SSO), Active Directory integration, and Role-Based Access Control (RBAC). These tools ensure centralized user management and enforce least-privileged access from the outset, avoiding the need for retrofitted security measures later.

Additionally, Adalo Blue simplifies working with legacy systems through DreamFactory, which enables teams to create unified dashboards even when dealing with outdated or API-limited systems. This means valuable data can be surfaced and utilized without the need for costly system overhauls.

Scaling Infrastructure for High MAU

Adalo's modular infrastructure is designed to handle growth, supporting over 1 million monthly active users with ease. By standardizing the software stack across all platforms, the platform ensures consistent performance no matter the scale. For organizations with particularly high usage demands, Adalo Blue offers dedicated infrastructure and on-premise deployment options to maintain reliability as user numbers climb.

To prevent performance issues, Adalo includes an AI-powered X-Ray feature that identifies bottlenecks during development. By catching these issues early, teams can avoid costly fixes post-launch and ensure a smooth experience for users, even at scale.

Conclusion

Creating scalable internal tools isn’t about guessing every future need - it’s about starting with the right building blocks. A solid foundation rooted in modular design, server-side performance optimization, and seamless integration with existing systems lays the groundwork for tools that grow with your organization. By standardizing governance frameworks, using role-based access controls, and keeping development and production environments separate, you're not just building tools - you’re crafting infrastructure that evolves without expensive overhauls.

The key to tools that thrive under pressure often comes down to early architectural choices. Strategies like the scale-unit approach and server-side pagination keep applications fast and responsive, even when handling thousands of records. The Snowflake access-management example earlier in this guide shows how investment in internal tooling can produce measurable results.

Platforms like Adalo make this process faster and more efficient. With enterprise-ready features like SSO, RBAC, and AI-powered performance analysis baked in, you can go from prototype to production in hours. Scale effortlessly to support over 1 million monthly active users, while tools like the AI Builder automate repetitive tasks, and X-Ray identifies performance issues before they become problems. By embracing these solutions, your internal tools can grow alongside your business, tackling challenges without missing a beat.

FAQs

How does modular design help create scalable internal tools?

Modular design simplifies the creation of scalable internal tools by dividing them into smaller, self-contained components. Each module can be developed, tested, and updated independently, making it easier to introduce new features or enhance functionality without disrupting the rest of the system. This approach ensures your tools can grow smoothly alongside your business needs.

Breaking tools into separate modules also boosts performance. Teams can pinpoint and resolve bottlenecks faster, fine-tune specific components, and allocate resources more effectively. Plus, modular design supports quicker, step-by-step development, enabling organizations to release updates or new features rapidly while keeping systems reliable and efficient. It’s a smart way to build tools that evolve effortlessly with changing demands.

What are the advantages of using Role-Based Access Control (RBAC) to secure internal tools?

Role-Based Access Control (RBAC) brings a range of advantages when it comes to securing internal tools. By tying access permissions to specific roles rather than individuals, it ensures that users can only interact with the data and features relevant to their job duties. This reduces the likelihood of unauthorized access, insider risks, or potential data breaches, giving organizations tighter control over sensitive information.

Another key benefit of RBAC is how it simplifies compliance. With clearly defined access policies, organizations can easily demonstrate adherence to regulations like GDPR, HIPAA, or ISO 27001. These policies are straightforward to audit, making regulatory requirements less of a headache. On top of that, RBAC improves operational efficiency. Instead of managing permissions for each user manually, administrators can assign them at the role level. This not only saves time but also ensures consistency and makes scaling easier as teams expand or roles shift.

In short, RBAC strengthens security, simplifies compliance efforts, and reduces the complexity of managing permissions, making it an essential tool for building secure and scalable internal systems.

Why is it essential to plan for scalability when building internal tools?

Planning for growth from the beginning ensures your internal tools can expand alongside your business without sacrificing performance. As user demands grow, data volumes increase, and operations become more complex, tools built to scale help avoid slowdowns, outages, or costly system overhauls.

By considering scalability early on, you set your tools up to handle future challenges, adjusting to evolving needs without wasting time or resources. This forward-thinking strategy keeps your operations efficient and supports smooth, uninterrupted growth.
